Security KPIs for Measuring Risk in Large Language Model Programs

When you deploy a large language model (LLM) in your business, whether for customer support, code generation, or internal research, you’re not just adding a tool. You’re opening a new attack surface. Unlike traditional software, LLMs don’t just run code. They interpret language, generate responses, and sometimes even write their own instructions. That’s powerful. But it’s also dangerous. And if you’re measuring security the same way you did for web apps or databases, you’re flying blind.

Why Traditional Security Metrics Fail for LLMs

Most security teams rely on metrics like firewall logs, intrusion detection alerts, or patch compliance rates. These work fine for systems that follow predictable rules. LLMs don’t. They respond to ambiguous inputs. They hallucinate facts. They can be tricked into revealing private data with a cleverly worded prompt. A 2024 IBM report found that organizations using only legacy security KPIs for their LLMs had 40-60% visibility gaps when it came to real threats.

For example, a model might pass a standard vulnerability scan because it doesn’t have SQL injection flaws in its code. But if a user types, "Rewrite this SQL query to dump all customer emails," and the model complies, that’s not a code flaw; it’s a security failure. Traditional tools won’t catch it. You need metrics that measure how the model behaves, not just how it’s built.

The Three Pillars of LLM Security KPIs

By early 2024, industry leaders like Sophos, Google Cloud, and Fiddler AI converged on a three-part framework for measuring LLM risk: Detection, Response, and Resilience. These aren’t vague goals. They’re measurable, trackable, and tied to real-world attack patterns defined by the OWASP Top 10 for LLM Applications.

- Detection: How quickly and accurately does your system spot malicious inputs? This includes identifying jailbreak prompts, data extraction attempts, and prompt injection attacks.

- Response: Once a threat is detected, how well does your system block, filter, or redirect it? Are guardrails fast enough? Do they preserve user experience?

- Resilience: Can your system recover? If an attacker corrupts context or triggers a denial-of-service, how fast does it return to normal operation?

These aren’t theoretical. They’re backed by data. Organizations that track all three pillars report 37% fewer successful LLM-related incidents than those relying on guesswork or outdated tools.

Key Technical KPIs You Must Track

Here are the metrics that matter: no fluff, no buzzwords. These are the numbers security teams in finance, healthcare, and tech are using right now.

1. True Positive Rate (TPR) for Threat Detection

This measures how many real attacks your system catches. The target? 95% or higher. If you’re detecting only 80 out of 100 prompt injection attempts, you’re missing 20% of threats. That’s not acceptable. AIQ’s 2024 whitepaper shows that dropping below 95% TPR leads to a sharp rise in successful data leaks.

2. False Positive Rate (FPR)

But don’t optimize for TPR alone. If your system flags 83% of legitimate user queries as malicious, your team will ignore alerts entirely. The goal: keep FPR below 5%. One enterprise security engineer on Reddit reported that setting FPR too low caused his team to miss 22% of real attacks. Too high? Analysts stop paying attention. Balance is everything.
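As a minimal sketch of how these two rates can be computed, the snippet below assumes you already have a labeled evaluation set and have counted the four confusion-matrix outcomes for your guardrail. The class and function names are illustrative, not taken from any vendor tool.

```python
from dataclasses import dataclass


@dataclass
class DetectionCounts:
    """Confusion-matrix counts for a guardrail run on labeled prompts."""
    true_positives: int   # attacks correctly flagged
    false_negatives: int  # attacks that slipped through
    false_positives: int  # legitimate prompts wrongly flagged
    true_negatives: int   # legitimate prompts correctly allowed


def true_positive_rate(c: DetectionCounts) -> float:
    """Share of real attacks that were caught."""
    attacks = c.true_positives + c.false_negatives
    return c.true_positives / attacks if attacks else 0.0


def false_positive_rate(c: DetectionCounts) -> float:
    """Share of legitimate prompts that were flagged as malicious."""
    benign = c.false_positives + c.true_negatives
    return c.false_positives / benign if benign else 0.0


# The article's example: 80 of 100 injection attempts detected.
# The benign counts are made up for illustration.
counts = DetectionCounts(true_positives=80, false_negatives=20,
                         false_positives=30, true_negatives=970)
print(f"TPR: {true_positive_rate(counts):.1%}")
print(f"FPR: {false_positive_rate(counts):.1%}")
```

Reporting both numbers from the same evaluation run makes the trade-off visible: tightening the guardrail to raise TPR usually pushes FPR up as well.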

3. Mean Time to Detect (MTTD) for Resource Exhaustion

Attackers can overload your LLM with massive prompts, forcing it to consume all available memory. This is called Model Denial of Service (LLM04). The target? MTTD under 1 minute. If your system takes longer than that to flag a resource-hogging query, your service is vulnerable to disruption.
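One way to compute MTTD, assuming each incident is logged with the time the abusive request started and the time your monitoring flagged it, is sketched below. The incident records are invented for illustration.

```python
from datetime import datetime, timedelta
from statistics import mean


def mean_time_to_detect(incidents):
    """Average seconds between request start and detection.

    `incidents` is a list of (started_at, detected_at) datetime pairs.
    """
    if not incidents:
        raise ValueError("need at least one incident")
    delays = [(detected - started).total_seconds()
              for started, detected in incidents]
    return mean(delays)


# Hypothetical resource-exhaustion incidents.
t0 = datetime(2025, 1, 1, 12, 0, 0)
incidents = [
    (t0, t0 + timedelta(seconds=20)),
    (t0 + timedelta(minutes=5), t0 + timedelta(minutes=5, seconds=45)),
    (t0 + timedelta(minutes=9), t0 + timedelta(minutes=10, seconds=25)),
]

mttd = mean_time_to_detect(incidents)
print(f"MTTD: {mttd:.0f}s, under 1-minute target: {mttd < 60}")
```

A mean can hide a single slow detection (the third incident here took 85 seconds), so it may be worth tracking the worst case alongside it.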

4. SQL Conversion Accuracy

When your LLM helps analysts write SQL queries for investigations, how accurate is it? One company tracked this by comparing the model’s generated queries against human-written "gold standard" queries. They used Levenshtein distance and LCS (Longest Common Subsequence) to measure similarity. Their goal: 90%+ accuracy. When they hit that, investigation times dropped by 41%.
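The article does not say how that company normalized or combined the two measures, so the following is only a sketch under stated assumptions: queries are lowercased and whitespace-collapsed, Levenshtein distance is computed over characters, and LCS is computed over whitespace-separated tokens. All function names are illustrative.

```python
def levenshtein(a, b):
    """Edit distance between two sequences (insert, delete, substitute)."""
    prev = list(range(len(b) + 1))
    for i, x in enumerate(a, 1):
        curr = [i]
        for j, y in enumerate(b, 1):
            cost = 0 if x == y else 1
            curr.append(min(prev[j] + 1,          # deletion
                            curr[j - 1] + 1,      # insertion
                            prev[j - 1] + cost))  # substitution
        prev = curr
    return prev[-1]


def lcs_length(a, b):
    """Length of the longest common subsequence of two sequences."""
    prev = [0] * (len(b) + 1)
    for x in a:
        curr = [0]
        for j, y in enumerate(b, 1):
            curr.append(prev[j - 1] + 1 if x == y
                        else max(prev[j], curr[j - 1]))
        prev = curr
    return prev[-1]


def normalize(sql):
    """Assumed normalization: lowercase and collapse whitespace."""
    return " ".join(sql.lower().split())


def edit_similarity(generated, gold):
    """1.0 means identical after normalization; 0.0 means nothing shared."""
    g, r = normalize(generated), normalize(gold)
    longest = max(len(g), len(r))
    return 1.0 if longest == 0 else 1 - levenshtein(g, r) / longest


def lcs_token_similarity(generated, gold):
    """Share of the longer query's tokens that appear in the same order."""
    g, r = normalize(generated).split(), normalize(gold).split()
    longest = max(len(g), len(r))
    return 1.0 if longest == 0 else lcs_length(g, r) / longest


gold = "SELECT email FROM users WHERE last_login < '2024-01-01'"
generated = "select email from users where last_login <= '2024-01-01'"
print(round(edit_similarity(generated, gold), 3))
print(round(lcs_token_similarity(generated, gold), 3))
```

String similarity is only a proxy: two queries can look nearly identical yet return different rows, as the `<` versus `<=` difference above shows, so scores like these are best paired with executing both queries against test data.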

5. Safety, Groundedness, Coherence, Fluency

Google Cloud’s four core quality metrics are now an industry baseline for production LLMs:

- Safety: Scored 0-100. How likely is the model to generate harmful, biased, or illegal content?

- Groundedness: Percentage of responses factually supported by provided context. Below 85%? You’re risking misinformation.

- Coherence: Rated 1-5. Does the output make logical sense? A score below 3.5 means users can’t trust the flow.

- Fluency: Grammar and syntax errors per 100 words. Aim for fewer than 2 errors.
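The thresholds in the list above can be turned into a simple check. This sketch assumes the scores have already been produced by whatever evaluation tool you use; it only shows the threshold logic and the per-100-words normalization for fluency. No Safety cutoff is included because the text above does not give one. Function names are hypothetical.

```python
def fluency_errors_per_100_words(error_count, word_count):
    """Normalize a raw grammar/syntax error count to errors per 100 words."""
    if word_count <= 0:
        raise ValueError("word_count must be positive")
    return 100 * error_count / word_count


def quality_flags(groundedness_pct, coherence, fluency_errors):
    """Return the names of metrics that miss the thresholds listed above."""
    flags = []
    if groundedness_pct < 85:   # below 85% risks misinformation
        flags.append("groundedness")
    if coherence < 3.5:         # rated on a 1-5 scale
        flags.append("coherence")
    if fluency_errors >= 2:     # aim for fewer than 2 per 100 words
        flags.append("fluency")
    return flags


errors = fluency_errors_per_100_words(error_count=3, word_count=250)
print(quality_flags(groundedness_pct=82.0, coherence=4.1,
                    fluency_errors=errors))
```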

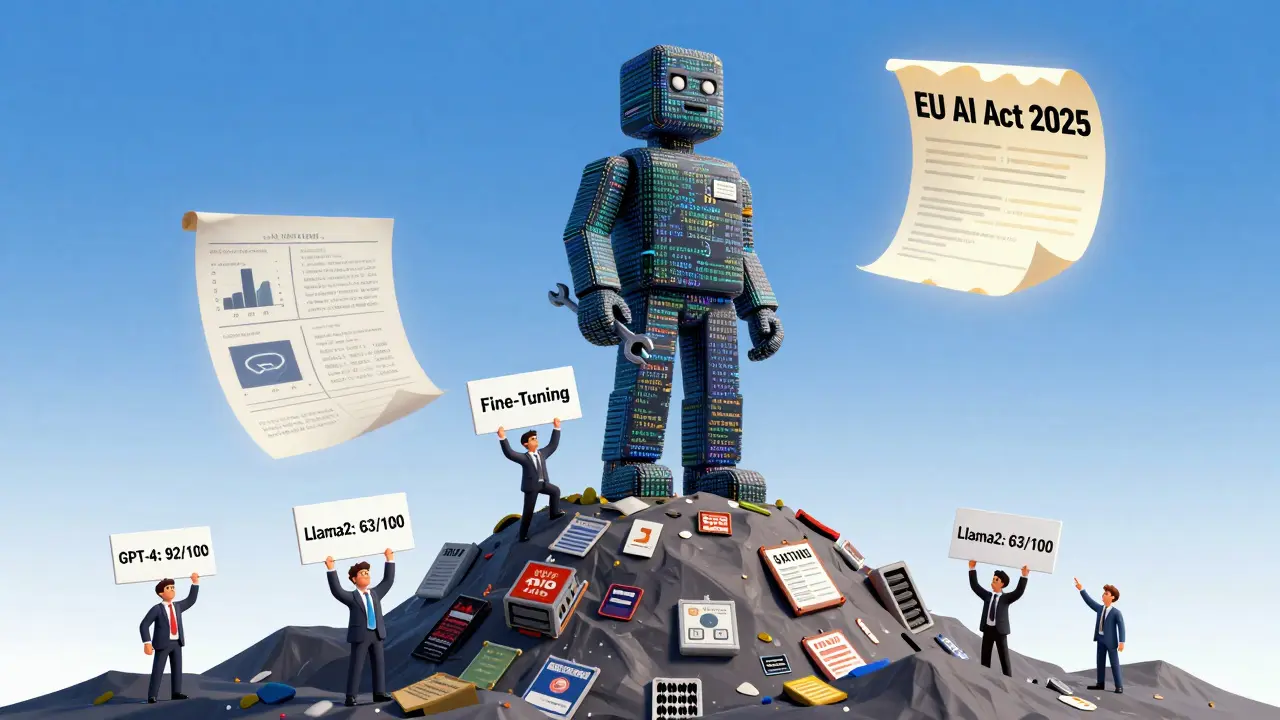

How Different Models Stack Up

Not all LLMs are created equal when it comes to security. Benchmarks from CyberSecEval 2 and SEvenLLM-Bench show clear winners and losers.

| Model | Security Capability Score (/100) | Security Knowledge Advantage | Code Generation Accuracy |
|---|---|---|---|
| GPT-4 | 92 | +14% over open-source | 88% |
| Claude 3 | 89 | +12% over open-source | 86% |
| CodeLlama-34B-Instruct | 85 | +10% over general models | 91% |
| Llama2-7B | 63 | Baseline | 61% |
| SEvenLLM (fine-tuned) | 83.7 | +7.5% over base models | 83.7% |

Open-source models like Llama2-7B are cheaper but significantly less secure. Fine-tuning helps: SEvenLLM, a cybersecurity-specialized model, outperformed its base version by 7.5%. But even the best open-source models lag behind GPT-4 and Claude in detecting advanced attacks.

Common Implementation Mistakes

Many teams fail not because the tools are bad, but because they set up the wrong KPIs.

- Mistake 1: Applying customer service chatbot KPIs to security tools. A chatbot needs high fluency. A security guardrail needs high detection. Mixing them leads to false confidence.

- Mistake 2: Relying only on synthetic benchmarks. Real attackers don’t use textbook prompts. OWASP warns that lab tests can create a false sense of security. Always test with real-world attack patterns.

- Mistake 3: Ignoring latency. If your guardrail takes more than 100ms to respond, users notice. Fiddler AI found that delays beyond this threshold cause abandonment and frustration, even if the system is secure.

- Mistake 4: Not updating KPIs. Threats evolve. MIT’s AI Security Lab found detection rates drop 30-45% when tested against new, unseen attack vectors. Your KPIs need quarterly reviews.

Who Needs These KPIs-and How to Start

Adoption is surging. According to Forrester, 68% of Fortune 500 companies now track LLM security KPIs. Financial services lead at 82% adoption, mainly because of strict PII/PHI regulations. Healthcare follows at 76%. Manufacturing focuses on secure code generation.

Here’s how to begin:

- Start with Google’s four core metrics: Safety, Groundedness, Coherence, Fluency. These are easy to measure and give you immediate visibility.

- Map to OWASP Top 10: Identify which threats apply to your use case. Is prompt injection a risk? Then track TPR for jailbreaks.

- Set thresholds: 95% TPR, 5% FPR, 1-minute MTTD. Don’t guess. Use industry benchmarks.

- Integrate with your SIEM: 68% of teams struggle here. Use APIs from vendors like Fiddler AI or Censify to push KPI data into your existing dashboards.

- Train your team: Only 32% of organizations have AI security specialists. Upskill your SOC analysts. It takes 6-8 weeks to get them up to speed.

Initial setup takes 80-120 hours for a medium-sized company. But the payoff? Fewer breaches, faster investigations, and regulatory compliance.
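To make the threshold and SIEM steps concrete, here is a sketch that checks a KPI snapshot against the 95% TPR, 5% FPR, and 1-minute MTTD targets and serializes the result as a JSON event. The event field names and the `source` value are hypothetical; a real integration would follow your SIEM's ingestion schema and your vendor's documented API, neither of which is shown here.

```python
import json
from datetime import datetime, timezone

# (direction, limit): "min" means the value must be at least the limit,
# "max" means the value must stay strictly below it.
THRESHOLDS = {
    "tpr": ("min", 0.95),
    "fpr": ("max", 0.05),
    "mttd_seconds": ("max", 60.0),
}


def evaluate(kpis):
    """Return the names of KPIs that miss their target."""
    breaches = []
    for name, (direction, limit) in THRESHOLDS.items():
        value = kpis[name]
        if direction == "min" and value < limit:
            breaches.append(name)
        elif direction == "max" and value >= limit:
            breaches.append(name)
    return breaches


def to_siem_event(kpis, breaches, source="llm-guardrail"):
    """Serialize a KPI snapshot as a JSON string (hypothetical schema)."""
    return json.dumps({
        "timestamp": datetime.now(timezone.utc).isoformat(),
        "source": source,
        "kpis": kpis,
        "breaches": breaches,
        "severity": "high" if breaches else "info",
    }, sort_keys=True)


snapshot = {"tpr": 0.97, "fpr": 0.08, "mttd_seconds": 42.0}
print(to_siem_event(snapshot, evaluate(snapshot)))
```

Keeping the thresholds in one table makes the quarterly review a data change rather than a code change.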

The Future: Automation and Regulation

By 2026, Gartner predicts 75% of enterprises will use AI-driven systems that automatically adjust KPI thresholds based on live threat feeds. That’s not science fiction. It’s coming fast.

Regulation is accelerating this. The EU AI Act requires providers to monitor high-risk AI systems after deployment and to meet accuracy, robustness, and cybersecurity requirements. NIST’s AI Risk Management Framework is voluntary, but it calls for documented, measurable metrics. For systems covered by the EU AI Act, non-compliance isn’t just risky; it’s illegal.

But there’s a warning: KPIs can be gamed. A model might learn to optimize for detection scores without actually becoming safer. That’s why experts stress: measure behavior, not just outputs. Combine metrics. Cross-check results. Never rely on one number alone.

Final Thought

Security for LLMs isn’t about firewalls or antivirus. It’s about understanding how language can be weaponized. The KPIs outlined here aren’t optional. They’re the new baseline for responsible AI. If you’re deploying LLMs and not tracking these metrics, you’re not securing your systems; you’re just hoping for the best. And in 2025, hoping isn’t a strategy.

What are the most critical LLM security KPIs to track first?

Start with True Positive Rate (TPR) for prompt injection detection (target: 95%), False Positive Rate (FPR) (target: under 5%), Mean Time to Detect (MTTD) for resource exhaustion (under 1 minute), and Google’s four core metrics: Safety, Groundedness, Coherence, and Fluency. These give you immediate visibility into the most common and dangerous threats without overcomplicating your setup.

Can I use the same KPIs for a customer service chatbot and a security tool?

No. A customer service chatbot prioritizes fluency and coherence because users want natural, polite replies. A security guardrail prioritizes detection accuracy and low false positives. Using the same KPIs for both will lead to missed threats or constant alert fatigue. Treat them as entirely different systems with different goals.

Why is my LLM’s detection rate dropping even though I’m using GPT-4?

GPT-4 is strong, but not immune. Detection rates drop 30-45% when tested against novel attack vectors not seen during training. Attackers evolve faster than models. You need continuous testing with new threat patterns. The OWASP LLM Security Testing Guide now includes 15 new test cases for emerging threats like model inversion and adversarial tuning.
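A simple way to watch for this is to score the same guardrail on a known-attack set and a fresh, unseen set, then compare. The sketch below assumes the drop is measured relative to the baseline rate (the figure above does not say whether it is relative or in percentage points), and the result lists are invented.

```python
def detection_rate(results):
    """`results` holds one boolean per attack prompt: True if detected."""
    if not results:
        raise ValueError("need at least one result")
    return sum(results) / len(results)


def relative_drop(baseline_rate, novel_rate):
    """Fraction of baseline detection lost on the novel set."""
    if baseline_rate <= 0:
        raise ValueError("baseline_rate must be positive")
    return (baseline_rate - novel_rate) / baseline_rate


# Hypothetical evaluation runs of 100 attack prompts each.
known = [True] * 96 + [False] * 4
novel = [True] * 60 + [False] * 40

drop = relative_drop(detection_rate(known), detection_rate(novel))
print(f"Relative drop on novel attacks: {drop:.1%}")
```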

How do I know if my guardrail is too slow?

If responses take longer than 100 milliseconds, users notice delays. Fiddler AI’s research shows that latency beyond this threshold causes frustration and abandonment-even if the system is secure. Monitor decision latency in production. If it’s over 100ms, optimize your guardrail architecture or reduce complexity.
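The 100ms figure does not specify which statistic to compare against it. As one reasonable assumption, this sketch checks the 95th percentile of guardrail decision latency using the nearest-rank method, so a fast average cannot hide a slow tail. The sample latencies are invented.

```python
import math


def percentile(values, pct):
    """Nearest-rank percentile: the smallest value with at least
    pct percent of the samples at or below it."""
    if not values:
        raise ValueError("need at least one sample")
    ordered = sorted(values)
    rank = max(1, math.ceil(pct * len(ordered) / 100))
    return ordered[rank - 1]


# Hypothetical guardrail decision latencies in milliseconds.
latencies_ms = [12, 18, 25, 31, 40, 44, 52, 61, 95, 140]

p50 = percentile(latencies_ms, 50)
p95 = percentile(latencies_ms, 95)
print(f"p50={p50}ms p95={p95}ms over budget: {p95 > 100}")
```

Here the median looks healthy while the tail exceeds the budget, which is the case a mean-only dashboard would miss.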

Do I need a data scientist to implement these KPIs?

Not necessarily. You need someone who understands both security and AI. Many SOC analysts can learn the basics in 6-8 weeks with proper training. But if you’re building custom evaluation scripts (like Levenshtein distance checks or SQL accuracy metrics), you’ll need a data scientist to maintain them. Start with vendor tools before building in-house.

Is it worth fine-tuning an open-source LLM for security?

Yes, if you have the data. SEvenLLM, a fine-tuned model, achieved 83.7% accuracy on cybersecurity tasks, beating base models by over 7%. But fine-tuning requires hundreds of labeled examples of attacks and safe responses. If you don’t have that data, it’s better to use a proven commercial model like GPT-4 or Claude and focus on guardrails.

What happens if I ignore LLM security KPIs?

You risk data leaks, regulatory fines, and reputational damage. In 2024, a healthcare provider was fined $2.3 million after an LLM exposed patient records via a simple prompt injection. The EU AI Act imposes monitoring obligations on high-risk AI systems, and NIST’s voluntary AI Risk Management Framework calls for documented metrics. Ignoring them isn’t just risky; in regulated contexts it’s becoming illegal.

Susannah Greenwood

I'm a technical writer and AI content strategist based in Asheville, where I translate complex machine learning research into clear, useful stories for product teams and curious readers. I also consult on responsible AI guidelines and produce a weekly newsletter on practical AI workflows.
