Legal Counsel Playbook for Generative AI: Priorities, Checklists, and Training

A legal counsel playbook for generative AI turns institutional knowledge into automated workflows that cut contract review time in half. Learn the priorities, checklists, and training steps to implement it safely and effectively.

Vibe Coding vs Traditional Programming: Key Differences Every Developer Needs to Know

Vibe coding lets anyone build software with natural language, while traditional programming demands deep technical skill. Learn when to use each, and why the best teams use both.

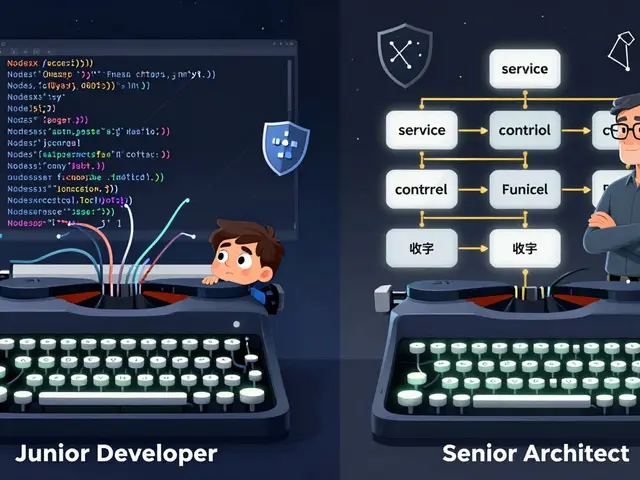

Role Assignment in Vibe Coding: How Senior Architect and Junior Developer Prompts Change Code Output

Role assignment in vibe coding transforms AI code generation by specifying whether the AI should act as a senior architect or junior developer. Senior prompts deliver secure, production-ready code with better architecture, while junior prompts excel at teaching fundamentals. This technique cuts review time by up to 40% and is now used by 68% of professional developers.

Life Sciences Research with Generative AI: Protein Design and Literature Reviews

Generative AI is revolutionizing life sciences by designing custom proteins from scratch and transforming how researchers review scientific literature. This technology enables function-first engineering of proteins that never existed in nature, accelerating drug discovery and gene therapy development.

Content Moderation Laws and Generative AI: Platform Duties and Safe Harbors

As of 2026, platforms face strict laws on AI-generated content. From EU rules to U.S. deepfake bans, they must label, detect, and remove harmful synthetic media while balancing safety and free expression.

Threat Modeling for Large Language Model Integrations in Enterprise Apps

Threat modeling for LLM integrations in enterprise apps is no longer optional. Learn the top five real-world risks (prompt injection, data poisoning, model theft, supply chain flaws, and insecure outputs) and how tools like AWS Threat Designer are making security practical for development teams.

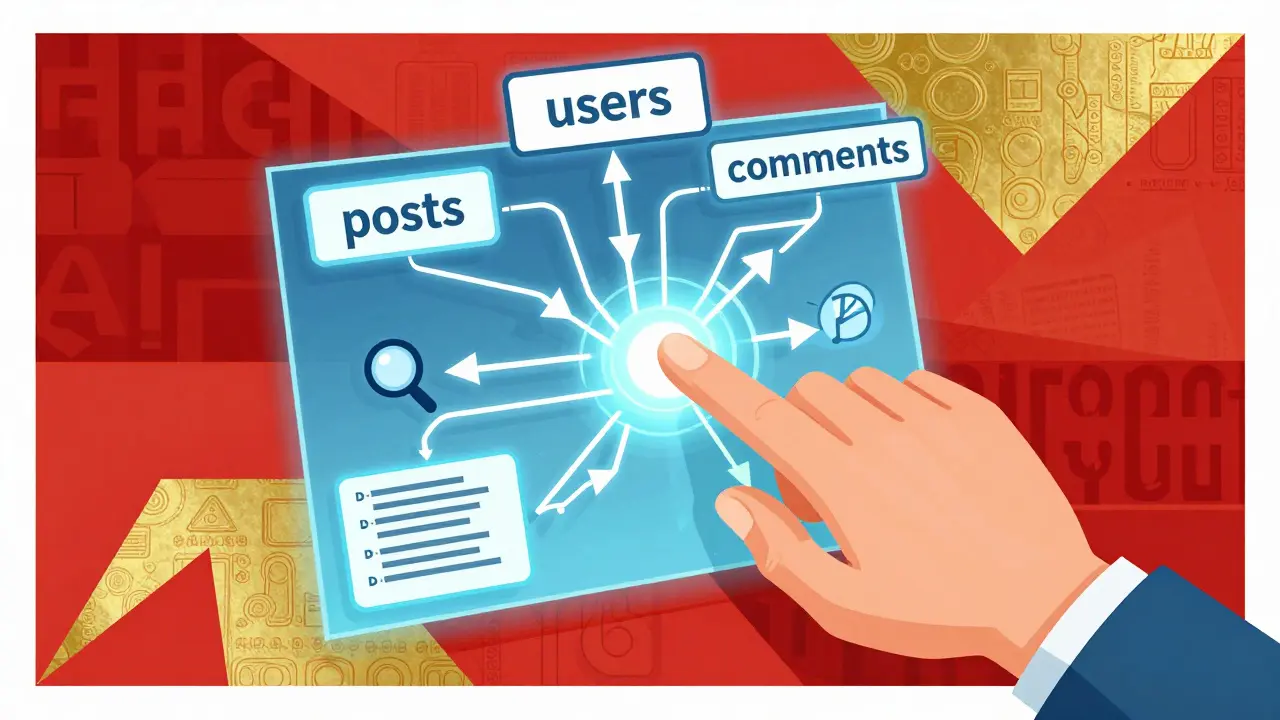

Database Schema Design with AI: Validating Models and Migrations

AI is transforming database schema design by generating production-ready models from plain language, validating structures for integrity, and auto-generating safe migrations. Learn how it works and why human oversight still matters.

How Autoregressive Generation Works in Large Language Models: Step-by-Step Token Production

Autoregressive generation powers major LLMs like GPT-4 and Claude by predicting text one token at a time. Learn how this step-by-step process works, why it’s dominant, and its key limitations.

Building AI Chatbots and Assistants with Vibe Coding and Retrieval Systems

Learn how vibe coding and retrieval systems let anyone build AI chatbots without writing code, and why security, debugging, and enterprise readiness still require human oversight.

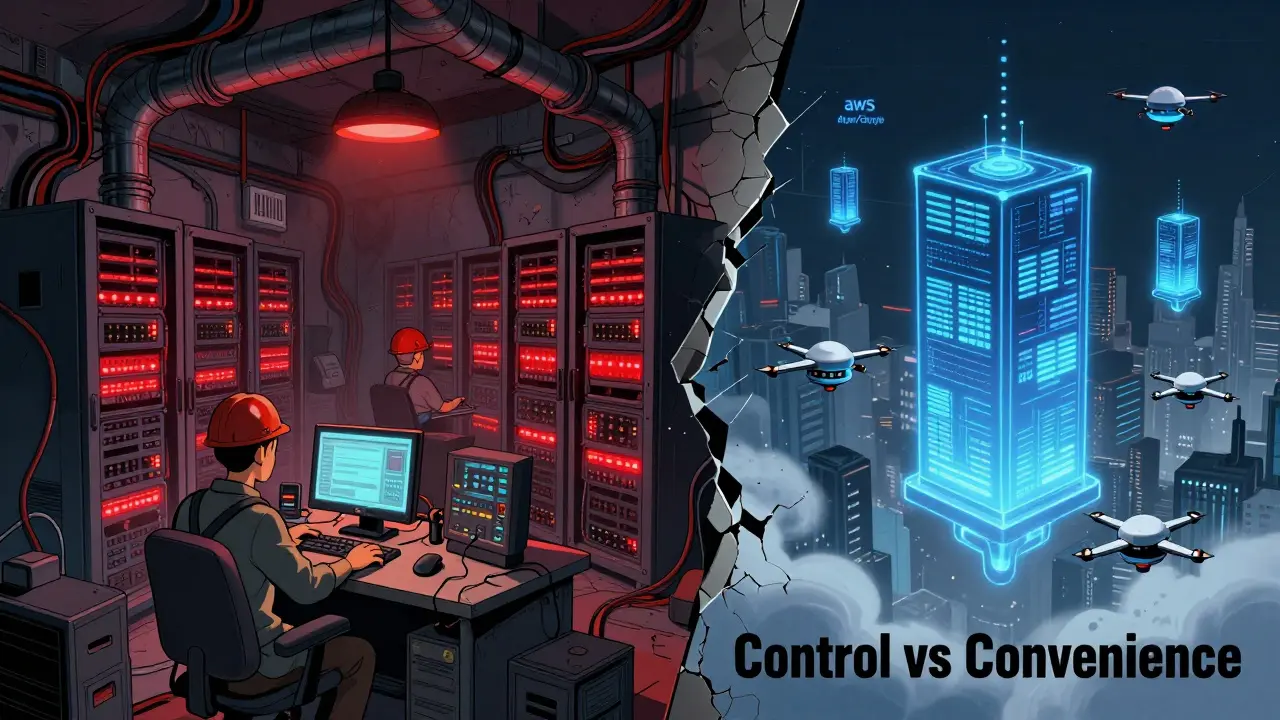

On-Prem vs Cloud: Enterprise Trade-Offs and Controls for Modern Coding

Choosing between on-prem and cloud for enterprise coding isn't about trends; it's about control, cost, and compliance. Learn the real trade-offs that affect deployment speed, security, and long-term scalability.

Next-Generation Generative AI Hardware: Accelerators, Memory, and Networking in 2026

In 2026, generative AI runs on next-gen accelerators, HBM4 memory, and Ethernet-based networking. NVIDIA, AMD, Microsoft, and Qualcomm are all pushing new silicon that’s reshaping how AI models train and infer.

Self-Supervised Learning in NLP: How Large Language Models Learn Without Labels

Self-supervised learning lets AI models learn language by predicting missing or upcoming words in text, with no human labels needed. This technique powers GPT, BERT, and other modern large language models.