Security Risks in LLM Agents: Injection, Escalation, and Isolation

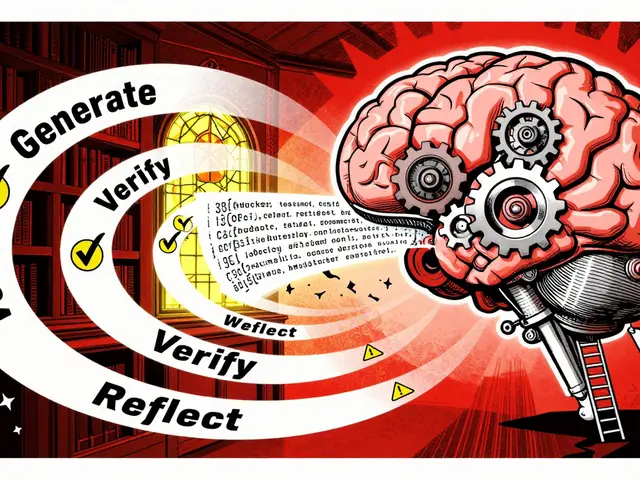

LLM agents can access systems, execute code, and make decisions autonomously, which makes them dangerous when left unsecured. Learn how prompt injection, privilege escalation, and isolation failures lead to breaches, and what actually works to stop them.