Few-Shot Prompting Patterns That Improve Accuracy in Large Language Models

Ever typed a question into an AI chatbot and got a response that sounded right but was completely wrong? You’re not alone. Even advanced models like GPT-4 or Claude 3 can stumble on complex tasks, such as extracting medical codes, formatting JSON correctly, or solving multi-step math problems, if you just ask them outright. That’s where few-shot prompting comes in. It’s not magic. It’s not fine-tuning. It’s simply giving the model a few clear examples before asking it to do the real job. And it works, often dramatically.

Why Zero-Shot Prompting Falls Short

Zero-shot prompting means asking the model to do something with no examples. Just a raw instruction: "Classify this email as spam or not spam." Simple? Yes. Reliable? Not always. Models like GPT-3.5 or Gemini 1.5 can handle basic queries this way. But when the task gets messy (say, identifying drug interactions from clinical notes or converting free-form patient summaries into structured ICD-10 codes), the accuracy drops. Studies from Stanford and PMC show that zero-shot accuracy on complex NLP tasks often hovers between 65% and 72%. That’s acceptable for casual use, but not for healthcare, legal, or financial applications where mistakes cost money or lives. The problem isn’t the model’s knowledge. It’s the lack of context. Without seeing how you want the output shaped, the model guesses. And guesses can be dangerously wrong.

What Few-Shot Prompting Actually Does

Few-shot prompting gives the model 2 to 8 examples of input-output pairs right inside the prompt. Think of it like showing a new employee how to fill out a form: not just telling them what the form is for, but walking them through three completed versions first. For example:

- Input: Patient reports chest pain after jogging. Output: R07.9

- Input: Headache, no trauma, duration 2 days. Output: G44.9

- Input: Nausea and dizziness following chemotherapy. Output: R11.10

- Input: Fatigue and joint swelling after starting metformin. Output: ?
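The list above can be assembled into a single prompt string programmatically. The following is a minimal sketch, not a definitive implementation: the function name `build_few_shot_prompt` and the `Input:`/`Output:` layout are assumptions chosen to mirror the example, and the codes are copied from the list as written, not validated.

```python
from typing import List, Tuple


def build_few_shot_prompt(examples: List[Tuple[str, str]], query: str) -> str:
    """Render input-output pairs, then the unanswered query (hypothetical helper)."""
    lines = []
    for text, label in examples:
        lines.append(f"Input: {text}")
        lines.append(f"Output: {label}")
        lines.append("")  # blank line between pairs
    # The real task goes last, with the output left open for the model to fill in.
    lines.append(f"Input: {query}")
    lines.append("Output:")
    return "\n".join(lines)


# Pairs copied from the article's example; not checked against a coding manual.
EXAMPLES = [
    ("Patient reports chest pain after jogging.", "R07.9"),
    ("Headache, no trauma, duration 2 days.", "G44.9"),
    ("Nausea and dizziness following chemotherapy.", "R11.10"),
]

prompt = build_few_shot_prompt(
    EXAMPLES, "Fatigue and joint swelling after starting metformin."
)
print(prompt)
```

The resulting string is what you would send to the model; the final `Output:` line is left empty so the model completes it in the same shape as the examples.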

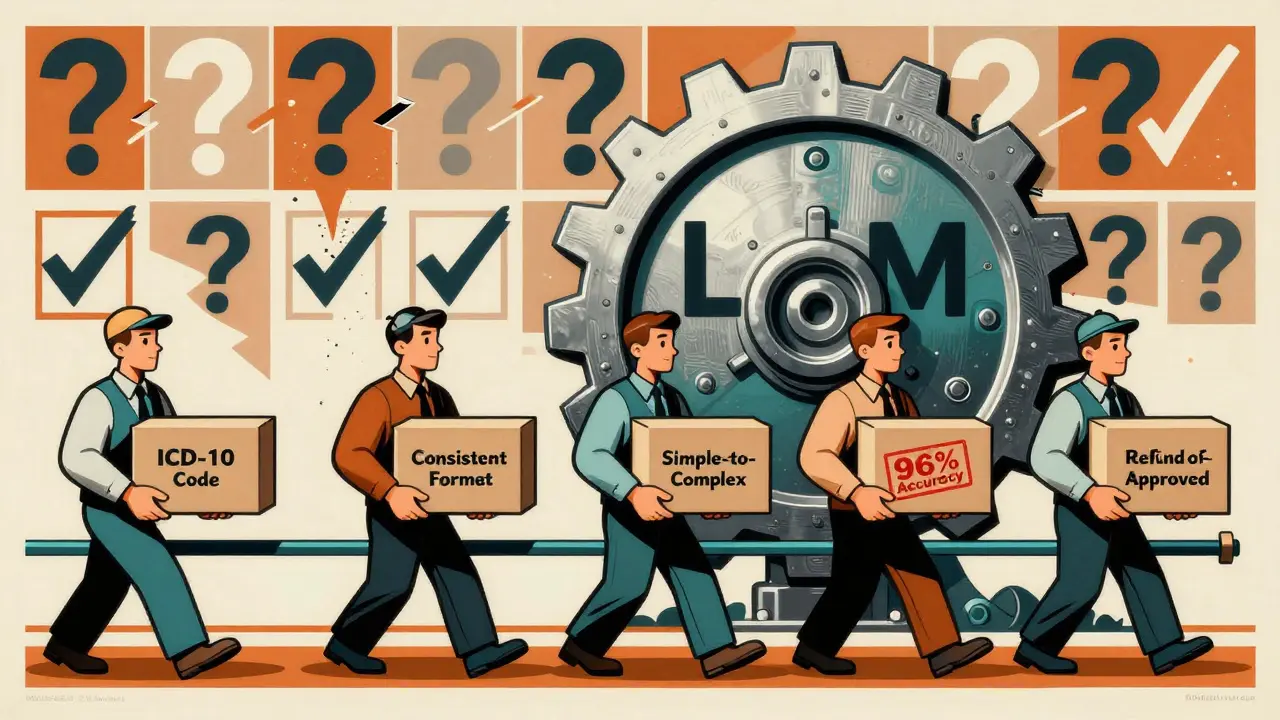

The 5 Best Few-Shot Patterns

Not all examples are created equal. The difference between a 15% improvement and a 40% one often comes down to how you structure those examples. Here are five proven patterns:

1. Start and End with Strong Examples

Models don’t treat all examples the same. They remember the first and last ones best. This is called the “primacy and recency effect.” Put your clearest, most representative examples at the beginning and end. If you’re teaching the model to write customer service replies, don’t bury your best template in the middle.

2. Use Consistent Formatting

Mixing up capitalization, punctuation, or structure confuses the model. If one example uses “Output:” and another uses “Answer:”, the model doesn’t know which to follow. Stick to one format across all examples. Use delimiters like --- or ### to separate input-output pairs clearly.
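A quick way to catch mixed labels before they reach the model is to lint the example block. The sketch below assumes a specific convention that is not prescribed by any library: pairs separated by `###`, each pair exactly two lines starting with `Input: ` and `Output: `. The function name `find_format_problems` is hypothetical.

```python
from typing import List

DELIMITER = "###"  # assumed separator between input-output pairs


def find_format_problems(block: str) -> List[str]:
    """Return a list of human-readable formatting problems (empty if none)."""
    problems = []
    examples = [part.strip() for part in block.split(DELIMITER) if part.strip()]
    for i, example in enumerate(examples, start=1):
        lines = example.splitlines()
        if len(lines) != 2:
            problems.append(f"example {i}: expected 2 lines, found {len(lines)}")
            continue
        if not lines[0].startswith("Input: "):
            problems.append(f"example {i}: first line should start with 'Input: '")
        if not lines[1].startswith("Output: "):
            problems.append(f"example {i}: second line should start with 'Output: '")
    return problems


good_block = (
    "Input: Great product!\nOutput: positive\n"
    "###\n"
    "Input: Broke in a day.\nOutput: negative"
)
# Same content, but the second pair switches to 'Answer:'.
bad_block = good_block.replace("Output: negative", "Answer: negative")

print(find_format_problems(good_block))
print(find_format_problems(bad_block))
```

Running a check like this on every prompt revision keeps a stray “Answer:” from slipping in when several people edit the same example set.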

3. Progress from Simple to Complex

Don’t jump from “Classify this as positive or negative” to “Analyze sentiment across three paragraphs with sarcasm detection.” Build up. Start with easy cases, then introduce complexity. This helps the model gradually adapt its internal reasoning.

4. Add Chain-of-Thought Steps

This is where few-shot gets even more powerful. Instead of just showing input → output, show the reasoning too:

- Input: If a shirt costs $20 and is 25% off, what’s the final price?

- Output: First, calculate 25% of $20: 0.25 × 20 = $5. Then subtract: $20 - $5 = $15. Final price: $15.
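One practical consequence of chain-of-thought examples is that the reply now contains reasoning plus an answer, so the answer has to be pulled out afterward. The sketch below reuses the worked example above as the demonstration and assumes the model will imitate its closing “Final price: $…” phrasing. The helper names and the sample reply are hypothetical, and the reply is written by hand, not produced by a model.

```python
import re
from typing import Optional

# The worked example from the article, used as the single demonstration.
COT_EXAMPLE = (
    "Input: If a shirt costs $20 and is 25% off, what's the final price?\n"
    "Output: First, calculate 25% of $20: 0.25 × 20 = $5. "
    "Then subtract: $20 - $5 = $15. Final price: $15."
)


def build_cot_prompt(question: str) -> str:
    """Place the reasoning demonstration before the new question."""
    return f"{COT_EXAMPLE}\n\nInput: {question}\nOutput:"


def extract_final_price(response: str) -> Optional[float]:
    """Find the 'Final price: $N' marker; return None if the model omitted it."""
    match = re.search(r"Final price:\s*\$(\d+(?:\.\d+)?)", response)
    return float(match.group(1)) if match else None


cot_prompt = build_cot_prompt("If a book costs $50 and is 10% off, what's the final price?")

# Hand-written stand-in for a model reply, for illustration only.
sample_response = (
    "First, calculate 10% of $50: 0.10 × 50 = $5. "
    "Then subtract: $50 - $5 = $45. Final price: $45."
)
print(extract_final_price(sample_response))  # 45.0
```

Returning `None` when the marker is missing makes it easy to count how often the model drifts from the demonstrated format.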

5. Use Ensemble Prompting

This advanced trick, used in clinical AI systems, runs the same task through multiple few-shot prompts and picks the most common answer. For example:

- Prompt 1: Uses medical jargon examples

- Prompt 2: Uses layperson examples

- Prompt 3: Uses structured templates
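The voting step can be sketched in a few lines. This is an illustration under stated assumptions, not how any particular clinical system does it: `ask_model` stands for whatever function sends a prompt to your model, and `fake_model` below is a stub with invented canned answers so the example runs offline.

```python
from collections import Counter
from typing import Callable, List


def ensemble_answer(prompts: List[str], ask_model: Callable[[str], str]) -> str:
    """Send every prompt variant to the model and return the most common answer."""
    answers = [ask_model(p).strip() for p in prompts]
    # most_common(1) returns [(answer, count)]; ties go to the first answer seen.
    winner, _count = Counter(answers).most_common(1)[0]
    return winner


def fake_model(prompt: str) -> str:
    """Stub standing in for a real model call; the answers are invented."""
    canned = {"jargon": "R07.9", "layperson": "R07.9", "template": "R07.89"}
    for key, answer in canned.items():
        if key in prompt:
            return answer
    return "unknown"


prompt_variants = [
    "[jargon examples] Input: chest pain after jogging\nOutput:",
    "[layperson examples] Input: chest pain after jogging\nOutput:",
    "[template examples] Input: chest pain after jogging\nOutput:",
]

result = ensemble_answer(prompt_variants, fake_model)
print(result)  # R07.9
```

With three prompts, two agreeing answers are enough to win. Using an odd number of variants reduces ties, though ties remain possible if every prompt disagrees.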

When Few-Shot Doesn’t Work (And What to Do Instead)

Few-shot prompting isn’t a silver bullet. It fails in three common scenarios:

1. Real-Time Data Needed

If you need stock prices, weather updates, or live sports scores, few-shot won’t help. The model’s knowledge is frozen at its training cutoff (usually 2023-2024). For this, you need Retrieval-Augmented Generation (RAG), which pulls live data from databases or APIs.

2. Too Many Examples Required

Some tasks need hundreds of examples to learn properly. Few-shot is designed for low-data scenarios. If you’re training a model to recognize 500 types of manufacturing defects, you’re better off fine-tuning. Few-shot maxes out around 8-10 examples for most models. More than that, and you risk hitting context window limits.

3. Poor Example Quality

Bad examples hurt more than no examples. A 2024 PMC study found that misleading or inconsistent examples could drop accuracy by up to 12%. If you give the model an example where “high fever” maps to “R50.9” but then another where it maps to “A41.9,” the model gets confused. Always validate your examples with domain experts.

How to Test and Refine Your Few-Shot Prompts

You can’t just write a prompt and hope it works. You need to test it. Here’s a simple workflow:

- Start with zero-shot. Record accuracy on a test set of 20-50 samples.

- Add two high-quality examples. Test again. Did accuracy jump?

- Try adding a third. Does it help or hurt?

- Swap out one example. Does performance change?

- Try chain-of-thought. Does reasoning improve?

- Use ensemble prompting. Does voting boost results?
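The workflow above amounts to scoring several prompt variants against the same labeled test set. The following is a minimal sketch of that loop. All names are hypothetical, and `stub_model` is an artificial stand-in rigged to behave differently with and without examples, so the printed numbers illustrate the mechanics only and say nothing about real model accuracy.

```python
from typing import Callable, Dict, List, Tuple

TestSet = List[Tuple[str, str]]  # (input text, expected label)


def accuracy(build_prompt: Callable[[str], str],
             ask_model: Callable[[str], str],
             test_set: TestSet) -> float:
    """Fraction of test items where the model's reply matches the label exactly."""
    correct = sum(
        ask_model(build_prompt(text)).strip() == expected
        for text, expected in test_set
    )
    return correct / len(test_set)


def compare_variants(variants: Dict[str, Callable[[str], str]],
                     ask_model: Callable[[str], str],
                     test_set: TestSet) -> Dict[str, float]:
    """Score every prompt variant on the same test set."""
    return {name: accuracy(build, ask_model, test_set) for name, build in variants.items()}


INSTRUCTION = "Classify this email as spam or not spam.\n"
SHOTS = "Input: Claim your free gift\nOutput: spam\n\nInput: Lunch tomorrow?\nOutput: not spam\n\n"


def zero_shot(text: str) -> str:
    return f"{INSTRUCTION}Input: {text}\nOutput:"


def few_shot(text: str) -> str:
    return f"{INSTRUCTION}{SHOTS}Input: {text}\nOutput:"


def stub_model(prompt: str) -> str:
    """Artificial stand-in: only flags spam when the prompt contains examples."""
    has_examples = "Output: spam" in prompt
    query = prompt.rsplit("Input: ", 1)[1]
    return "spam" if has_examples and "free" in query.lower() else "not spam"


test_set = [("Win a free prize now", "spam"), ("Meeting moved to 3pm", "not spam")]
scores = compare_variants({"zero_shot": zero_shot, "few_shot": few_shot}, stub_model, test_set)
print(scores)  # {'zero_shot': 0.5, 'few_shot': 1.0}
```

In practice you would swap `stub_model` for a real model call and use the 20-50 sample test set the workflow recommends. Keeping the test set fixed across variants is what makes the comparison meaningful.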

Industry Adoption and Real-World Impact

This isn’t just lab talk. Few-shot prompting is already in production:

- Healthcare: 32% of clinical NLP systems use it. One hospital system cut misdiagnosis flags by 41% using few-shot prompts to extract symptoms from doctor’s notes.

- Finance: Banks use it to classify transaction fraud patterns with only 5 labeled examples per category, saving millions in manual review hours.

- Customer Service: Companies like Zendesk and Salesforce embed few-shot templates in their AI chatbots to handle complex refund requests without human escalation.

The Future: Automation and Beyond

The next leap? Getting rid of manual example creation. Meta’s 2024 AutoPrompt system uses algorithms to automatically select the best few-shot examples from a dataset, cutting prompt engineering time by 22%. Google’s Vertex AI and Anthropic’s Claude Console now offer built-in few-shot templates. By 2026, Forrester predicts 65% of enterprise LLM apps will use optimized few-shot patterns as standard. But there’s a limit. DeepMind’s 2024 paper showed performance plateaus after 8 examples. We might be hitting a wall in in-context learning. The next breakthrough may need new model architectures, not better prompts.

Final Thought: It’s About Control, Not Just Accuracy

Few-shot prompting gives you control. You’re not just asking the AI to guess. You’re guiding it. You’re setting boundaries. You’re saying: "This is how we want it done." That’s why it’s so powerful: not because it’s smarter, but because it’s more predictable. In a world where AI hallucinations cost companies millions, that predictability is worth more than any algorithm tweak. Start with two good examples. Test. Iterate. Watch your accuracy climb.

What’s the difference between zero-shot and few-shot prompting?

Zero-shot prompting asks the model to perform a task with no examples, just a direct instruction. Few-shot prompting provides 2-8 input-output examples before the actual task to show the model the desired format and behavior. Few-shot typically improves accuracy by 15-40% on complex tasks because it gives the model clear context for how to respond.

How many examples should I use in few-shot prompting?

Most models perform best with 2 to 8 examples. Using more than 8 often doesn’t help and can fill up the context window, leaving less space for your actual task. Start with 2-3 high-quality examples and test. Add more only if accuracy improves. Some advanced techniques like ensemble prompting use multiple prompts with fewer examples each, rather than one long prompt with many examples.

Does few-shot prompting work with all large language models?

It works best with models that have 10 billion parameters or more, like GPT-3.5, GPT-4, Claude 3, and Gemini 1.5. Smaller models often don’t benefit significantly because they lack the capacity for in-context learning. The technique is compatible with autoregressive models (like GPT), encoder-decoder models (like T5), and instruction-tuned models (like Claude), but results vary based on training data and architecture.

Can few-shot prompting replace fine-tuning?

It can replace fine-tuning for many tasks, especially when you need quick deployment, low cost, or no model changes. Few-shot is cheaper and faster than fine-tuning, which can cost thousands of dollars and take days. However, fine-tuning typically achieves 8-15% higher accuracy on highly specialized tasks with large datasets. Use few-shot for rapid iteration; use fine-tuning for permanent, high-stakes applications.

Why do my few-shot prompts sometimes give inconsistent results?

Inconsistencies often come from poor example quality, mixed formatting, or the "lost-in-the-middle" effect, where models ignore examples placed in the middle of the prompt. Always use consistent structure, place key examples at the start and end, and validate examples with domain experts. Testing with small batches helps spot these issues early.

Is few-shot prompting secure for sensitive data?

It depends on how you use it. If you’re feeding patient records, financial data, or proprietary business logic into the prompt, you’re sending that data to the model provider’s servers. For sensitive data, use on-premise models, encrypted APIs, or synthetic examples that mimic real data without using actual records. Never include real PII in prompts unless you’re certain of the provider’s privacy policies.

Susannah Greenwood

I'm a technical writer and AI content strategist based in Asheville, where I translate complex machine learning research into clear, useful stories for product teams and curious readers. I also consult on responsible AI guidelines and produce a weekly newsletter on practical AI workflows.

3 Comments

Let me tell you something profound: this isn't about prompting. It's about the soul of machines learning to mimic human intention. We're not teaching AI; we're exorcising our own laziness into its circuits. Every example we feed it is a whispered prayer for it to understand us without us having to be clear. And yet... it works. Not because it's smart, but because we're desperate enough to make it so.

It's like teaching a ghost to cook by showing it five photos of pasta. The ghost doesn't know what pasta is. But it knows the shape of our hunger.

okay but like… why is everyone spelling ‘ICD-10’ wrong in the examples? it’s not ‘ICD10’ or ‘Icd-10’ it’s ICD-10. period. also ‘R07.9’? you missed the leading zero in the second example. this whole post is like a grammar apocalypse and nobody cares. how are we trusting life-or-death medical codes to people who can’t even format a decimal correctly?

also ‘G44.9’ is headache? no it’s not. that’s unspecified headache. you’re supposed to use G43 for migraine. you’re just making people worse.

What strikes me isn’t the technique; it’s the quiet desperation behind it. We’ve built these colossal models, trained on the entirety of human knowledge, and yet we still need to hold their hand like toddlers learning to tie shoes. We didn’t evolve intelligence to outsource it to machines that need hand-holding. We evolved it to understand, to reason, to feel.

But here we are. Feeding them five examples of chest pain like they’re toddlers learning colors. And we call this progress?

Maybe the real failure isn’t the model. It’s our refusal to build systems that think, instead of just mimic. We’ve created mirrors that reflect our own laziness, then praise them for seeing clearly.