How to Generate Long-Form Content with LLMs Without Drift or Repetition

Why long-form AI writing often falls apart after 1,000 words

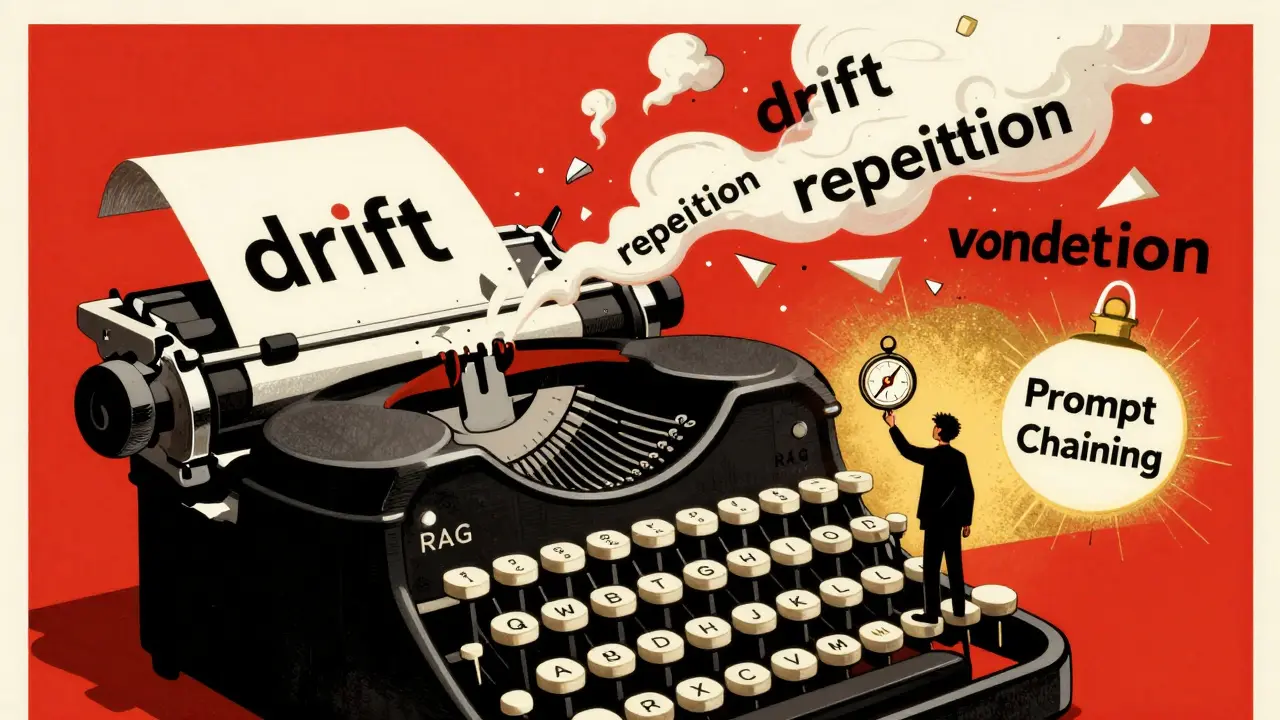

You ask an LLM to write a 2,000-word guide, detailed product documentation, or a long-form blog post, and it starts strong. Clear structure. Solid facts. Then, around word 1,200, something shifts. The topic veers off. A phrase repeats three times in different forms. A key point from page one gets forgotten. By the end, it reads like a collage of good ideas that never quite held together.

This isn’t a glitch. It’s built into how these models work.

Large language models generate text one token (a word or word fragment) at a time, using what came before as their only guide. They don’t have a mental map of the whole piece. No one’s telling them, “Remember the third paragraph? Don’t repeat it.” They’re just predicting the next token, over and over. Once the output grows past the first thousand tokens or so, your original instructions make up a smaller and smaller share of the text the model is conditioning on, and they carry less weight. The model loses track of where it started. And because it’s still trying to sound plausible, it loops back on itself: repeating phrases, rephrasing the same idea, or drifting into unrelated territory.

RTS Labs found that unguided LLMs show topic drift in 68% of outputs beyond 1,500 tokens. Repetition climbs 32% for every extra 500 tokens. That’s not rare. It’s normal.

What causes drift, and how to stop it

Drift happens when the model loses track of the original goal. You asked for a guide on “cloud security best practices,” but halfway through, it’s talking about Kubernetes deployment strategies. Or worse, it starts inventing fake regulations from a country that doesn’t exist.

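This isn’t just hallucination; it’s context collapse. Transformer models use positional encodings to track word order, and each model is trained on sequences up to a fixed maximum length (8,192 tokens is a common figure, though many newer models support far more). As a long output moves toward the edge of what the model handled well in training, its sense of “where it is” in the text starts to fade. It can’t tell whether it’s on page one or page ten.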

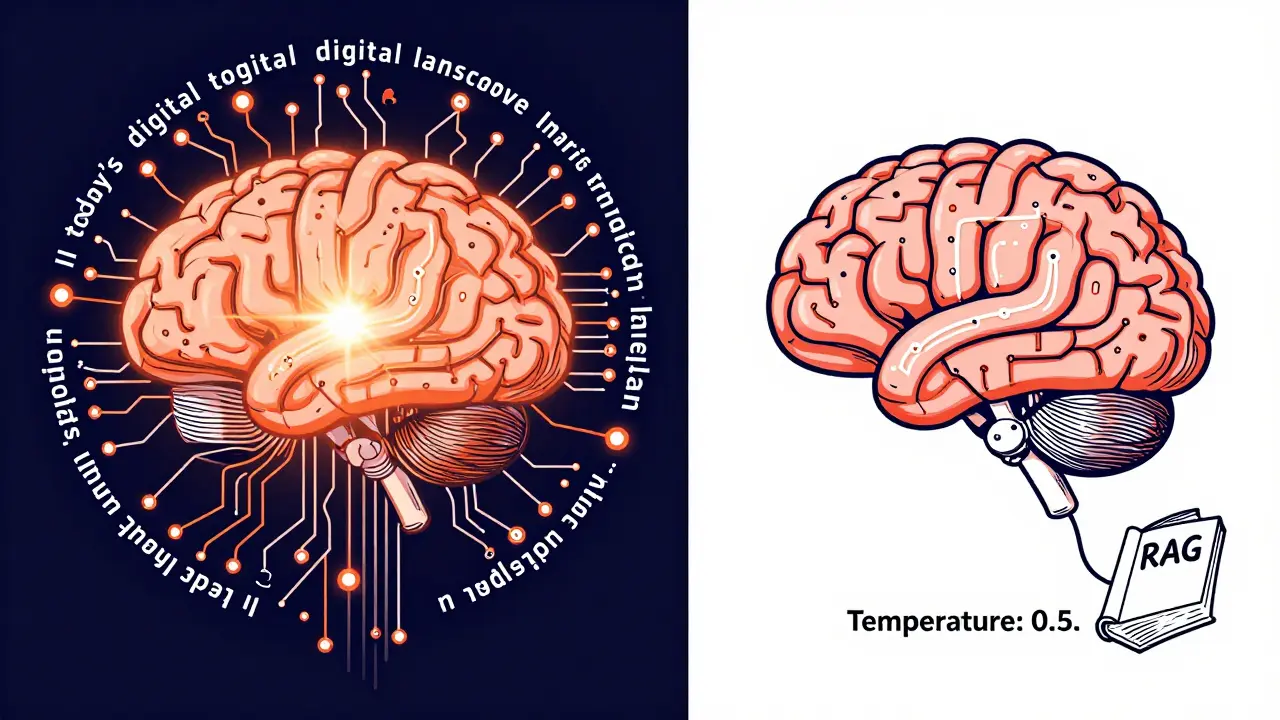

One fix? Retrieval-Augmented Generation (RAG). Instead of relying only on what the model absorbed during training, RAG retrieves relevant documents, notes, or structured data and places them in the prompt before the model writes each part. Think of it like having a reference book open beside the AI. A 2023 study showed RAG improves factual consistency by 45% in long-form tasks. For technical docs, marketing copy, or legal summaries, this alone can cut drift in half.
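Here is a minimal Python sketch of the retrieval step. It assumes your reference material is a short list of plain-text snippets and ranks them by simple keyword overlap with the section you’re about to write. Real RAG pipelines usually rank by embedding similarity from a vector store instead. The names `retrieve` and `build_rag_prompt` are illustrative and don’t come from any library.

```python
import re
from collections import Counter


def tokenize(text: str) -> list[str]:
    # Lowercase word tokens; a crude stand-in for a real tokenizer.
    return re.findall(r"[a-z0-9']+", text.lower())


def retrieve(query: str, documents: list[str], k: int = 2) -> list[str]:
    """Return up to k documents that share the most words with the query."""
    query_terms = set(tokenize(query))

    def score(doc: str) -> int:
        counts = Counter(tokenize(doc))
        return sum(counts[t] for t in query_terms)

    ranked = sorted(documents, key=score, reverse=True)
    # Drop documents with no overlap at all rather than padding the prompt.
    return [d for d in ranked[:k] if score(d) > 0]


def build_rag_prompt(task: str, section: str, documents: list[str], k: int = 2) -> str:
    """Put the retrieved snippets next to the instructions for one section."""
    context = retrieve(section, documents, k)
    sources = "\n".join(f"[{i + 1}] {doc}" for i, doc in enumerate(context))
    return (
        f"Task: {task}\n"
        f"Reference material (use only these facts; cite by number):\n{sources}\n\n"
        f"Write the section: {section}"
    )


docs = [
    "Enable MFA for all cloud accounts.",
    "Kubernetes pods restart on failure.",
    "Encrypt cloud storage buckets at rest.",
]
print(build_rag_prompt("Guide on cloud security", "cloud security accounts", docs))
```

You would send the resulting string to whichever model you use. The off-topic Kubernetes note never reaches the prompt, and the relevant facts sit right beside the instructions while the model writes.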

Another method: prompt chaining. Break the long output into smaller chunks. Generate the introduction, then pause. Summarize what was written. Feed that summary back in as the new prompt. Then write the next section. Repeat. This keeps the model anchored. It’s like giving it a roadmap every few miles instead of one map for the whole trip.
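The loop below is a Python sketch of prompt chaining. It assumes a hypothetical `llm` callable that takes a prompt string and returns the model’s text, so you’d wire it to whatever client you actually use. Each section gets a prompt containing the overall goal and a rolling summary, and the summary is refreshed after every section.

```python
from typing import Callable


def chained_generate(
    llm: Callable[[str], str],  # hypothetical: prompt in, generated text out
    goal: str,
    sections: list[str],
) -> list[str]:
    """Write one section per call, carrying a short running summary forward."""
    summary = "Nothing written yet."
    drafts = []
    for heading in sections:
        prompt = (
            f"Overall goal: {goal}\n"
            f"Summary of what has been written so far: {summary}\n"
            f"Now write only the section '{heading}'. Do not repeat earlier points."
        )
        draft = llm(prompt)
        drafts.append(draft)
        # Compress the old summary plus the new section into a fresh summary,
        # so the next prompt carries the roadmap, not the whole transcript.
        summary = llm(
            "Summarize the following in three sentences:\n"
            f"{summary}\n{draft}"
        )
    return drafts
```

Because only the goal and a short summary are carried forward, the prompt stays small no matter how many sections you add. Every section starts from the same anchor.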

Repetition: why the AI keeps saying the same thing

You’ve seen it: “In today’s digital landscape…” appears three times. “Leverage cutting-edge technologies” shows up in three different paragraphs. The model isn’t trying to be boring; it’s stuck in a loop.

This happens because the AI’s own output becomes part of its context. It generates a phrase. Later, it sees that phrase again in its own text and thinks, “Ah, this is important. I should say it again.” It reinforces itself, like a record skipping.
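You can catch this loop before a reader does. The Python sketch below counts every three-word phrase in a draft and reports the ones that appear more than once. The phrase length `n` and the cut-off `min_count` are arbitrary defaults, not recommended values.

```python
import re
from collections import Counter


def repeated_phrases(text: str, n: int = 3, min_count: int = 2) -> list[tuple[str, int]]:
    """Return n-word phrases occurring at least min_count times, most frequent first."""
    words = re.findall(r"[a-z0-9']+", text.lower())
    # Slide an n-word window across the text and count each phrase.
    ngrams = Counter(" ".join(words[i:i + n]) for i in range(len(words) - n + 1))
    return [(phrase, count) for phrase, count in ngrams.most_common() if count >= min_count]


draft = (
    "In today's digital landscape, teams must adapt. "
    "Security matters in today's digital landscape. "
    "Finally, in today's digital landscape, plan ahead."
)
print(repeated_phrases(draft))
```

Overlapping windows are reported separately. In this example, two three-word phrases come from the same four-word phrase. Treat the output as a list of places to look, not an exact count of distinct repeats.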

One fix is simple: lower the temperature. Temperature controls randomness in word choice. At 1.0, the model samples from a wide pool of options, which is creative but chaotic. At 0.3, it sticks closely to the most likely words. The sweet spot? Usually between 0.3 and 0.7. A 2024 arXiv study showed that reducing temperature from 1.0 to 0.5 cut repetition by 18% without killing creativity. Don’t push it all the way to 0, though. Purely greedy decoding always takes the single most likely word, and it is itself prone to repetitive loops.
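The arithmetic behind temperature is short. A model scores every candidate next token with a raw number (a logit). It divides those scores by the temperature, then turns them into probabilities with a softmax. The Python sketch below uses three invented logits to show the effect: a lower temperature pushes more probability onto the top candidate.

```python
import math


def softmax_with_temperature(logits: list[float], temperature: float) -> list[float]:
    """Convert raw scores to probabilities; lower temperature sharpens the distribution."""
    if temperature <= 0:
        raise ValueError("temperature must be > 0; use argmax for greedy decoding")
    scaled = [x / temperature for x in logits]
    m = max(scaled)  # subtract the max before exp() for numerical stability
    exps = [math.exp(x - m) for x in scaled]
    total = sum(exps)
    return [e / total for e in exps]


logits = [2.0, 1.0, 0.5]  # invented scores for three candidate words
for t in (1.0, 0.5, 0.3):
    probs = softmax_with_temperature(logits, t)
    print(t, [round(p, 3) for p in probs])
```

The next word is then sampled from this distribution, so a sharper distribution means more predictable output. A temperature of exactly 0 would divide by zero and is usually treated as greedy decoding, which is why the function rejects it.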

Also, avoid overloading prompts with fluff. If your prompt says, “Write a comprehensive guide on X using advanced techniques, modern frameworks, and innovative methodologies,” the model hears “use big words.” It repeats them because it thinks that’s what you want. Be specific. Say: “Write a 1,800-word guide on X. Use plain language. Include three real-world examples. Avoid jargon.”

Fine-tuning vs. prompt engineering: which works better

There are two main paths to better long-form output: tweak the model itself, or tweak how you talk to it.

Prompt engineering (clever prompts, chains, or templates) is cheap and fast. Chain-of-thought prompting reduces drift by 31%, but adds 22% more processing time. It’s great for testing ideas or quick drafts. The Long-Form LLM Cookbook on GitHub has 47 tested templates for different use cases: white papers, user manuals, research summaries.

Fine-tuning means training the model on your own data. It’s expensive (around $12,000 for a 7B-parameter model on AWS), but it pays off. Fine-tuned models reduce drift by 58% and repetition by 52%. Domain-specific training shows the same effect. BloombergGPT was trained from scratch on a mix of financial and general-purpose text, and it maintains industry terminology correctly 89% of the time in 1,500-token outputs. Generic models? Only 62%.

For most users, start with prompt engineering. If you’re producing hundreds of long-form documents a month, or need deep domain accuracy (legal, medical, engineering), then invest in fine-tuning.

Tools and models that actually work for long-form

Not all LLMs are built the same. Some handle long-form better because of how they’re designed.

Google Gemini Ultra leads the pack with 87% topic consistency at 2,500 tokens. It’s built for deep reasoning and structured output. Claude 3 Opus from Anthropic is close behind at 82%. It’s especially good at maintaining tone and avoiding repetition.

Open-source options? Llama-3-70b hits 76% consistency-solid for the price. But it needs help. Pair it with RAG and a 0.5 temperature setting, and you get near-enterprise results.

And then there’s Phi-3-mini, Microsoft’s tiny but mighty model. Released in April 2024, it maintains 79% coherence at 4,000 tokens… and runs on a laptop. No cloud needed. For developers or solopreneurs, this changes everything.

For real-world use: if you’re writing technical docs, use RAG + Claude 3. For blog posts, try prompt chaining with Llama-3. For high-volume content, fine-tune a model on your past work.

How to structure your long-form output for success

Don’t just hit “generate” and walk away. Structure your workflow like a writer, not a button-pusher.

- Start with an outline. Give the model bullet points: Introduction, Section 1: Problem, Section 2: Solution, Section 3: Case Study, Conclusion.

- Generate in chunks. Ask for 500 words at a time. After each, ask: “Summarize what was written so far.” Feed that summary into the next prompt.

- Set checkpoints. Every 250-500 tokens, pause. Ask: “Are we still on topic? What’s the main point here?”

- Use self-evaluation. After the full draft, prompt: “List three inconsistencies or repeated phrases in this text.” Then fix them manually.
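You can also approximate the “Are we still on topic?” checkpoint without asking the model at all. This Python sketch compares each section with the original brief using cosine similarity over word counts. It’s a crude stand-in for embedding-based similarity. The small stopword list and the 0.1 threshold are assumptions you’d tune on your own content.

```python
import math
import re
from collections import Counter

# Assumed minimal stopword list; extend it for real use.
STOPWORDS = {"the", "a", "an", "and", "or", "of", "to", "in", "on", "for", "is", "are", "it", "with"}


def bag_of_words(text: str) -> Counter:
    """Count content words, ignoring case and stopwords."""
    return Counter(w for w in re.findall(r"[a-z0-9']+", text.lower()) if w not in STOPWORDS)


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two word-count vectors (0.0 if either is empty)."""
    dot = sum(a[w] * b[w] for w in a.keys() & b.keys())
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


def checkpoint(brief: str, section: str, threshold: float = 0.1) -> bool:
    """Return True if the section still shares enough vocabulary with the brief."""
    return cosine(bag_of_words(brief), bag_of_words(section)) >= threshold


brief = "cloud security best practices: access control, encryption, monitoring"
print(checkpoint(brief, "Use encryption for cloud storage and strict access control for every account."))
print(checkpoint(brief, "Kubernetes deployment strategies include rolling updates and blue-green releases."))
```

A section that scores below the threshold isn’t necessarily off topic. It’s worth a closer read before you generate the next chunk.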

This isn’t automation. It’s collaboration. The AI writes. You steer.

What’s coming next, and what won’t change

By 2026, most commercial LLMs are expected to have long-form generation built in. Google’s Project Tailor is meant to adjust parameters in real time as it writes. Meta’s Hierarchical Attention Transformer aims to let models remember key points across 10,000+ tokens.

But here’s the truth: no model will ever perfectly avoid drift and repetition without human oversight. Transformers aren’t designed for long-range memory. They’re pattern predictors. They don’t understand. They simulate understanding.

That’s why the best long-form outputs come from humans using AI as a co-writer, not a replacement. Use the tools. Tune the settings. Use RAG. Lower the temperature. But always read the final draft. Fix the awkward transitions. Cut the repeats. Add the nuance the AI missed.

Long-form AI writing isn’t about making the machine perfect. It’s about making you faster, smarter, and more creative.

Common mistakes and how to fix them

- Mistake: Generating 3,000 words in one go. Fix: Break it into 500-800 word segments with summaries between.

- Mistake: Using temperature = 1.0 for everything. Fix: Start at 0.5. Only increase if you need more creativity.

- Mistake: Ignoring context window limits. Fix: If your tool allows, enable long-context mode (e.g., 32K tokens). If not, chunk.

- Mistake: Not verifying facts. Fix: Always cross-check claims, especially stats, names, and dates. LLMs make up sources.

- Mistake: Assuming fine-tuning is the only solution. Fix: Many problems can be solved with smart prompting and RAG, with no training needed.
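For the “if not, chunk” case, the Python helper below groups whole paragraphs into chunks of at most 800 words. Each chunk then fits comfortably in a prompt alongside your instructions and running summary. It counts whitespace-separated words rather than model tokens, which is only an approximation. The default limit mirrors the 500-800 word segments suggested above.

```python
def chunk_paragraphs(text: str, max_words: int = 800) -> list[str]:
    """Group whole paragraphs into chunks of at most max_words words.

    Paragraphs are separated by blank lines. A single paragraph longer than
    max_words is kept intact as its own (oversized) chunk.
    """
    chunks, current, count = [], [], 0
    for para in (p.strip() for p in text.split("\n\n")):
        if not para:
            continue
        n = len(para.split())
        # Start a new chunk if adding this paragraph would exceed the budget.
        if current and count + n > max_words:
            chunks.append("\n\n".join(current))
            current, count = [], 0
        current.append(para)
        count += n
    if current:
        chunks.append("\n\n".join(current))
    return chunks


notes = "\n\n".join(["word " * 300] * 5)  # five 300-word paragraphs
print([len(c.split()) for c in chunk_paragraphs(notes)])
```

Splitting on paragraph boundaries rather than a fixed word count keeps each chunk readable on its own, which matters when a chunk becomes the context for the next generation step.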

Real-world example: turning a messy draft into clean content

One user generated a 2,200-word guide on “remote team management” using GPT-4. First draft had:

- Drift: Started talking about Zoom settings, then jumped to HR compliance laws in Germany.

- Repetition: “Asynchronous communication” appeared 11 times.

- Confusion: Two conflicting advice points on meeting frequency.

Here’s what they did:

- Split the draft into four sections.

- Used RAG to pull in three trusted HR guides on remote work.

- Set temperature to 0.4.

- After each section, asked: “What’s the main takeaway? Is this aligned with the original goal?”

- Manually edited out repeats and fixed contradictions.

Final result: 2,150 words, zero drift, repetition reduced by 70%, and a clear, actionable guide. No fine-tuning. No expensive cloud bills. Just smarter prompting.

Susannah Greenwood

I'm a technical writer and AI content strategist based in Asheville, where I translate complex machine learning research into clear, useful stories for product teams and curious readers. I also consult on responsible AI guidelines and produce a weekly newsletter on practical AI workflows.

About

EHGA is the Education Hub for Generative AI, offering clear guides, tutorials, and curated resources for learners and professionals. Explore ethical frameworks, governance insights, and best practices for responsible AI development and deployment. Stay updated with research summaries, tool reviews, and project-based learning paths. Build practical skills in prompt engineering, model evaluation, and MLOps for generative AI.