Healthcare Applications of Large Language Models: Documentation and Triage

Doctors spend an average of 1.8 hours after each shift writing notes. That’s not just busywork; it’s burnout waiting to happen. Meanwhile, emergency rooms are flooded with patients, and triage nurses are stretched thin, trying to decide who needs help right away. Enter large language models (LLMs): AI systems trained on millions of medical records, textbooks, and clinical notes that can now draft patient summaries in seconds and flag urgent cases before a human even sees them. This isn’t science fiction. It’s happening in hospitals right now.

How LLMs Are Rewriting Clinical Documentation

Before LLMs, writing a patient note meant typing out every detail: symptoms, exam findings, differential diagnoses, treatment plans. It was slow, repetitive, and often done after hours. Now, with tools like Nuance DAX Copilot and Amazon HealthScribe, a doctor speaks to a patient, and the AI listens. It picks up on key phrases (“chest pain radiating to left arm,” “fever for three days”) and turns them into a structured, coded clinical note that can be filed in Epic or Cerner.

In a 2023 JAMA Network Open study of 1,200 patient visits, LLM-generated notes were 89% accurate, which still leaves roughly 1 in 10 notes needing correction. Physicians using these tools saved nearly 48% of their documentation time. Applied to the 1.8 hours of after-shift note writing cited above, that is about 50 minutes back per shift; for a physician who documents for around four hours across a 10-hour shift, it approaches two hours. That’s time for family, rest, or even seeing one more patient.

But it’s not perfect. Some systems hallucinate. One ER doctor on Reddit reported his AI added a medication he never prescribed, something that could’ve caused a dangerous interaction. That’s why hospitals don’t let the AI write notes alone. The workflow is simple: AI drafts, clinician reviews, edits, signs. It’s a team effort.
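One small guardrail that supports the review step can be sketched in a few lines. This is a minimal illustration, not any vendor’s method: it assumes the medications named in the AI draft and the medications actually ordered are already available as plain lists, and the function name `unsupported_medications` is hypothetical.

```python
def unsupported_medications(draft_meds, source_meds):
    """Return medications named in the AI draft that are absent from the source list.

    Assumption: both inputs are simple lists of drug names. A real system
    would need drug-name normalization (brand vs. generic, dosage forms).
    """
    known = {name.strip().lower() for name in source_meds}
    return [name for name in draft_meds if name.strip().lower() not in known]


# Hypothetical example data, not taken from any real chart.
draft = ["Lisinopril", "Metformin", "Warfarin"]
ordered = ["lisinopril", "metformin"]

flagged = unsupported_medications(draft, ordered)
print(flagged)  # ['Warfarin']
```

Anything the check flags still goes to the clinician; it only points the reviewer at the lines most likely to be invented.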

Commercial tools like DAX Copilot are polished and easy to use, but they lock you into specific hardware. Open-source medical models such as Meditron give you more control (you can tweak them for your hospital’s specific workflows), but you need engineers to make them work. Med-PaLM 2, which is sometimes listed alongside them, is a proprietary Google model rather than an open-source one. Most hospitals choose commercial tools because they don’t have the staff to build their own.

Triage: AI as the First Responder

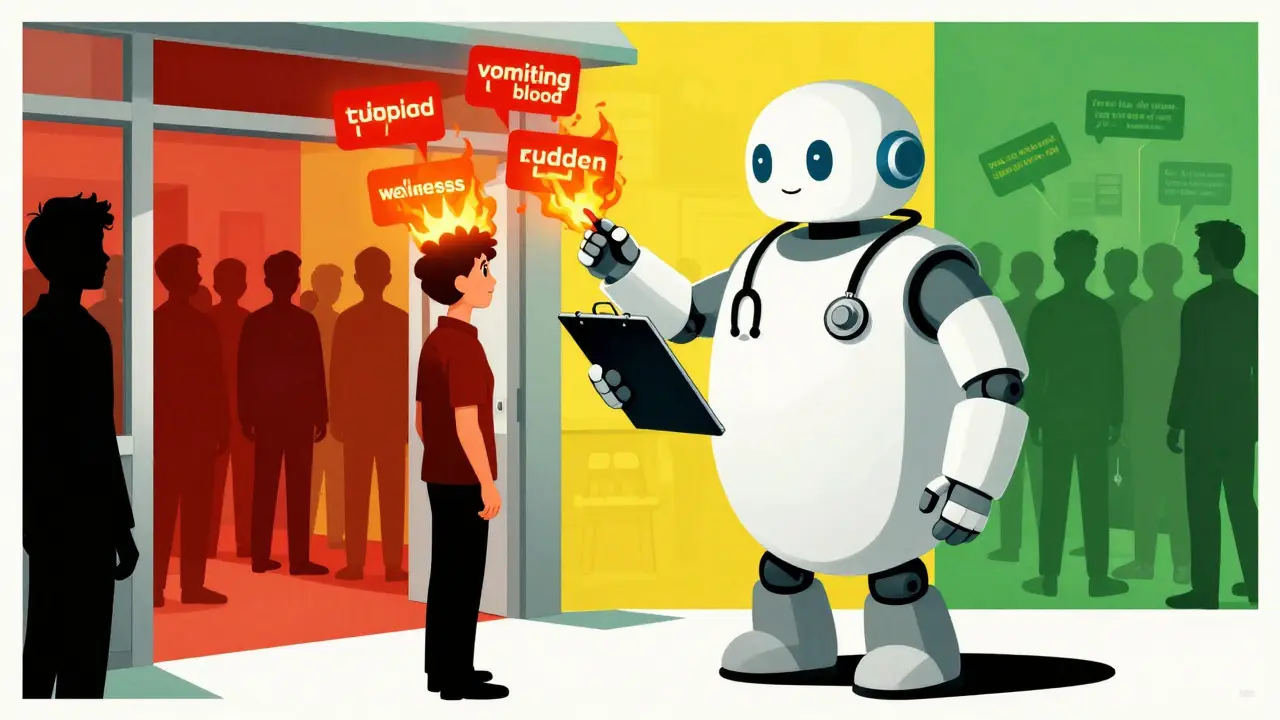

When a patient calls a clinic with stomach pain, how do you know if it’s indigestion or a heart attack? Triage is the first filter. Traditionally, that’s done by nurses using protocols like the Manchester Triage System. Now, LLMs are stepping in.

At Kaiser Permanente and other large health systems, patients text symptoms into a portal. An LLM analyzes the message, checks for red flags (“sudden weakness,” “confusion,” “vomiting blood”) and ranks urgency. In one study, this system cut response time by 17 hours per week. That’s huge when you’re dealing with strokes or sepsis.
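To make the “check for red flags, then rank” idea concrete, here is a deliberately simple keyword pre-screen. It is a stand-in for illustration only: an LLM does not work by substring matching, the phrase list is just the three examples quoted above, and `rank_by_red_flags` is a hypothetical name.

```python
# Phrases taken from the examples in the text; a real list would be clinical policy.
RED_FLAGS = ("sudden weakness", "confusion", "vomiting blood")


def red_flag_hits(message):
    """Return the red-flag phrases that appear in a patient message."""
    lowered = message.lower()
    return [flag for flag in RED_FLAGS if flag in lowered]


def rank_by_red_flags(messages):
    """Order messages so those with the most red-flag phrases come first.

    sorted() is stable, so messages with equal counts keep their arrival order.
    """
    return sorted(messages, key=lambda m: len(red_flag_hits(m)), reverse=True)


# Hypothetical portal messages.
inbox = [
    "Mild heartburn after dinner",
    "Sudden weakness in my left arm and confusion",
    "I have been vomiting blood since this morning",
]
queue = rank_by_red_flags(inbox)
print(queue[0])  # Sudden weakness in my left arm and confusion
```

A keyword screen like this misses paraphrases (“my arm went limp”), which is exactly the gap a language model is meant to close.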

But here’s the catch: LLMs are overly cautious. In 23% of cases, they overtriage, labeling low-risk patients as high-risk. That means more tests, more waiting, more costs. Human nurses, on the other hand, more often undertriage and miss real emergencies. A 2024 JMIR study found that GPT-4’s triage decisions reached a Cohen’s kappa of 0.67 against professional triage nurses, while GPT-3.5 reached only 0.54. Kappa measures agreement beyond chance, so 0.67 is not the same as matching nurses 67% of the time. Newer models are better, but they’re not flawless.
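Kappa and raw agreement are easy to confuse, so here is a short sketch of how they differ. It computes plain (unweighted) Cohen’s kappa by hand; whether the cited study used a weighted variant is not stated here, so treat the formula choice as an assumption. The ten labels are made up for illustration and come from no study.

```python
from collections import Counter


def cohen_kappa(rater_a, rater_b):
    """Unweighted Cohen's kappa for two equal-length lists of labels."""
    n = len(rater_a)
    observed = sum(a == b for a, b in zip(rater_a, rater_b)) / n
    count_a, count_b = Counter(rater_a), Counter(rater_b)
    # Agreement expected by chance, from each rater's label frequencies.
    expected = sum(count_a[label] * count_b[label] for label in count_a) / (n * n)
    if expected == 1:  # both raters used a single identical label throughout
        return 1.0
    return (observed - expected) / (1 - expected)


def triage_error_rates(nurse, model, high="high"):
    """Share of all cases the model overtriages or undertriages versus the nurse."""
    n = len(nurse)
    over = sum(m == high and r != high for r, m in zip(nurse, model)) / n
    under = sum(m != high and r == high for r, m in zip(nurse, model)) / n
    return over, under


# Hypothetical labels for ten cases (not data from any study).
nurse = ["high", "high", "low", "low", "low", "low", "high", "low", "low", "low"]
model = ["high", "high", "high", "low", "low", "low", "high", "low", "high", "low"]

raw_agreement = sum(a == b for a, b in zip(nurse, model)) / len(nurse)
kappa = cohen_kappa(nurse, model)
over, under = triage_error_rates(nurse, model)
print(raw_agreement, round(kappa, 2), over, under)  # 0.8 0.6 0.2 0.0
```

In this toy set the model matches the nurse in 80% of cases, yet kappa is only 0.6, because half of that agreement would be expected by chance. All of the disagreement is overtriage, which mirrors the cautious behavior described above.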

And then there’s bias. A 2024 arXiv study showed LLMs gave lower urgency scores to Black and Hispanic patients in simulated cases, even when symptoms were identical to white patients. That’s not just a technical glitch; it’s a moral risk. These models learn from historical data, and historical data is full of disparities. If you don’t fix that at the source, the AI will keep repeating it.
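A basic audit for this kind of bias can be sketched as a paired comparison: run the same vignettes with only the stated demographic changed, then compare scores case by case. The sketch below assumes urgency is a number on some fixed scale and that each group’s list holds scores for the same vignettes in the same order. The scores and the function name `urgency_gap_by_group` are hypothetical.

```python
from statistics import mean


def urgency_gap_by_group(scores, reference_group):
    """Mean paired difference in urgency score versus a reference group.

    scores maps group name -> list of scores for the SAME vignettes, same order.
    A negative gap means the group was scored as less urgent on identical symptoms.
    """
    reference = scores[reference_group]
    return {
        group: mean(s - r for s, r in zip(values, reference))
        for group, values in scores.items()
        if group != reference_group
    }


# Hypothetical scores for four identical vignettes; not results from any study.
scores = {
    "white": [4, 3, 5, 2],
    "black": [3, 3, 4, 2],
    "hispanic": [4, 2, 4, 2],
}
gaps = urgency_gap_by_group(scores, reference_group="white")
print(gaps)  # {'black': -0.5, 'hispanic': -0.5}
```

A real audit would also need enough vignettes and a significance test before drawing conclusions; this only shows the shape of the comparison.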

What Makes an LLM Work in Real Hospitals?

Not every hospital that tries an LLM succeeds. The ones that do have three things in common.

- Clinician champions. Someone respected, like a senior doctor or nurse, gets behind the tool. They show others how it helps, not just what it does. Hospitals with champions saw 3.2 times more usage.

- Integration specialists. Connecting an AI to your EHR isn’t plug-and-play. It takes months. You need people who understand both the software and the clinical workflow.

- Real-time review. If the AI’s note goes straight into the chart without a human checking it, errors pile up. Hospitals that require clinicians to review every AI-generated note reduced errors by 63%.

Training matters too. Most doctors have never been taught how to prompt an AI. Early users typed things like “Write a note for this patient.” That didn’t work. The best prompts are specific: “Summarize this encounter in SOAP format, include all medications discussed, and flag any red-flag symptoms.” It takes a couple of weeks for staff to learn how to prompt effectively.
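One way to make good prompting repeatable is to put the specific instructions in a template instead of relying on each clinician to retype them. The helper below is a sketch under that assumption. `build_note_prompt` is a hypothetical name, and the final “do not add” instruction is an addition of this example rather than something quoted from the text above.

```python
def build_note_prompt(transcript, note_format="SOAP"):
    """Assemble a specific documentation prompt around an encounter transcript."""
    instructions = [
        f"Summarize this encounter in {note_format} format.",
        "Include all medications discussed.",
        "Flag any red-flag symptoms.",
        # Added for this sketch: an explicit guard against invented content.
        "Do not add medications, findings, or diagnoses that are not in the transcript.",
    ]
    return "\n".join(instructions) + "\n\nTranscript:\n" + transcript.strip()


# Hypothetical transcript snippet.
prompt = build_note_prompt("Patient reports fever for three days.\n")
print(prompt)
```

The template does not guarantee a correct note, but it removes one source of variation, so reviewers see drafts built from the same instructions every time.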

The Tech Behind the Tools

These aren’t just ChatGPT with a stethoscope. Healthcare LLMs are built differently. They’re trained on billions of tokens of real medical text: de-identified EHRs, PubMed articles, clinical guidelines. BioBERT is pre-trained on biomedical literature, and Med-PaLM 2 is fine-tuned on medical question-answering data, including exam-style questions. They don’t just know what “hypertension” means; they also capture how beta-blockers are used to treat it, how it presents in elderly patients, and what labs to order.

Performance varies. GPT-4 scores 85.5% on the MultiMedQA benchmark, and Google’s latest medical model is reported to beat it by 7 points. But accuracy drops when the data doesn’t match training. If a patient has a rare condition (say, a genetic disorder seen in only 1 in 500,000 people), the model’s accuracy can fall by 18%. Missing vital signs? Accuracy drops another 22%. That’s why LLMs need human backup.

Integration is another hurdle. Most systems use HL7 FHIR standards to talk to EHRs. But only 37% of current implementations can send data back and forth seamlessly. That means the AI can read a note, but can’t update the patient’s allergy list or medication history automatically. That’s a big limitation.
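For a sense of what “updating the allergy list” involves, here is a sketch of the payload for a FHIR R4 `AllergyIntolerance` resource, built as a plain Python dictionary. The helper name, patient ID, and substance are hypothetical. Marking the entry “unconfirmed” is a design assumption of this example, so that an AI-suggested allergy stays flagged until a clinician verifies it. No network call is made; in a two-way integration this JSON would be sent to the EHR’s FHIR endpoint.

```python
import json

CLINICAL_STATUS = "http://terminology.hl7.org/CodeSystem/allergyintolerance-clinical"
VERIFICATION_STATUS = "http://terminology.hl7.org/CodeSystem/allergyintolerance-verification"


def allergy_intolerance(patient_id, substance_text):
    """Build a minimal FHIR R4 AllergyIntolerance resource as a dict."""
    return {
        "resourceType": "AllergyIntolerance",
        "clinicalStatus": {"coding": [{"system": CLINICAL_STATUS, "code": "active"}]},
        # "unconfirmed" so the entry is visibly pending clinician review.
        "verificationStatus": {
            "coding": [{"system": VERIFICATION_STATUS, "code": "unconfirmed"}]
        },
        # Free-text substance; a production system would use a coded concept.
        "code": {"text": substance_text},
        "patient": {"reference": f"Patient/{patient_id}"},
    }


resource = allergy_intolerance("example-123", "penicillin")
print(json.dumps(resource, indent=2))
```

Reading a note only requires fetching resources like this one. Writing them back is where most integrations stop, because the EHR has to accept and reconcile the new entry.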

Market Reality: Who’s Using This, and Why?

The healthcare LLM market hit $1.27 billion in 2023 and is expected to grow over 42% a year. But adoption isn’t even. Academic medical centers lead: 43% use LLMs. Community hospitals? Only 12%. Private practices? Just 3%.
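To see what that growth rate implies, the compounding can be worked out directly. This is simple arithmetic on the two figures above, assuming the rate stays constant at exactly 42% (the text says “over 42%”, so these are lower bounds). The function name `project_market` is hypothetical.

```python
def project_market(start_billion, annual_growth, years):
    """Compound a starting market size forward by a constant annual growth rate."""
    return start_billion * (1 + annual_growth) ** years


# $1.27 billion in 2023, compounded at 42% per year.
for offset in range(1, 4):
    size = project_market(1.27, 0.42, offset)
    print(2023 + offset, round(size, 2))
# 2024 1.8
# 2025 2.56
# 2026 3.64
```

At that pace the market roughly doubles every two years, which explains the vendor interest despite the uneven adoption.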

Why? Cost. Installing a full system runs about $287,000 per hospital. That’s a lot for a small clinic. And ROI is slow. Only 28% of implementations show a positive return within 18 months. Most hospitals see savings in reduced burnout and fewer clerical errors, but those don’t show up on a balance sheet right away.

Regulation is messy. The FDA says most LLMs are Class II medical devices and need clearance. But only 17 had received 510(k) clearance as of late 2023. Many fly under the radar, labeled as “administrative tools.” That’s changing. The EU’s AI Act, which entered into force in August 2024 and whose first obligations began applying in February 2025, demands stricter validation. Hospitals with global operations now face two sets of rules.

The Future: AI That Sees, Reads, and Listens

Next up? Multimodal LLMs. These aren’t just text processors; they can analyze X-rays, EKGs, and ultrasound images alongside patient notes. Microsoft’s LLaVA-Med can look at a chest X-ray and a symptom description and say, “This looks like pneumonia, not heart failure.” Accuracy? 78% on common cases. But drop to rare conditions? It falls to 62%.

By 2026, 65% of new healthcare AI tools are projected to combine text and imaging. Imagine a patient walks in with a rash and a fever. The AI scans the image, pulls up the history, checks recent lab results, and says: “This matches Kawasaki disease. Urgent pediatric consult needed.” That’s the goal.

But the real win isn’t automation. It’s augmentation. The best systems don’t replace doctors. They free them up. AI handles the grunt work: notes, triage sorting, follow-up reminders. Doctors focus on what they do best: listening, thinking, deciding.

One hospital in Boston cut documentation time by 48% and kept note accuracy at 99.2%, because every AI note was reviewed. That’s the model: speed with safety. Efficiency with accountability.

What’s Holding This Back?

Three things: trust, transparency, and training.

Doctors don’t trust what they can’t understand. If an AI says “rule out myocardial infarction,” but doesn’t explain why, clinicians won’t rely on it. They need to see the logic: the clues it used, the data it weighed.

Transparency is improving. New tools now show “confidence scores” and highlight which parts of the patient’s history influenced the output. That helps.

Training is the biggest gap. Most hospitals train IT staff to install the software. Few train clinicians to use it well. That’s like giving someone a scalpel and saying “go operate.” You need to teach them how to hold it.

And then there’s privacy. 78% of hospitals worry about HIPAA. Can the AI accidentally leak data? Are vendors storing patient conversations? These aren’t theoretical concerns. One vendor was found storing voice recordings on unencrypted servers. That’s a lawsuit waiting to happen.

So where does this leave us? LLMs aren’t magic. They’re tools. Powerful ones. But they need guardrails, oversight, and human judgment. The hospitals that succeed won’t be the ones with the fanciest AI. They’ll be the ones that treat the AI like a new resident: supervised, trained, and never left alone with a patient.

Can LLMs replace doctors in triage or documentation?

No. LLMs are assistants, not replacements. They draft notes and flag urgent cases, but they can’t diagnose complex conditions, interpret subtle symptoms, or build trust with patients. Hospitals that deploy them use them as a first-pass tool, followed by human review. The goal isn’t to remove doctors; it’s to remove their paperwork.

Are LLMs accurate enough for clinical use?

For documentation, yes: studies report 85-92% accuracy. For triage, GPT-4 reaches a Cohen’s kappa of 0.67 against nurses, which indicates substantial but imperfect agreement. Accuracy drops sharply with rare conditions or missing data. No model is perfect. The key is using them as decision support, not final authorities. Always validate.

Why do LLMs sometimes make dangerous errors?

They hallucinate. They can generate plausible-sounding details from statistical patterns rather than from the patient’s actual record. A model might add a drug because it’s commonly paired with a symptom, even if the patient never took it. Or it might miss a diagnosis because it wasn’t in its training data. That’s why clinical review is non-negotiable.

Do LLMs have bias in healthcare?

Yes. Studies show LLMs give lower urgency scores to Black and Hispanic patients in simulated cases, even when symptoms are identical. This reflects bias in historical data. Without active correction, such as retraining on balanced datasets or applying fairness constraints, these biases will persist and can worsen.

What’s the biggest barrier to adopting LLMs in hospitals?

Cost and complexity. Installing an LLM system costs about $287,000 on average and takes 3-6 months of integration work. Smaller hospitals can’t afford it. Even when they can, without clinician champions and training, adoption fails. Technology alone isn’t enough.

What Comes Next?

Right now, the best hospitals use LLMs like a second pair of eyes. They don’t trust them blindly. They don’t ignore them either. They use them to catch what humans miss: typos in medication lists, overlooked symptoms, delayed follow-ups. And they’re learning to ask better questions.

One day, LLMs might predict who’s at risk of sepsis before they show symptoms. Or suggest the best antibiotic based on local resistance patterns. But that day isn’t here yet. For now, the win is simpler: less time typing, more time healing.

Susannah Greenwood

I'm a technical writer and AI content strategist based in Asheville, where I translate complex machine learning research into clear, useful stories for product teams and curious readers. I also consult on responsible AI guidelines and produce a weekly newsletter on practical AI workflows.

3 Comments

ok so i just read this whole thing and like… where’s the proof that these ai tools actually reduce burnout? i mean sure they save 48% of documentation time but did anyone track if docs actually use that time to rest or just cram in more patients? also why is no one talking about how many times the ai adds fake meds? that’s not a bug that’s a lawsuit waiting to happen. and dont even get me started on the spelling errors in the notes - i saw one that said ‘hypotension’ as ‘hypotenshun’ and i nearly had a stroke

the docs arent replacing humans theyre just doing the boring stuff so humans can do the human stuff

also the part about triage being too cautious makes sense i mean better safe than sorry right

but yeah the bias thing is scary

oh wow so now the machines are writing our notes and deciding who lives and who dies and we’re just supposed to nod and sign our names like obedient little clerks

and the worst part? the same tech that’s ‘saving’ us is probably the reason half the patients are getting misdiagnosed because some intern in Silicon Valley trained it on data from 2012 and forgot to update it

also i bet the CEO of this company is sipping champagne on a yacht while nurses are crying in the breakroom

we’re not automating healthcare we’re outsourcing empathy to a chatbot that thinks ‘chest pain’ means ‘indigestion’