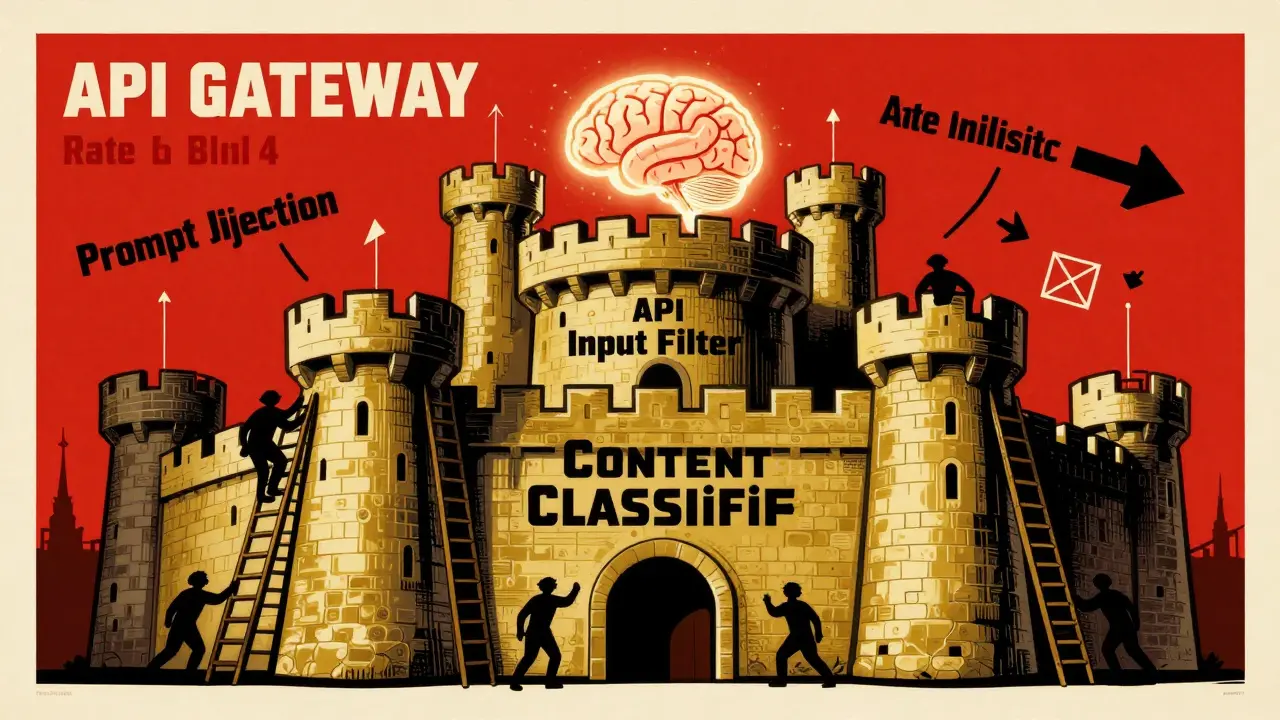

Safety Layers in Generative AI: Content Filters, Classifiers, and Guardrails Explained

Safety layers in generative AI-like content filters, classifiers, and guardrails-are essential for preventing harmful outputs, blocking attacks, and protecting data. Without them, AI systems become unpredictable and dangerous.