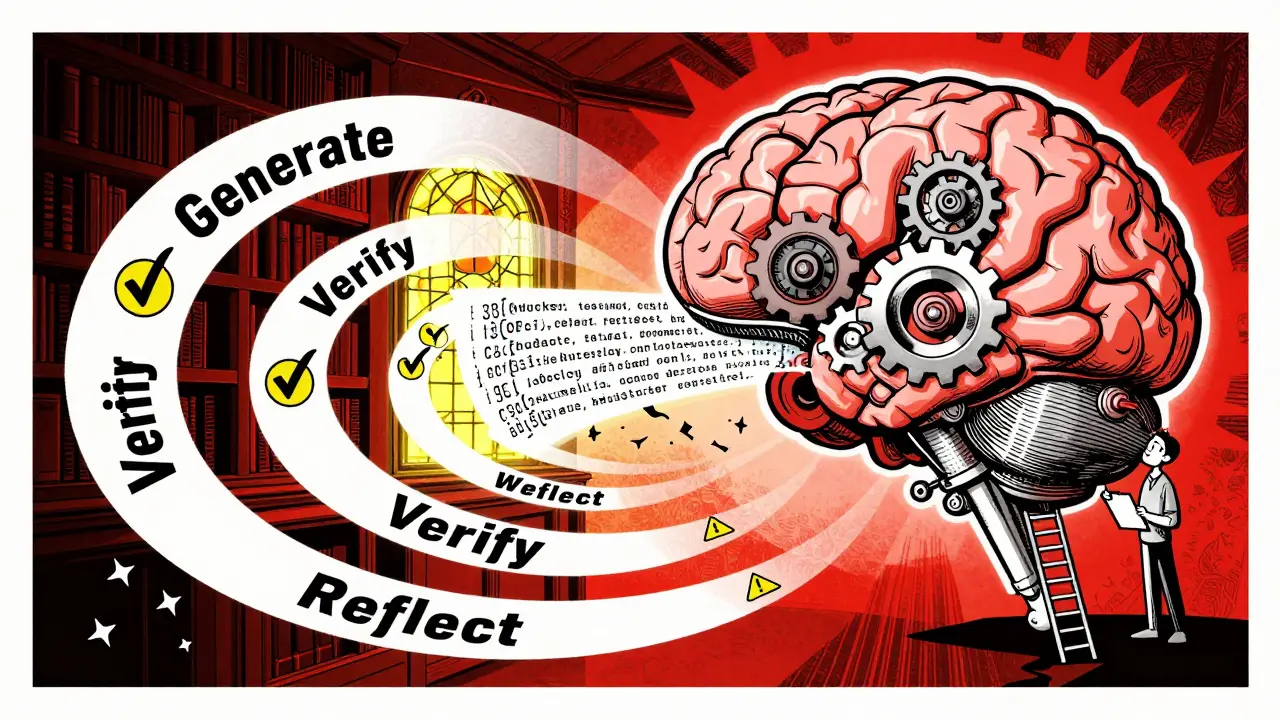

Post-Generation Verification Loops: How Automated Fact Checks Are Making LLMs Reliable

Post-generation verification loops run automated checks on an LLM's output after it is produced, catching errors before the result is used and replacing guesswork with verifiable results. They are reshaping code generation, hardware design, and safety-critical AI, but their value is concentrated where accuracy matters most.
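As a rough illustration of the loop's shape, here is a minimal sketch that assumes unit tests are the automated check. It assumes a model that can accept feedback on retry, and it caps the number of attempts. `fake_llm`, `verify`, and `generate_with_verification` are hypothetical names. `fake_llm` stands in for a real model call and deliberately returns a buggy first draft so the retry path runs. This is not any particular system's implementation.

```python
from typing import Callable, Optional

# (args, expected_result) pairs used as the automated check.
TestCase = tuple[tuple[int, ...], int]


def fake_llm(prompt: str, feedback: Optional[str]) -> str:
    """Hypothetical stand-in for an LLM call that returns candidate source code.

    A real system would send `prompt` (plus any `feedback`) to a model API.
    Here the first draft has an off-by-one bug; any retry with feedback is correct.
    """
    if feedback is None:
        return "def add(a, b):\n    return a + b + 1\n"
    return "def add(a, b):\n    return a + b\n"


def verify(source: str, entry_point: str, tests: list[TestCase]) -> Optional[str]:
    """Run the candidate against test cases; return an error message, or None if all pass."""
    namespace: dict = {}
    try:
        # WARNING: exec on model output is unsafe; real systems run this in a sandbox.
        exec(source, namespace)
        fn = namespace[entry_point]
    except Exception as exc:
        return f"candidate failed to load: {exc!r}"
    for args, expected in tests:
        try:
            got = fn(*args)
        except Exception as exc:
            return f"{entry_point}{args} raised {exc!r}"
        if got != expected:
            return f"{entry_point}{args} returned {got}, expected {expected}"
    return None


def generate_with_verification(
    prompt: str,
    generate: Callable[[str, Optional[str]], str],
    entry_point: str,
    tests: list[TestCase],
    max_attempts: int = 3,
) -> tuple[str, int]:
    """Generate, check, and regenerate with the checker's feedback until the output passes."""
    feedback: Optional[str] = None
    for attempt in range(1, max_attempts + 1):
        candidate = generate(prompt, feedback)
        feedback = verify(candidate, entry_point, tests)
        if feedback is None:
            return candidate, attempt
    raise RuntimeError(f"no verified output after {max_attempts} attempts: {feedback}")


if __name__ == "__main__":
    code, attempts = generate_with_verification(
        "Write add(a, b)", fake_llm, "add", [((1, 2), 3), ((0, 0), 0)]
    )
    print(f"verified after {attempts} attempt(s)")  # prints "verified after 2 attempt(s)"
```

The key design choice is that the checker returns a concrete error message rather than a bare pass/fail. That message is fed back into the next generation attempt. The attempt cap keeps a model that never converges from looping forever; in that case the caller gets an explicit failure instead of unverified output.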