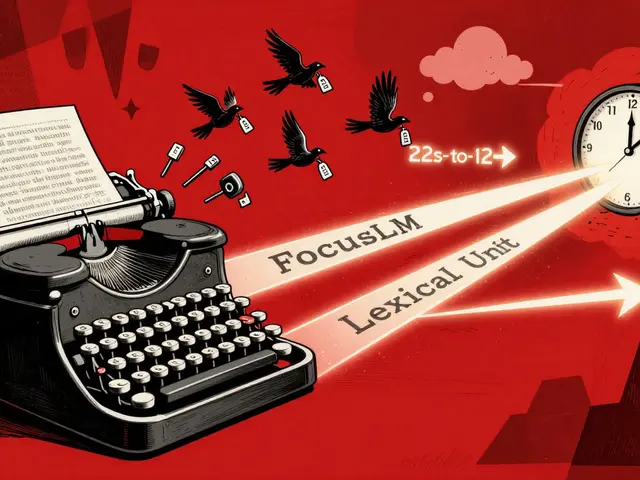

Speculative Decoding for Large Language Models: How Draft and Verifier Models Speed Up AI Responses

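Speculative decoding accelerates large language models by pairing a small, fast draft model with a larger verifier model: the draft proposes several tokens ahead, and the verifier checks them together instead of generating each one itself. This can make responses up to 5x faster without losing output quality. AWS, Google, and Meta use it, and it's now standard in enterprise AI.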
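To make the draft-and-verifier pairing concrete, here is a minimal sketch of the greedy variant of speculative decoding. Everything in it is an illustrative assumption rather than any vendor's implementation: the two toy "models" are plain functions over integer tokens, and the names `draft_next`, `verifier_next`, and `speculative_decode` are hypothetical. Production systems typically verify proposals against token probabilities with a rejection-sampling rule and score all proposed positions in one batched forward pass; the sketch simulates that pass with a loop and simply counts it as one.

```python
from typing import Callable, List, Tuple

# A "model" here is any function that maps a token prefix to the next token.
Model = Callable[[List[int]], int]


def verifier_next(prefix: List[int]) -> int:
    """Toy stand-in for the large, slow model (hypothetical)."""
    return sum(prefix) % 7


def draft_next(prefix: List[int]) -> int:
    """Toy stand-in for the small, fast model (hypothetical).

    It agrees with the verifier except when the prefix length is a
    multiple of 4, which simulates occasional draft mistakes.
    """
    guess = sum(prefix) % 7
    return (guess + 1) % 7 if len(prefix) % 4 == 0 else guess


def greedy_decode(prompt: List[int], verifier: Model, max_new_tokens: int) -> List[int]:
    """Baseline: one verifier call per generated token."""
    tokens = list(prompt)
    for _ in range(max_new_tokens):
        tokens.append(verifier(tokens))
    return tokens


def speculative_decode(
    prompt: List[int],
    draft: Model,
    verifier: Model,
    max_new_tokens: int,
    k: int = 4,
) -> Tuple[List[int], int]:
    """Greedy speculative decoding; returns (tokens, verifier passes)."""
    tokens = list(prompt)
    target_len = len(prompt) + max_new_tokens
    verifier_passes = 0

    while len(tokens) < target_len:
        # 1. The draft model proposes up to k tokens, one after another.
        proposal: List[int] = []
        for _ in range(min(k, target_len - len(tokens))):
            proposal.append(draft(tokens + proposal))

        # 2. The verifier checks every proposed position. A real system
        #    does this in a single batched forward pass, so it is
        #    counted as one pass here.
        verifier_passes += 1
        accepted: List[int] = []
        for tok in proposal:
            expected = verifier(tokens + accepted)
            if tok == expected:
                accepted.append(tok)       # draft token confirmed
            else:
                accepted.append(expected)  # replace the first wrong token
                break                      # discard the rest of the proposal
        else:
            # Every draft token was accepted: the same pass also yields
            # one extra verifier token, if there is room for it.
            if len(tokens) + len(accepted) < target_len:
                accepted.append(verifier(tokens + accepted))

        tokens.extend(accepted)

    return tokens, verifier_passes


if __name__ == "__main__":
    prompt = [1, 2, 3]
    baseline = greedy_decode(prompt, verifier_next, 12)
    output, passes = speculative_decode(prompt, draft_next, verifier_next, 12)
    print(output == baseline, passes)
```

Because a draft token is kept only when it matches what the verifier would have produced, the output is identical to plain greedy decoding with the verifier alone. The saving comes from needing fewer verifier passes than generated tokens, and it grows with how often the draft model guesses correctly.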