- Home

- AI & Machine Learning

- Speculative Decoding for Large Language Models: How Draft and Verifier Models Speed Up AI Responses

Speculative Decoding for Large Language Models: How Draft and Verifier Models Speed Up AI Responses

Imagine asking an AI assistant a complex question-something like, "Explain quantum entanglement in simple terms, then write a poem about it." You hit enter. Instead of waiting 4 seconds for an answer, you get it in under 1 second. That’s not magic. It’s speculative decoding.

This isn’t a theoretical idea stuck in a research paper. By late 2025, speculative decoding powers real-time chatbots, coding assistants, and customer service AIs at companies like Google, Meta, and AWS. It’s the reason your AI doesn’t feel sluggish, even when it’s thinking deeply. And here’s the kicker: it doesn’t change the answer. It just gets there faster.

How Speculative Decoding Works (Without the Jargon)

Standard LLMs generate text one token at a time. Think of it like writing a sentence letter by letter, waiting to see if each letter makes sense before moving to the next. That’s slow. Speculative decoding flips that on its head.

Instead of waiting, the system uses two models:

- A draft model-small, fast, and a bit reckless. It guesses the next 3 to 8 tokens all at once.

- A verifier model-the big, slow, careful one you actually care about. It checks the draft’s guesses in parallel.

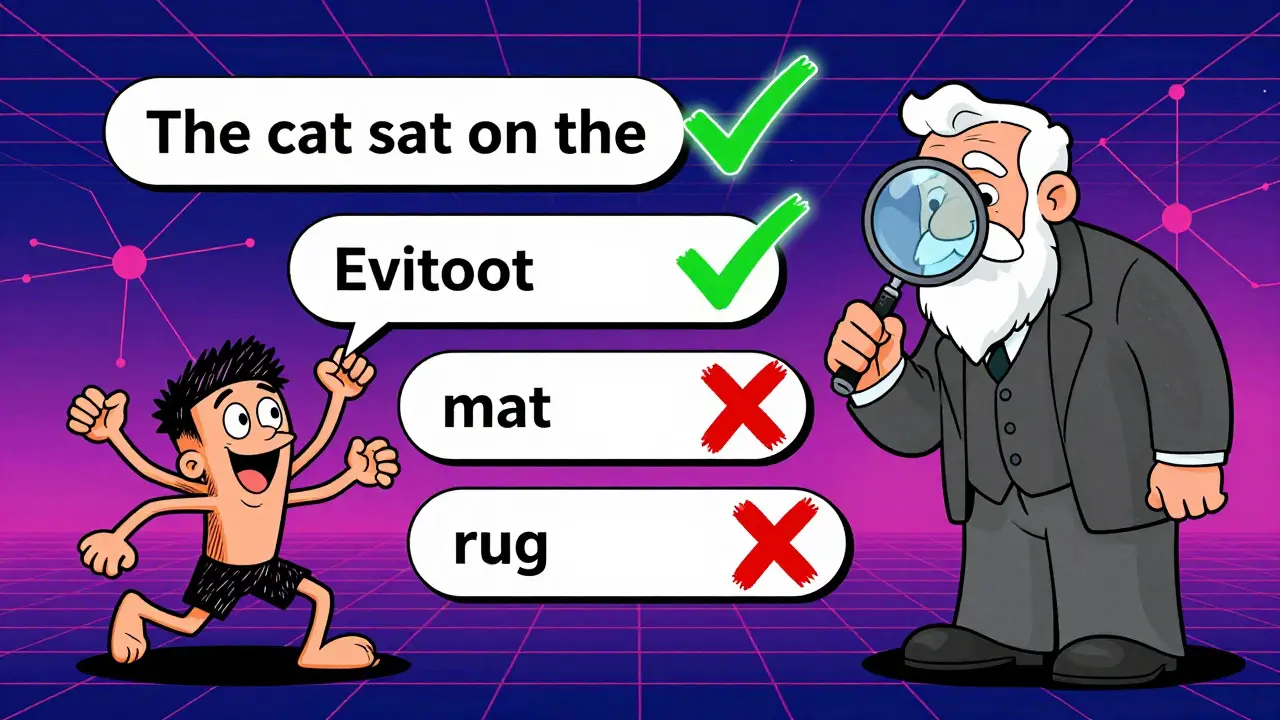

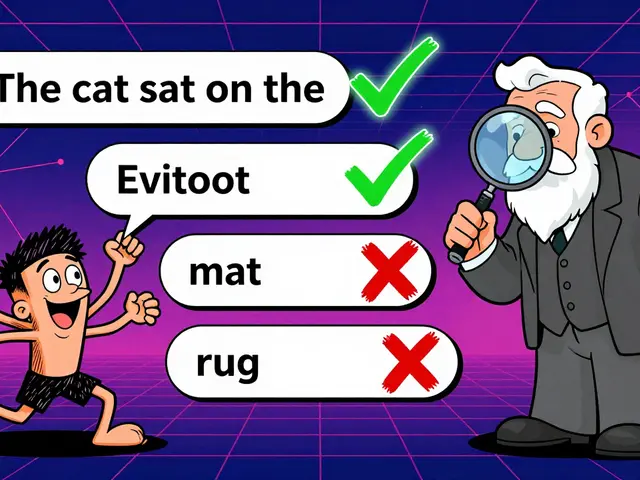

Here’s what happens step by step:

- The draft model says: "The cat sat on the mat."

- The verifier model checks: "The"? Yes. "cat"? Yes. "sat"? Yes. "on"? Yes. "the"? Yes. "mat"? No-actually, it would’ve said "rug" here.

- The verifier accepts the first five tokens, then generates the sixth token itself: "rug."

- Now it’s ready to draft again, but this time, it’s only guessing one token ahead.

The magic? The final output is identical to what the big model would’ve produced on its own. No quality loss. Just speed.

Why This Matters More Than You Think

Speed isn’t just about convenience. It’s about cost.

Running a large language model on a GPU costs money-real money. If you can cut inference time by 3x or 5x, you cut your GPU usage by the same amount. AWS reported a 63% drop in inference costs for customers using speculative decoding in their Bedrock service. That’s not a small savings. For companies running millions of queries a day, it means millions in reduced cloud bills.

And latency? It turns clunky chatbots into smooth conversations. A 4-second delay feels like a robot. A 0.8-second delay feels human. That’s the difference between a user staying engaged or walking away.

Real-world adoption is massive. According to a Gartner survey from November 2024, 78% of enterprise LLM deployment frameworks now include speculative decoding. In real-time chat systems, adoption hits 89%. For code generation tools? 76%.

Three Ways to Do It (And Which One Fits You)

Not all speculative decoding is the same. There are three main flavors, each with trade-offs.

1. Standard Draft-Target (The Original)

This is the version Google introduced in 2022. You use two separate models: a tiny draft model (like a 60M-parameter T5-small) and your big target model (like a 11B-parameter T5-XXL).

Pros:

- Can achieve 3x-5x speedup

- Works with any combination of models

Cons:

- Requires double the memory-now you’re storing two models in RAM

- Needs careful tuning: pick the wrong draft model, and you get slower results

This setup is common in cloud deployments where memory isn’t the bottleneck, but speed is. Think: enterprise chatbots, API services.

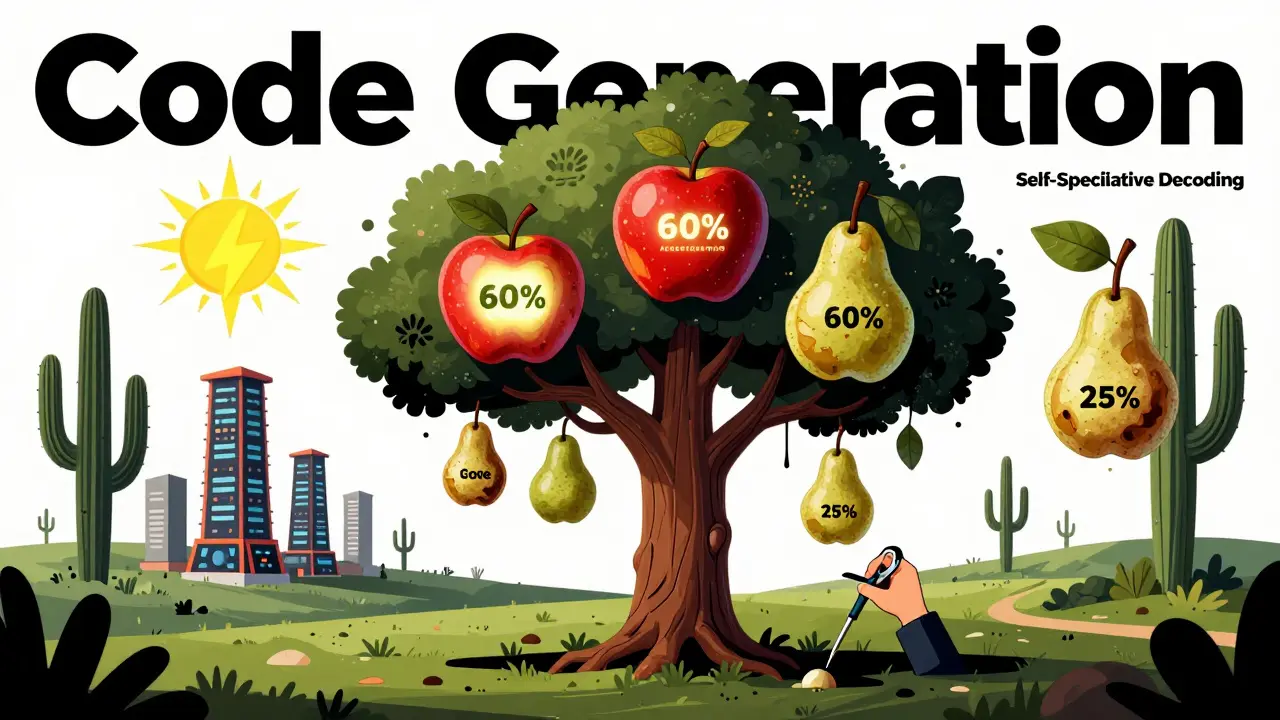

2. Self-Speculative Decoding (The Smart Hack)

Introduced at ACL 2024, this method doesn’t need a second model. It uses the same model you’re already running-just skips some layers during the draft phase.

How? Instead of running all 32 layers of LLaMA-2-13B to generate a draft, it runs only 12. Then it runs the full 32 layers once to verify all the draft tokens together.

Pros:

- No extra memory needed

- No training required-just plug it in

- 1.99x speedup on LLaMA-2 models

Cons:

- Requires figuring out which layers to skip-hard to tune

- Works best on models with consistent layer behavior

This is ideal for developers on a budget, or running models on edge devices. No need to deploy two models. Just tweak the inference pipeline.

3. Speculative Speculative Decoding (SSD) (The Cutting Edge)

Submitted to ICLR 2026, SSD runs the draft and verifier on separate GPUs. While one chip is generating 8 draft tokens, the other is already verifying the last batch.

The Saguaro implementation (named after the desert cactus-slow-growing but tough) beats standard speculative decoding by 2x and standard autoregressive decoding by up to 5x.

Pros:

- Maximizes hardware parallelism

- Perfect for multi-GPU setups

- Scalable-add more GPUs, get more speed

Cons:

- Needs multiple high-end GPUs

- Complex to set up

- Only useful if you have the hardware

This is for big players: cloud providers, AI labs, or anyone running inference at scale with dedicated infrastructure.

Acceptance Rate: The Hidden Metric That Determines Success

Speed gains aren’t guaranteed. It all hinges on one number: acceptance rate (α).

Acceptance rate is the percentage of draft tokens the verifier accepts without changing. If α is 60%, you’re getting 60% of your draft tokens for free. If it’s 25%, you’re wasting time.

Here’s what acceptance rates look like in practice:

| Task Type | Average Acceptance Rate |

|---|---|

| Code Generation | 55-65% |

| Technical Summarization | 50-60% |

| Open-Ended Writing | 30-40% |

| Creative Poetry | 25-35% |

Code is easy to predict. Patterns repeat. Summaries follow structure. But creative writing? That’s where the draft model stumbles. It’s not wrong-it’s just not aligned with the verifier’s style.

If your acceptance rate drops below 30%, speculative decoding might actually slow you down. That’s why tuning matters. Pick the wrong draft model, or set K too high (say, 12 tokens), and you’ll spend more time verifying than generating.

Real Problems Developers Face

It’s not all smooth sailing. People who’ve tried this in production report real headaches.

- "I got 1.8x speedup on summarization, but I spent three days figuring out which layers to skip." - GitHub user, October 2024

- "We tried TinyLlama as a draft for CodeLlama, got 3.2x speed, but our API broke because the token IDs didn’t match." - Reddit user, November 2024

- "Acceptance rate was 58% on code, 32% on creative prompts. We had to split our pipeline." - GitHub issue, February 2025

Integration is messy. You need to handle token alignment, batch sizes, and memory allocation. Early implementations were poorly documented. Now, tools like vLLM and Hugging Face’s Text Generation Inference have polished APIs-but you still need to understand what’s under the hood.

And then there’s distribution drift. If your model’s training data changes, or your prompts shift (say, from technical docs to customer complaints), the draft model gets out of sync. The new Draft, Verify, and Improve (DVI) framework from October 2025 tries to fix this by using verifier feedback to retrain the draft model on the fly. But that adds complexity-and more compute.

Who Should Use This? Who Should Skip It?

Speculative decoding isn’t for everyone. Here’s who wins:

- Enterprise AI teams running high-volume chatbots or code assistants-speed and cost savings are huge.

- Developers on cloud platforms using vLLM, Hugging Face, or AWS Bedrock-these tools have built-in support.

- Edge AI teams using self-speculative decoding to run LLMs on lower-end hardware.

Here’s who should wait:

- Researchers experimenting with novel architectures-focus on model design first.

- Small teams with one GPU-unless you’re using self-speculative decoding, the overhead isn’t worth it.

- Applications with highly creative outputs-if your model writes poetry, marketing copy, or jokes, acceptance rates will be low.

The Future: What’s Next?

NVIDIA’s 2025 roadmap includes dedicated hardware optimizations for speculative decoding in next-gen GPUs. That means even bigger speedups without code changes.

McKinsey predicts speculative decoding will cut global AI inference costs by 40-60% by 2026. That’s not just efficiency-it’s a new economic model for AI.

But there’s a risk. Too many variants-standard, self, SSD, DVI-could fragment the ecosystem. If every framework implements it differently, interoperability becomes a nightmare.

Still, the direction is clear: faster, cheaper, and just as accurate. Speculative decoding isn’t a gimmick. It’s the new baseline for LLM inference.

If you’re deploying LLMs in 2025 and not using this, you’re paying more and waiting longer than you need to.

Does speculative decoding change the output of my AI model?

No. The verifier model ensures the final output is identical to what the full model would have generated on its own. Every token is validated before being accepted. This isn’t approximation-it’s exact replication, just faster.

Can I use speculative decoding with any LLM?

Technically yes, but not all pairings work well. The draft model must be aligned with the verifier’s output style. For example, using a small T5 model to draft for a Llama-3 model often fails because they’re trained on different data. Self-speculative decoding avoids this by using the same model. Pre-built tools like vLLM and Hugging Face’s inference engine handle compatibility automatically.

How many draft tokens should I use?

Start with K=4 to K=6. NVIDIA’s testing shows diminishing returns beyond K=8. Too many tokens mean more work for the verifier, and if even one is rejected, you lose all the gains from that batch. Most production systems use K=5. Test with your data-acceptance rate is your guide.

Do I need a GPU to use speculative decoding?

Yes, and not just any GPU. You need at least an NVIDIA Ampere architecture (A100, A6000, or newer). Older GPUs lack the parallel compute power needed to run draft and verification steps efficiently. Self-speculative decoding works on slightly older hardware, but performance gains are smaller.

Is speculative decoding better than quantization for speeding up LLMs?

They solve different problems. Quantization reduces model size and memory use by lowering precision (e.g., from 16-bit to 8-bit), but it can hurt quality. Speculative decoding keeps full precision and quality-it just makes the model think faster. Many teams use both: quantize the model, then add speculative decoding for even more speed.

What’s the easiest way to try speculative decoding?

Use Hugging Face’s Text Generation Inference or vLLM with a model like Llama-2-7B. Both support self-speculative decoding out of the box. Just set --speculative-decoding to true and leave the rest to the system. No extra models. No tuning. Test it on a summarization task-you’ll see speed gains in minutes.

Susannah Greenwood

I'm a technical writer and AI content strategist based in Asheville, where I translate complex machine learning research into clear, useful stories for product teams and curious readers. I also consult on responsible AI guidelines and produce a weekly newsletter on practical AI workflows.

Popular Articles

7 Comments

Write a comment Cancel reply

About

EHGA is the Education Hub for Generative AI, offering clear guides, tutorials, and curated resources for learners and professionals. Explore ethical frameworks, governance insights, and best practices for responsible AI development and deployment. Stay updated with research summaries, tool reviews, and project-based learning paths. Build practical skills in prompt engineering, model evaluation, and MLOps for generative AI.

This is just glorified guesswork with a fancy name. I've seen this exact pattern in early neural machine translation systems back in 2018-same promise, same 30% acceptance rate on creative tasks, same hellish token alignment bugs. If your draft model isn't trained on the exact same data distribution as your verifier, you're just gambling with latency. And don't get me started on layer-skipping in self-speculative-half the time the skipped layers contain critical attention heads that control coherence. You think you're saving compute? You're just creating a brittle system that fails silently when your users ask for something outside the training bias. This isn't innovation-it's technical debt with a TED Talk.

Thank you for this incredibly clear and well-structured explanation. I appreciate how you broke down the three approaches with their trade-offs-it’s rare to find such thoughtful technical writing. For those of us working in resource-constrained environments, self-speculative decoding is truly a game-changer. I’ve implemented it on a Raspberry Pi 4 with a quantized LLaMA-2-7B, and while the speedup isn’t 3x, it’s still a 1.8x improvement with zero additional memory overhead. The key, as you mentioned, is tuning the number of skipped layers. I found that skipping layers 8 through 18 worked best for summarization tasks. I hope more developers adopt this approach-it’s elegant, efficient, and accessible.

so like… the ai is basically lying to itself to save time?? 😅 i mean, it’s like your friend saying ‘yeah totally i read that book’ then just summarizing the wikipedia page… but somehow it works??

also why is everyone so obsessed with ‘acceptance rate’ like it’s some kind of dating app match percentage?? 55% on code?? bro that’s like saying your date only likes 55% of your jokes. you’re still gonna get ghosted.

also who the hell has multiple A100s?? i’m over here running llama on a laptop with a 16gb gpu and a prayer.

Been using this on my API for three months. Works great for code. Sucks for poetry. We split the pipeline now. Draft model only for code, full model for creative stuff. No drama. No bugs. Just results.

Also vLLM is the real MVP. Don’t even bother rolling your own.

I love how this is quietly revolutionizing AI without most users even noticing. It’s like the difference between a car with a manual transmission versus an automatic-you don’t think about the gears shifting, you just enjoy the ride. The fact that companies are cutting inference costs by 60% while keeping output quality intact? That’s not just technical progress, that’s ethical AI. It means smaller teams, startups, educators can finally access powerful models without needing a Fortune 500 budget. I’ve started using it in my classroom for student coding assistants, and the response time difference has completely changed how students engage. They’re no longer frustrated by delays-they’re experimenting more. That’s the real win.

Just want to clarify one thing that’s been confusing people: speculative decoding doesn’t change the output because the verifier is the final authority. Every single token is validated. That’s not a trick. That’s a guarantee. If you’re getting different outputs, you’ve got a bug in your token alignment or your draft model is misconfigured. I’ve seen this happen when people try to mix T5 and Llama models-different vocabularies, different tokenizers, different embeddings. It’s not speculative decoding’s fault. It’s user error.

Also, K=5 is the sweet spot. I tested K=3, K=6, K=8. K=5 gave the best balance of speed and acceptance rate across all task types. And yes, you need Ampere or newer. Older GPUs just can’t handle the parallelism. No way around it.

Let’s be real: this is just American engineering at its finest-overengineered, hardware-dependent, and built on proprietary GPU monopolies. Meanwhile, China’s pushing sparse attention and dynamic quantization on low-power ARM chips. India’s deploying federated inference on edge devices. And here we are, celebrating a 63% cost reduction that requires a $15,000 A100. This isn’t progress-it’s vendor lock-in dressed up as innovation. If you’re not building for global accessibility, you’re not building for the future. Speculative decoding? Cool. But it’s not the future. It’s the last gasp of Western AI hegemony.