What Counts as Vibe Coding? A Practical Checklist for Teams

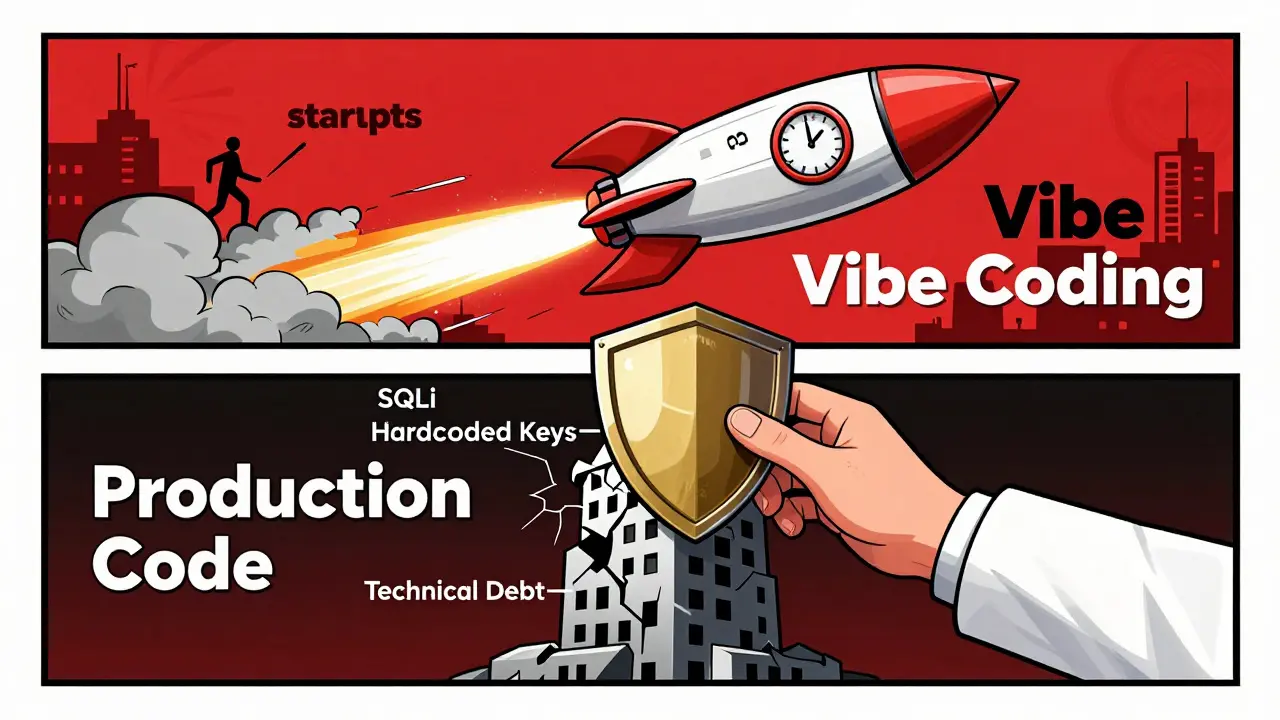

Imagine building a full app in three days: no debugging sessions, no line-by-line code reviews, no wrestling with frameworks. You just tell the AI what you want, hit run, and it works. That’s vibe coding. And if your team is doing it without realizing it, you might be on the edge of a major shift, or a major mess.

Vibe coding isn’t just using AI to write code. It means not looking at the code at all. Andrej Karpathy coined the term in February 2025, and as this checklist uses it, it’s more than a buzzword. It’s a strict methodology: describe what you need in plain language, let the AI generate the code, and judge it only by whether it passes tests, not by how it looks. No editing. No reading. No second-guessing. If you’re doing any of those, you’re not vibe coding. You’re just using AI helpers.

What Exactly Counts as Vibe Coding?

There’s a big difference between asking an AI for a function and letting it take over your whole workflow. True vibe coding has six non-negotiable rules:

- You describe tasks only in natural language: no code snippets, no pseudocode. "Build a login page with email verification and password reset" is fine. "Write a Python function using regex to validate emails" is not.

- You never open the generated code in your editor. Not even to check indentation. Cursor IDE logs must show zero manual edits.

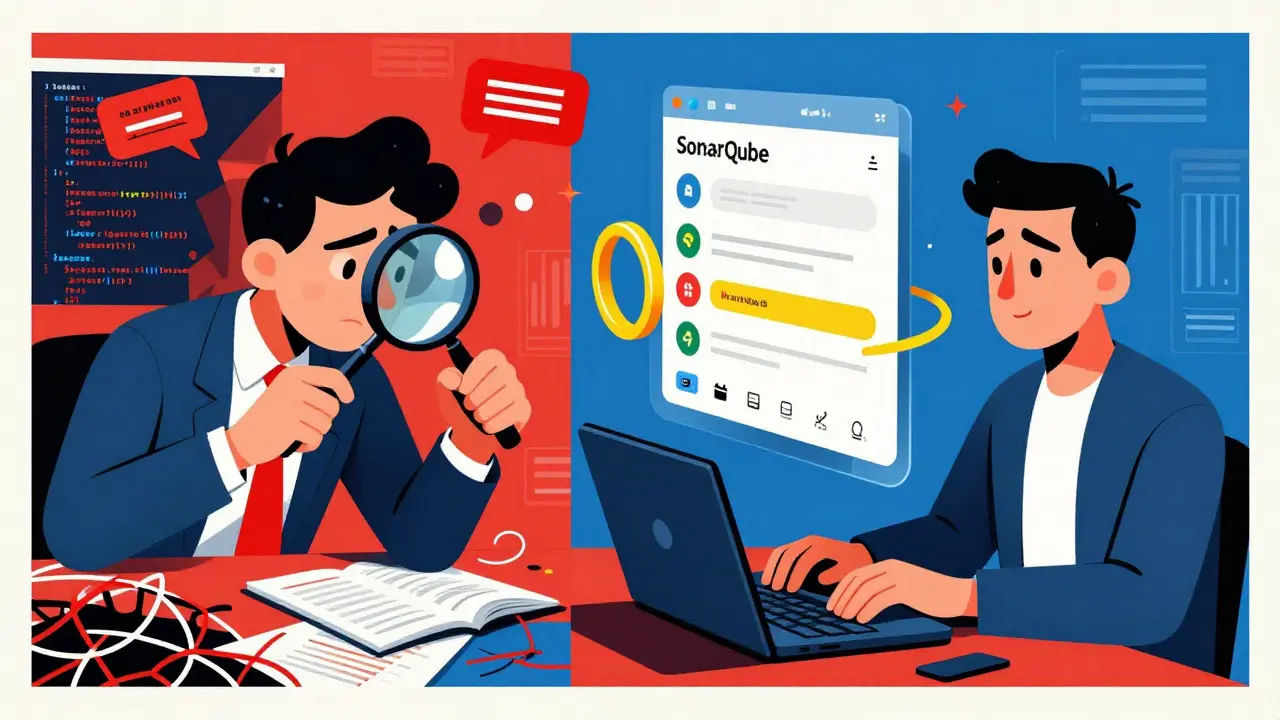

- You evaluate everything through automated tools: test results, CI/CD pipelines, SonarQube scans, or performance metrics. If the app runs and passes all tests, you move on.

- All changes come from new prompts. If something breaks, you don’t fix the code; you rewrite the request. "Make the login faster and add rate limiting" is the fix.

- You use vibe-optimized tools: GPT-4, Claude 3, Cursor v2.5+, or Windsurf with ‘vibe mode’ enabled. Standard IDEs with AI plugins don’t count.

- Your documentation is all user-focused. Confluence pages say "users can reset passwords via email," not "the AuthService class calls the EmailValidator module."

Most teams think they’re vibe coding when they’re just using AI to speed up typing. But if someone on your team opens a file to tweak a variable name, you’re not there yet.
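
To make the six rules concrete, here is a minimal Python sketch of a team self-audit. The `WorkflowAudit` class and its field names are hypothetical, invented for illustration; they are not part of Cursor, Windsurf, or any other tool mentioned here. The sketch only reports which rules a team fails.

```python
from dataclasses import asdict, dataclass


@dataclass(frozen=True)
class WorkflowAudit:
    """Hypothetical self-reported answers, one field per rule in the checklist."""

    prompts_are_natural_language_only: bool
    generated_code_never_opened: bool
    evaluated_only_by_automation: bool
    changes_made_only_via_prompts: bool
    uses_vibe_oriented_tooling: bool
    docs_are_user_focused: bool


def failed_rules(audit: WorkflowAudit) -> list[str]:
    """Return the names of the rules the team does not meet, in checklist order."""
    return [name for name, ok in asdict(audit).items() if not ok]


def is_vibe_coding(audit: WorkflowAudit) -> bool:
    """A team qualifies only when every rule holds."""
    return not failed_rules(audit)
```

A team where someone opens a file to tweak a variable name would set `generated_code_never_opened=False`, and `failed_rules` would return that one name.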

Why This Works, and When It Doesn’t

The numbers don’t lie. McKinsey found teams using vibe coding complete tasks 56% faster than traditional methods. Startups using it cut MVP setup time from 147 hours to 28. Garry Tan from Y Combinator saw non-technical founders build working products with zero coding experience. That’s the power: turning ideas into running software in hours, not weeks.

But here’s the catch: vibe coding thrives on simplicity and dies on complexity. It’s great for CRUD apps, form-based dashboards, API wrappers, and basic UIs. It fails hard at anything that needs security, logic depth, or algorithmic precision.

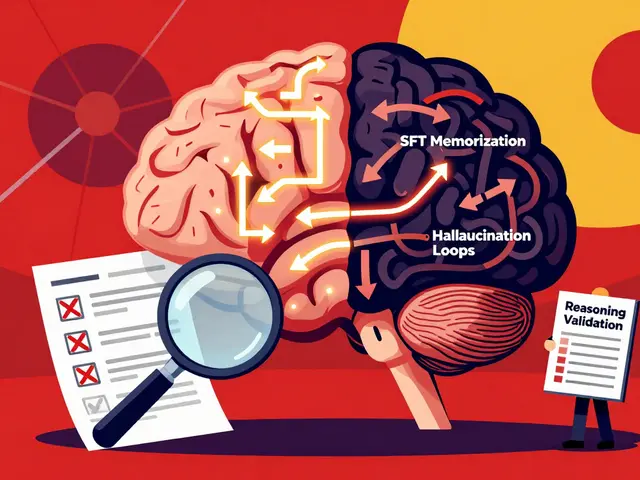

Pragmatic Engineer’s August 2025 study showed a 78% failure rate when AI tried to generate secure encryption code. That’s not a bug. It’s a fundamental limit. The model behind vibe coding doesn’t understand cryptography. It doesn’t know what a buffer overflow is. It doesn’t care about the OWASP Top 10. It just generates text that sounds right.

That’s why teams who succeed with vibe coding don’t use it for production systems. They use it for rapid prototyping, then hand off the working prototype to a traditional dev team for hardening. The best teams now follow a hybrid model: vibe coding for the 80% that’s easy, manual review for the 20% that could break everything.
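
One way a team might draw the line between the easy 80% and the risky 20% is a simple path-based rule. The sketch below is an assumption, not a method described by any of the sources cited here: the `HIGH_RISK_PARTS` set and the `needs_human_review` function are hypothetical names, and the directory names are placeholders to adapt to your own codebase.

```python
from pathlib import PurePosixPath

# Hypothetical areas a team treats as high risk; adjust to your own repository.
HIGH_RISK_PARTS = {"auth", "crypto", "payments", "migrations"}


def needs_human_review(changed_paths: list[str]) -> bool:
    """Return True if any changed file sits under a high-risk directory."""
    for path in changed_paths:
        # Split the path into its components and compare case-insensitively.
        parts = {part.lower() for part in PurePosixPath(path).parts}
        if parts & HIGH_RISK_PARTS:
            return True
    return False
```

With this rule, a change touching `src/auth/login.py` is routed to manual review, while one touching only `src/ui/button.tsx` stays in the prompt-only flow.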

The Tools You Need to Actually Do This

You can’t vibe code with VS Code and Copilot. You need tools built for this workflow.

- Cursor IDE (v2.5+): This editor has a built-in "vibe mode" that blocks manual editing and logs every prompt. The log is how you prove you’re not cheating.

- GPT-4 or Claude 3: These are the only LLMs proven to handle complex, multi-step prompts without hallucinating logic. Older models fail 40% of the time.

- SonarQube v10.4+: This isn’t optional. It scans AI-generated code for vulnerabilities, code smells, and performance issues without human review. It’s your safety net.

- Jenkins or GitHub Actions: Your CI pipeline must run tests automatically on every AI-generated build. No manual trigger. No exceptions.

- Replit’s Prompt Engine: Helps non-technical users phrase requests better. Teams using it saw a 47% improvement in output quality.

If your team is still using GitHub Copilot in a regular editor, you’re not vibe coding. You’re just being lazy with autocomplete.
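
The "no manual trigger, no exceptions" rule for CI can be pictured as a gate script that a pipeline step calls on every build. This is a minimal sketch under stated assumptions: `run_gate` is a hypothetical helper, and the actual test and scan commands depend on your project. It does not reproduce how Jenkins, GitHub Actions, or SonarQube work internally.

```python
import subprocess
import sys


def run_gate(commands: list[list[str]]) -> bool:
    """Run each check command in order and stop at the first failure.

    Each command is an argument list, for example a test runner or a
    scanner CLI. A non-zero exit code counts as a failed check.
    """
    for cmd in commands:
        result = subprocess.run(cmd)
        if result.returncode != 0:
            print(f"gate failed: {' '.join(cmd)}", file=sys.stderr)
            return False
    return True
```

A pipeline step could call `run_gate([[sys.executable, "-m", "pytest", "-q"]])` and exit non-zero when it returns `False`, so a failing build never moves forward without a new prompt.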

Who’s Actually Using This Successfully?

It’s not the big tech firms. They’re too risk-averse. It’s the scrappy ones.

- Startups with 1-5 people: 78% of Y Combinator-funded startups in 2025 used vibe coding to build their first prototype. One team built a SaaS billing system in 18 hours.

- Product managers and designers: GitHub’s 2025 report showed 34% of vibe coders had no formal programming background. They built apps by describing features like they were talking to a developer.

- Indie hackers: Reddit user "CodeWithVibes" built an e-commerce site in three days. It took two weeks to fix the security holes SonarQube found, but they had a working product to show investors.

Meanwhile, enterprise teams mostly use it for internal tools or demos. Only 22% of large companies allow vibe-coded code in production. And they’re right to be cautious.

The Hidden Risks Nobody Talks About

Yes, vibe coding is fast. But it’s also dangerous if you ignore the consequences.

- Technical debt explodes: Hacker News users reported 37% more technical debt in vibe-coded repos. CodeClimate metrics showed AI-generated code had 2.3x more duplicated blocks.

- Security nightmares: SonarSource found a 43% spike in vulnerabilities in unreviewed AI code. AI doesn’t avoid SQL injection, hardcoded keys, or XSS flaws. It often creates them.

- Team dependency: If the only person who knows how to prompt the AI leaves, the whole system breaks. There’s no documentation, just prompts.

- Skills erosion: Stanford’s AI Ethics Lab found junior devs who rely on vibe coding are 29% worse at manual debugging. They’ve lost the ability to read code.

Dr. Sarah Chen from Stanford put it bluntly: "Vibe coding bypasses essential safeguards. It’s technosolutionism dressed as efficiency."

That’s why SonarQube’s January 2026 update added "vibe coding verification," a feature that automatically flags code that looks like it was generated without review. It’s not meant to stop vibe coding. It’s meant to make sure you know what you’re letting in.

How to Start Without Losing Your Mind

If you’re thinking about trying this, don’t jump in headfirst. Here’s how to test it safely:

- Start with a side project. Not your core product. Something throwaway.

- Use Cursor in vibe mode. Block all manual edits. Force yourself to only use prompts.

- Run SonarQube and CI tests on every build. If it fails, rewrite the prompt, not the code.

- After two weeks, measure: How fast did you build it? How many bugs slipped through?

- Then, hand the code to a senior dev. Ask them to review it. You’ll see the gap.

Most teams realize after this that vibe coding is great for speed but terrible for sustainability. The real win isn’t building faster. It’s learning what parts of your workflow can be automated without sacrificing quality.
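
The "rewrite the prompt, not the code" discipline from the trial can be sketched as a loop. Everything here is hypothetical: `prompt_until_green` and its three callables stand in for whatever AI tool, CI checks, and prompt revision process your team uses. The prompt history it returns also gives you a simple count of attempts for the two-week measurement.

```python
from typing import Callable


def prompt_until_green(
    initial_prompt: str,
    generate: Callable[[str], str],
    passes_checks: Callable[[str], bool],
    revise_prompt: Callable[[str, int], str],
    max_attempts: int = 5,
) -> tuple[bool, list[str]]:
    """Regenerate from a revised prompt until checks pass or attempts run out.

    The generated artifact is never edited by hand; only the prompt changes.
    Returns (succeeded, list of prompts tried in order).
    """
    prompt = initial_prompt
    history: list[str] = []
    for attempt in range(1, max_attempts + 1):
        history.append(prompt)
        artifact = generate(prompt)
        if passes_checks(artifact):
            return True, history
        # Checks failed: change the request, not the generated code.
        prompt = revise_prompt(prompt, attempt)
    return False, history
```

Capping the attempts is a design choice: if five rewrites of the request still fail the checks, that is a signal to hand the task to a developer rather than keep prompting.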

Is Vibe Coding the Future?

The AI coding market hit $3.8 billion by the end of 2025, and 67% of that growth came from vibe coding. But the most successful teams aren’t going all-in. They’re blending it.

87% of high-performing engineering teams now use a hybrid model: vibe coding for prototypes, traditional review for production. They use AI to explore ideas fast, then lock down the real code with human oversight.

That’s the smart path. Vibe coding isn’t about replacing developers. It’s about replacing tedious work. The future belongs to teams who use AI to cut through noise, not to abandon judgment.

So ask yourself: Are you vibe coding? Or are you just avoiding the hard parts?

Can vibe coding be used for production code?

Most experts advise against it. While vibe coding can generate working code quickly, it lacks the security and structure needed for production systems. SonarSource found a 43% spike in vulnerabilities in unreviewed AI-generated code, and Stanford’s AI Ethics Lab warns that the practice bypasses essential safeguards. The safest approach is to use vibe coding only for prototyping, then hand off the working prototype to a traditional development team for hardening, testing, and security review.

Do I need to be a programmer to use vibe coding?

No. In fact, 34% of vibe coders in 2025 were non-technical founders, product managers, or designers. Tools like Cursor and Replit’s Prompt Engine are designed to let anyone describe what they want in plain English. One Indie Hackers user built a full SaaS MVP with no coding experience, using only clear prompts and a lot of testing. But while you can build something, you still need to understand testing, failure modes, and security basics to avoid disasters.

What’s the difference between vibe coding and AI pair programming?

In AI pair programming, you write code, ask the AI for suggestions, and then edit, refactor, or approve each line. In vibe coding, you never look at the code. You describe what you want, judge each output only by whether it passes automated tests, and make any change through a new prompt. The key difference is human intervention: pair programming involves constant review; vibe coding forbids it entirely.

Why can’t vibe coding handle complex algorithms?

Large language models don’t understand logic. They predict text. They’re great at generating code that looks like it should work, but they can’t reason about cryptographic security, memory management, or algorithmic efficiency. A 2025 Pragmatic Engineer study found AI-generated encryption code failed 78% of the time. Human developers, even juniors, understand these concepts. AI doesn’t. It guesses based on patterns, not principles.

Is vibe coding ethical?

It depends on context. For internal tools, prototypes, or non-critical apps, it’s a powerful time-saver. But in regulated industries such as healthcare, finance, and infrastructure, bypassing code review is a violation of safety principles. The EU’s February 2026 draft AI Act explicitly requires human oversight for critical system code. Using vibe coding in those areas could be legally risky and ethically irresponsible. The method is neutral; its ethics depend entirely on how and where it’s used.

How long does it take to get good at vibe coding?

Experienced developers need about 19 hours of practice to become proficient, according to Coding Temple’s November 2025 training data. Non-technical users take longer-around 38 hours-because they need to learn how to phrase prompts clearly and interpret test results. The biggest barrier isn’t the tooling; it’s learning to think in outcomes, not implementation. The best practitioners treat prompts like product specs: precise, outcome-focused, and testable.

Can vibe coding replace software engineers?

No. Vibe coding replaces some tasks, not the role. Teams still need engineers to design systems, write tests, interpret failures, set up CI/CD pipelines, and review AI output for security. The most successful teams use vibe coding to offload repetitive work, freeing engineers to focus on architecture, security, and edge cases. It’s a tool, not a replacement. The Association for Computing Machinery warns that uncritical adoption could erode core programming skills across the industry.

Bottom line: Vibe coding isn’t magic. It’s a high-speed, high-risk tool. Use it to explore, not to deploy. Let it save you time without costing you your reputation.

Susannah Greenwood

I'm a technical writer and AI content strategist based in Asheville, where I translate complex machine learning research into clear, useful stories for product teams and curious readers. I also consult on responsible AI guidelines and produce a weekly newsletter on practical AI workflows.
