How Curriculum and Data Mixtures Speed Up Large Language Model Scaling

What if you could make your large language model smarter without adding more parameters? That’s not science fiction; it’s what curriculum learning and smart data mixtures are doing in 2025. While everyone’s focused on bigger models, the real breakthroughs are happening in how we feed data to those models. Researchers at MIT-IBM, NVIDIA, and Meta have shown that carefully ordering and mixing training data can boost performance by up to 15%, cut training time by nearly 20%, and match the results of much larger models, all without adding a single parameter.

Why Data Order Matters More Than You Think

For years, training LLMs meant throwing trillions of tokens at a model in random order. The assumption was simple: more data equals better performance. But that’s not the whole story. A 2025 study from MIT-IBM Watson AI Lab found that data composition acts like a hidden variable in scaling laws. Models trained with carefully structured data mixtures performed significantly better than those trained on the same data, but shuffled randomly.

Think of it like learning to drive. You don’t start on a highway in a snowstorm. You begin with parking lots, then move to quiet streets, then rush hour traffic. The same logic applies to language models. Training on easy sentences first (basic grammar, common vocabulary, clear facts) builds a foundation. Then, as the model gets stronger, you introduce harder material: complex reasoning, rare languages, ambiguous references, multi-step math problems.

This isn’t just theory. Models trained with difficulty-graded curricula showed a 5.8% drop in loss at the same compute cost compared to random training. That means they learned faster. And faster learning means less money spent on GPU hours.

The Three Pillars of a High-Performance Data Mixture

NVIDIA’s 2025 scaling framework identified three non-negotiable dimensions for effective data mixtures:

- Breadth: Covering enough domains: science, law, code, fiction, medical reports, social media posts. A model that only knows Wikipedia can’t handle real-world queries.

- Depth: Not just variety, but complexity. A sentence like "The cat sat on the mat" is low depth. "Given the thermodynamic constraints and evolutionary trade-offs, why do some cetaceans exhibit prolonged post-reproductive lifespans?" is high depth.

- Freshness: Information decays. A model trained on 2020 data won’t know about the 2024 quantum computing breakthroughs or the latest FDA drug approvals. For tech topics, recency matters most: 6 months is ideal. For history or literature? 24 months is fine.
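The freshness guideline could be applied as a simple per-domain filter. This is a minimal sketch, assuming each document carries a `domain` label and a `published` date. The names `FRESHNESS_WINDOW_MONTHS`, `months_between`, and `is_fresh` are hypothetical, and the default window for unlisted domains is an assumption; only the 6-month and 24-month values come from the text above.

```python
from datetime import date

# Hypothetical per-domain freshness windows in months (values from the guideline above).
FRESHNESS_WINDOW_MONTHS = {"tech": 6, "history": 24, "literature": 24}
DEFAULT_WINDOW_MONTHS = 24  # assumption for domains that are not listed


def months_between(older: date, newer: date) -> int:
    """Whole calendar months from `older` to `newer` (day of month is ignored)."""
    return (newer.year - older.year) * 12 + (newer.month - older.month)


def is_fresh(domain: str, published: date, today: date) -> bool:
    """True if the document's age falls inside its domain's freshness window."""
    window = FRESHNESS_WINDOW_MONTHS.get(domain, DEFAULT_WINDOW_MONTHS)
    return months_between(published, today) <= window


# Illustrative documents, not real data.
docs = [
    {"id": "a", "domain": "tech", "published": date(2025, 9, 1)},
    {"id": "b", "domain": "tech", "published": date(2024, 1, 15)},
    {"id": "c", "domain": "history", "published": date(2024, 6, 1)},
]
today = date(2025, 12, 1)
fresh_ids = [d["id"] for d in docs if is_fresh(d["domain"], d["published"], today)]
```

A hard filter is the bluntest option. The same `is_fresh` check could instead down-weight stale documents in the sampling mix rather than drop them.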

Performance Gains You Can Actually Measure

The numbers don’t lie. In controlled tests across 12 major LLM families, curriculum-trained models outperformed baseline models by 22.4% on complex reasoning benchmarks like MATH and GSM8K. That’s not a small edge; it’s the difference between a model that can solve algebra word problems and one that just guesses. Breakdown of gains:

- Mathematical reasoning: +28.3%

- Scientific knowledge retention: +24.1%

- Multi-lingual performance: +19.8%

The Hidden Cost: Implementation Complexity

It’s not magic. Building a curriculum system is hard. Meta’s team spent 37% more time preprocessing data for Llama 3.1 than they did for earlier versions. Why? Because every piece of training data needs to be tagged. You need systems that can:

- Measure syntactic depth: how many clauses, negations, and embedded structures are in a sentence?

- Map content to domains using embeddings: does this paragraph belong to medicine, law, or gaming?

- Verify factual accuracy against trusted knowledge bases like Wikidata or PubMed.
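To give a feel for the first tagging task, here is a deliberately crude syntactic-depth proxy. It is a sketch under strong assumptions: the word lists `SUBORDINATORS` and `NEGATIONS` and the `syntactic_depth` function are hypothetical, and counting marker words and commas only approximates clause structure. A production tagger would rely on a real syntactic parser.

```python
import re

# Hypothetical marker lists; a real system would use a parser, not word matching.
SUBORDINATORS = {"because", "although", "while", "which", "that",
                 "if", "whereas", "unless", "since", "why"}
NEGATIONS = {"not", "no", "never", "neither", "nor"}


def syntactic_depth(sentence: str) -> int:
    """Crude proxy: 1 + subordinating words + negations + commas."""
    text = sentence.lower().replace("’", "'")  # normalize curly apostrophes
    tokens = re.findall(r"[a-z']+", text)
    clauses = sum(t in SUBORDINATORS for t in tokens)
    negations = sum(t in NEGATIONS or t.endswith("n't") for t in tokens)
    return 1 + clauses + negations + text.count(",")


simple = "The cat sat on the mat"
hard = ("Given the thermodynamic constraints and evolutionary trade-offs, "
        "why do some cetaceans exhibit prolonged post-reproductive lifespans?")
scores = {"simple": syntactic_depth(simple), "hard": syntactic_depth(hard)}
```

The two example sentences are the low-depth and high-depth examples used earlier in the article; the proxy only needs to rank them in the right order to be useful as a first-pass tag.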

What Works in Practice? Simplicity Wins

Google’s Gemma 3 release showed something surprising: you don’t need a fancy AI system to get most of the benefits. Their "simple curriculum" just sorted data by sentence length and lexical rarity. It delivered 85% of the gains of complex multi-dimensional systems, but with only 15% of the engineering effort.

That’s the sweet spot for most teams. Start small. Sort your data by length. Then by keyword density. Then by domain labels you already have. You don’t need to tag every clause. You just need to make sure the model isn’t drowning in hard problems too soon.

Open-source tools like DataComp, a dataset and toolkit released by MIT-IBM in August 2025 that provides pre-annotated training data with complexity and domain labels, are making this easier. Teams using DataComp cut their setup time by 40%. The dataset includes 10 trillion tokens with 12 annotated dimensions, covering everything from grammatical complexity to factual reliability.

Who’s Doing It Right, and Who’s Falling Behind

The leaders are clear: Meta, Google, NVIDIA. They’ve built internal data pipelines that handle curriculum logic at scale. Their models don’t just get bigger; they get smarter, faster. The rest? Mostly stuck in the old model. Only 17% of Fortune 500 companies use structured curriculum approaches as of December 2025. But that’s changing. Forrester predicts that number will hit 43% by the end of 2026.

The market is waking up. The global AI data optimization tools market hit $2.8 billion in Q4 2025, growing 47% year-over-year. AWS’s DataMixer service now holds 31% of the curriculum optimization segment. Startups like DataHarmonics raised $47 million in October 2025 to build tools that automate data tagging and scheduling.

But here’s the reality: if you’re a small team without a dedicated data engineering group, you’re not going to build this from scratch. You’re going to use a service. Or you’re going to stick with random sampling and accept slower, costlier training.

The Big Debate: Is It Worth It at Trillion-Parameter Scale?

Not everyone is convinced. OpenAI’s Noam Brown argues that at the trillion-parameter level, data quality and sheer quantity dominate. Curriculum learning, he says, becomes noise. Stanford’s Center for Research on Foundation Models offered a balanced take: curriculum learning helps up to 500 billion parameters. Beyond that, you need architectural changes, like better attention mechanisms or memory modules, to make progress.

So what’s the real answer? It depends on your goals.

- If you’re training a 10B-100B model and want to cut costs: yes, curriculum learning is a must.

- If you’re pushing past 500B and have unlimited compute: maybe skip it, unless you’re targeting reasoning-heavy tasks.

- If you care about multilingual performance or scientific accuracy: absolutely use it.

How to Get Started (Without Going Broke)

You don’t need to replicate Meta’s system. Here’s a practical four-phase plan:

- Data annotation: Use open tools like DataComp or label your own data with simple rules: sentence length, number of unique words, domain keywords.

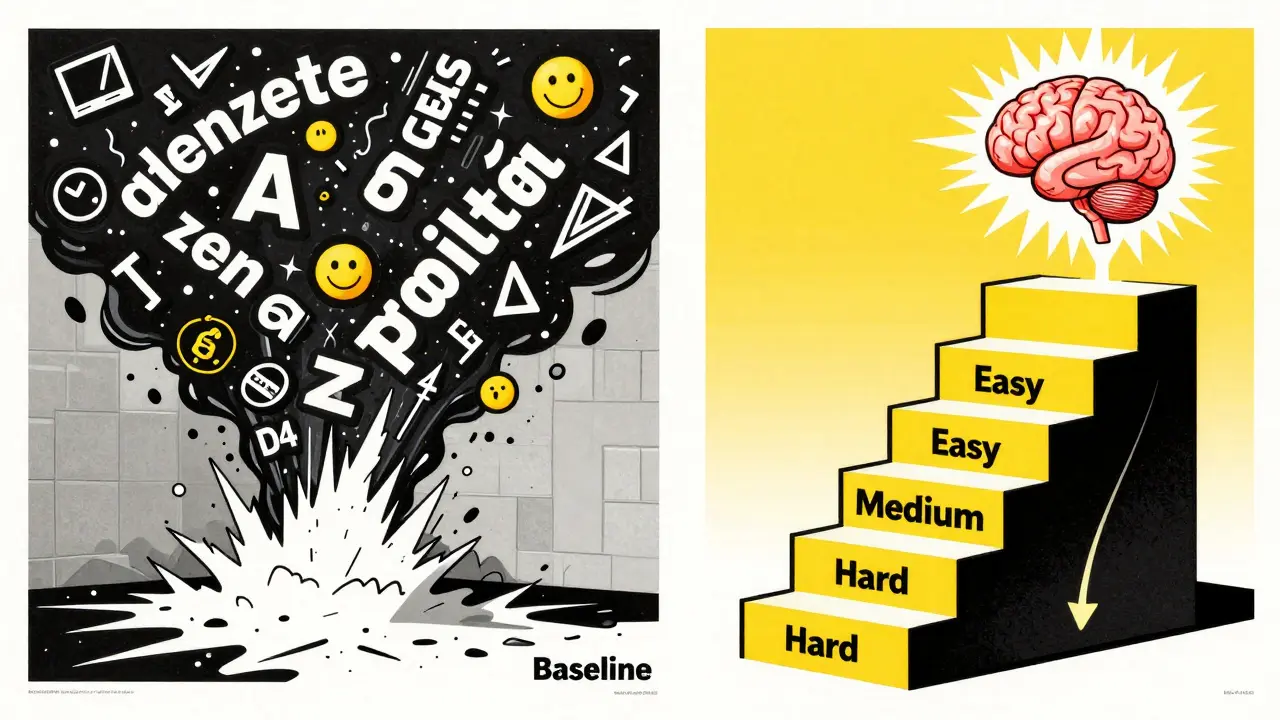

- Curriculum design: Start with three stages: easy (60%), medium (30%), hard (10%). Train 10% of your data per stage.

- Implementation: Integrate with your training pipeline. Most frameworks like Hugging Face Transformers support custom data samplers. You don’t need to rewrite your code.

- Validation: Compare your model’s performance on MATH, GSM8K, and a multilingual benchmark against a baseline trained on random data.
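The annotation and curriculum-design phases can be sketched in a few lines of plain Python. This is an illustration under assumptions, not a reference implementation: `difficulty`, `build_stages`, and `curriculum_order` are hypothetical names, the score (word count plus unique words) is one possible reading of the "simple rules" above, and the 60/30/10 split is interpreted here as each stage's share of the examples when ranked from easiest to hardest.

```python
import random


def difficulty(text: str) -> int:
    """Rule-based score: word count plus number of unique words (an assumed proxy)."""
    words = text.lower().split()
    return len(words) + len(set(words))


def build_stages(texts, shares=(0.6, 0.3, 0.1)):
    """Rank texts easiest first, then cut them into easy/medium/hard stages by share."""
    ranked = sorted(texts, key=difficulty)
    n = len(ranked)
    easy_end = round(n * shares[0])
    medium_end = easy_end + round(n * shares[1])
    return ranked[:easy_end], ranked[easy_end:medium_end], ranked[medium_end:]


def curriculum_order(texts, seed=0):
    """Yield examples stage by stage, shuffled within each stage."""
    rng = random.Random(seed)
    for stage in build_stages(texts):
        stage = list(stage)
        rng.shuffle(stage)  # keep randomness inside a stage, order across stages
        yield from stage


# Ten toy "documents" of increasing length, for illustration only.
texts = [" ".join(f"w{j}" for j in range(i)) for i in range(1, 11)]
easy, medium, hard = build_stages(texts)
order = list(curriculum_order(texts))
```

Shuffling within each stage keeps batches varied while preserving the easy-to-hard progression. The resulting order is what a custom data sampler in your training framework would consume.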

What’s Next? The Future of Curriculum Learning

The field is exploding. In just the first two weeks of December 2025, 37 new papers on LLM curriculum learning hit arXiv. Google’s AutoCurriculum uses reinforcement learning to adjust data mixtures on the fly, giving a 9.3% boost on hard tasks without human input. MIT-IBM just open-sourced DataComp-2026, a 10-trillion-token dataset with full annotations. That’s a game-changer for researchers without the budget to build their own.

By 2027, analysts expect optimized data mixtures to deliver 25-30% of all performance gains in new LLMs. That means the era of just scaling up parameters is ending. The future is smarter data.

The challenge? Standardization. There are 14 competing frameworks for curriculum implementation. No single format dominates. That’s a problem for interoperability. But it’s also an opportunity for teams that can build flexible, well-documented systems now.

Do I need a huge team to implement curriculum learning for LLMs?

No. You don’t need a 50-person team. If you’re working with a 7B-30B parameter model, you can start with simple rules: sort data by sentence length and keyword density. Tools like DataComp give you pre-labeled data, cutting setup time by 40%. The real bottleneck isn’t engineering; it’s data quality. If your dataset isn’t clean or diverse, no curriculum will help.
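The "simple rules" approach fits in one sort call. A minimal sketch, assuming that longer texts and texts denser in domain keywords are harder; `keyword_density`, `simple_curriculum`, and the keyword set are hypothetical and would need to be chosen for your own domain.

```python
def keyword_density(text, keywords):
    """Fraction of words in `text` that appear in the `keywords` set."""
    words = [w.strip(".,;:!?\"'").lower() for w in text.split()]
    if not words:
        return 0.0
    return sum(w in keywords for w in words) / len(words)


def simple_curriculum(texts, keywords):
    """Order texts by word count first, then by keyword density as a tiebreaker."""
    return sorted(texts, key=lambda t: (len(t.split()), keyword_density(t, keywords)))


# Illustrative inputs; the keyword set is an assumption.
keywords = {"entropy", "enthalpy"}
texts = ["Entropy and enthalpy differ.", "The cat sat down.", "Hi there."]
ordered = simple_curriculum(texts, keywords)
```

Using a tuple as the sort key keeps length as the primary signal, so keyword density only separates texts of equal length. Swapping in a weighted sum of the two is an equally reasonable choice.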

Can curriculum learning improve multilingual models?

Yes, but only if you design it right. Many teams fail because they use an English-optimized curriculum and apply it globally. Low-resource languages often have shorter, simpler sentences. If you sort by complexity across all languages at once, you might accidentally cluster them at one end of the schedule, starving them of exposure for the rest of training. The fix? Create separate curriculum tracks per language family, or use domain-aware weighting so no language gets buried.
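One way to keep any language from being concentrated in one part of the schedule is to sort within each language and then interleave the tracks. The sketch below assumes examples arrive as `(language, text)` pairs and uses word count as the difficulty measure; `per_language_curriculum` is a hypothetical helper, and round-robin interleaving is just one simple scheduling choice.

```python
from collections import defaultdict
from itertools import zip_longest


def per_language_curriculum(examples):
    """Sort easy-to-hard inside each language, then interleave languages round-robin."""
    tracks = defaultdict(list)
    for lang, text in examples:
        tracks[lang].append((lang, text))
    for track in tracks.values():
        track.sort(key=lambda item: len(item[1].split()))  # word count as difficulty
    ordered = []
    for row in zip_longest(*tracks.values()):
        ordered.extend(item for item in row if item is not None)
    return ordered


# Toy examples for illustration only.
examples = [
    ("en", "a b c d e f"),
    ("en", "a b"),
    ("en", "a b c d"),
    ("sw", "x"),
    ("sw", "x y z"),
]
schedule = per_language_curriculum(examples)
```

With round-robin interleaving, a smaller language runs out of examples before a larger one does. Teams that need exposure through the end of training could repeat or up-weight the smaller track instead.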

Is curriculum learning better than using higher-quality data?

They’re complementary. High-quality data removes noise: duplicates, toxic content, factual errors. Curriculum learning organizes that clean data to maximize learning efficiency. You need both. A clean dataset with random ordering still wastes potential. A messy dataset with perfect scheduling just trains on garbage faster.

What benchmarks should I use to measure if my curriculum is working?

Focus on reasoning, not recall. Use MATH for math problems, GSM8K for word problems, and HumanEval for code generation. For multilingual models, test on XTREME or XNLI. If your model improves on these but not on simple QA tasks like TriviaQA, your curriculum is doing its job. If nothing changes, your data mix isn’t structured enough.
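The comparison can be automated once both models have been scored. This is a sketch only: `relative_gains`, `curriculum_is_working`, and the 2% `min_gain` threshold are hypothetical, and the scores below are made-up placeholders to show the calculation, not measured results.

```python
# Benchmarks treated as reasoning-focused, following the list above.
REASONING = {"MATH", "GSM8K", "HumanEval"}


def relative_gains(baseline, curriculum):
    """Relative improvement per benchmark; baseline scores must be non-zero."""
    return {name: (curriculum[name] - baseline[name]) / baseline[name]
            for name in baseline}


def curriculum_is_working(baseline, curriculum, min_gain=0.02):
    """True if every reasoning benchmark present improves by at least `min_gain`."""
    gains = relative_gains(baseline, curriculum)
    reasoning = [g for name, g in gains.items() if name in REASONING]
    return bool(reasoning) and all(g >= min_gain for g in reasoning)


# Placeholder accuracies for illustration; replace with your own evaluation output.
baseline_scores = {"MATH": 0.40, "GSM8K": 0.50, "TriviaQA": 0.60}
curriculum_scores = {"MATH": 0.44, "GSM8K": 0.55, "TriviaQA": 0.60}
gains = relative_gains(baseline_scores, curriculum_scores)
verdict = curriculum_is_working(baseline_scores, curriculum_scores)
```

The recall benchmark (TriviaQA here) is reported in `gains` but deliberately ignored by the verdict, matching the advice to focus on reasoning rather than recall.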

How much extra compute does curriculum learning require?

It adds 8-12% to your preprocessing cost, mostly from tagging and sorting. But that’s offset by faster training convergence, often cutting total compute by 15-20%. For a $10 million training run, that’s $1.5 million to $2 million saved. The net gain is positive for most teams.
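A quick way to check the arithmetic for your own budget is below. The `net_saving` function is hypothetical, and the $1 million baseline preprocessing budget is an assumption for illustration, since the article does not state one. The percentages are the ranges quoted above.

```python
def net_saving(training_cost, compute_cut_pct, preprocessing_cost, overhead_pct):
    """Training savings minus the extra preprocessing spend, in dollars."""
    saved = training_cost * compute_cut_pct / 100
    extra = preprocessing_cost * overhead_pct / 100
    return saved - extra


TRAINING_COST = 10_000_000      # the $10 million run from the text
PREPROCESSING_COST = 1_000_000  # assumed baseline preprocessing budget (hypothetical)

# Pessimistic case: smallest compute cut, largest preprocessing overhead.
low = net_saving(TRAINING_COST, 15, PREPROCESSING_COST, 12)
# Optimistic case: largest compute cut, smallest preprocessing overhead.
high = net_saving(TRAINING_COST, 20, PREPROCESSING_COST, 8)
```

Under these assumptions the net saving stays positive across the whole quoted range, because the overhead applies to the much smaller preprocessing budget while the savings apply to the full training run.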

Will curriculum learning become standard in 2026?

Yes, for models under 500B parameters. By 2026, most AI labs will use some form of curriculum. The question won’t be "Should we use it?" but "Which system do we use?" Right now, there’s no standard. That will change fast as open datasets like DataComp-2026 gain traction.

Final Thought: It’s Not About Bigger Models. It’s About Smarter Training.

The race to build bigger LLMs is exhausting. More parameters mean more money, more energy, more carbon. Curriculum learning flips the script. Instead of scaling up, you scale smart. You make the model learn better from the data you already have. If you’re training an LLM today, you’re not just building a model. You’re designing a learning path. And the best learners aren’t the ones who read the most books; they’re the ones who read the right books, in the right order.

Susannah Greenwood

I'm a technical writer and AI content strategist based in Asheville, where I translate complex machine learning research into clear, useful stories for product teams and curious readers. I also consult on responsible AI guidelines and produce a weekly newsletter on practical AI workflows.

6 Comments

It's wild how we've been treating LLM training like brute force weightlifting when it's really more like teaching a child to walk. You don't toss them into a marathon on day one. This whole curriculum idea feels so obvious in hindsight-like realizing you should learn to tie your shoes before trying to run a marathon barefoot. The emotional weight of this shift hits me harder than I expected. We're not just optimizing models, we're respecting their learning rhythm. It's almost poetic.

i just dont get why ppl make this so complicated like why not just use the data u got and train it already like why tag every sentence and make it a whole project i mean come on its just text right like u put in words and out comes answers why overthink it like the model dont care if its easy or hard its just learning words lol

Of course the West is pushing this-curriculum learning sounds like a fancy way to hide how they’re already training models on stolen Indian academic data. Did you know most of the "high-depth" examples in their datasets come from Indian IIT papers? They tag it as "science" and call it innovation. Meanwhile, we’re still stuck with English-only pipelines while our own languages get buried. This isn’t progress-it’s data colonialism with a PhD. And don’t tell me about DataComp-those labels were written by people who don’t even know what "sanskrit meter" means.

ok so i read like half of this and got lost after the part about depth and breadth and freshness like i get the idea but man why does it feel like theyre writing a phd thesis to explain something that could be done with a simple python script sorting by word count? also the 15% speedup sounds cool but what if your dataset is just garbage to begin with like i tried this on some scraped reddit data and it just made the model more sarcastic not smarter lol

This is one of the most thoughtful pieces I’ve read on LLM training in a long time. It’s easy to get caught up in the hype of bigger models, but the real breakthroughs are happening in how we guide learning-not just feed data. I appreciate how you acknowledged the implementation cost too. Many articles act like this is a magic button, but the truth is, it’s a careful, iterative process. For smaller teams, starting with sentence length and keyword density is totally enough. Progress, not perfection. Keep sharing this kind of grounded insight.

Thank you for sharing this detailed overview. It is clear that the approach of organizing training data in a structured manner has significant potential. The analogy to learning to drive is very helpful. I would like to add that while the technical details are important, the underlying principle is simple: learning should be progressive. This is true for humans and for machines alike. The challenge lies in implementation, as you noted. For organizations with limited resources, using open tools like DataComp is a wise and practical step. I believe this method will become standard not because it is flashy, but because it is effective and responsible.