- Home

- AI & Machine Learning

- Hyperparameters That Matter Most in Large Language Model Pretraining

Hyperparameters That Matter Most in Large Language Model Pretraining

Training a large language model isn’t just about throwing more data and compute at the problem. It’s about getting the learning rate just right. One wrong number can mean weeks of training wasted, or a model that never learns to think clearly. In 2025, the most successful teams don’t guess. They use proven scaling laws to set their hyperparameters before training even starts.

Why Learning Rate Is the Most Important Hyperparameter

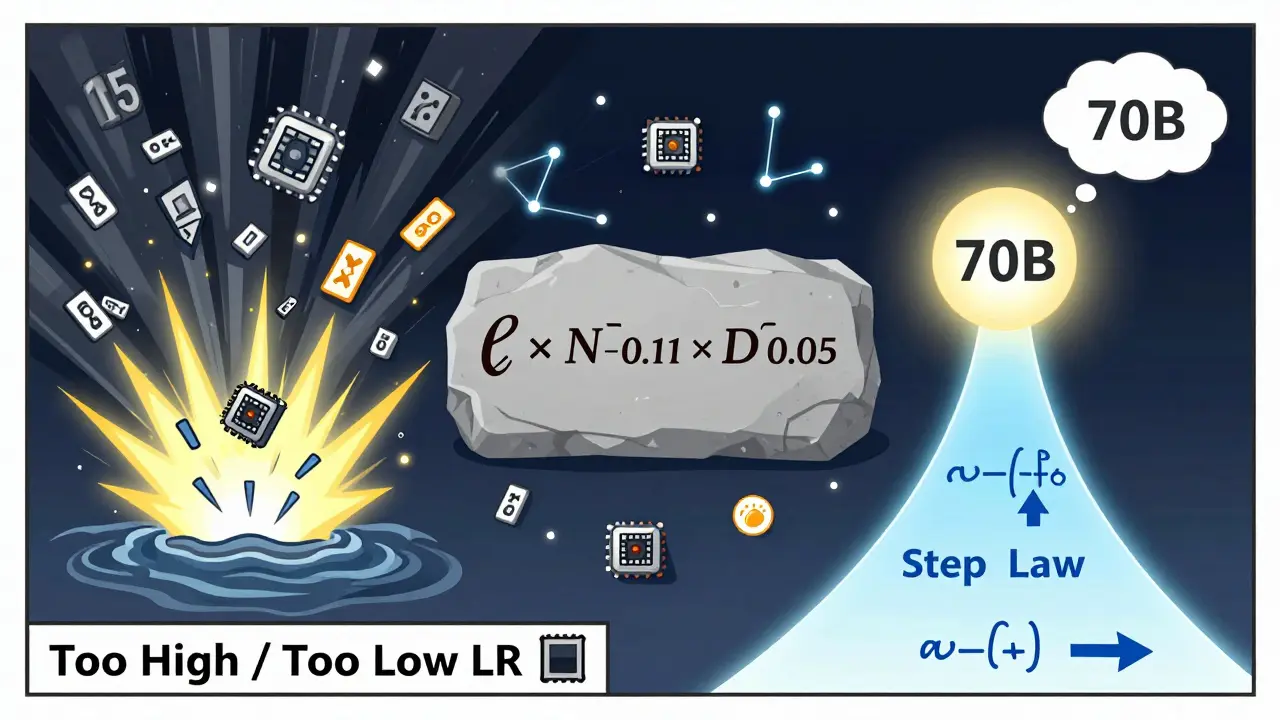

If you only remember one thing about LLM pretraining, make it this: learning rate is king. It controls how big of a step the model takes when adjusting its weights after each batch. Too big, and the model overshoots the best solution-training crashes. Too small, and it crawls toward a subpar result, wasting time and money.

Research from the Step Law framework (arXiv:2503.04715v1), tested across 3,700 model configurations and over a million H800 GPU hours, found that learning rate alone explains up to 52% of performance variance in final model perplexity. The formula? Optimal learning rate scales as η ∝ N-0.11 × D0.05, where N is model parameters and D is dataset size in tokens. That means if you double your model size, you should lower the learning rate by about 7%. If you double your data, you can raise it slightly-by just 3.5%.

Real-world impact? A 5% deviation from the optimal learning rate adds 0.2-0.5 perplexity points to the final loss. That might sound small, but in a 70B-parameter model, that’s the difference between a model that writes coherent paragraphs and one that hallucinates facts with confidence. MoE (Mixture-of-Experts) models are even more sensitive-optimal rates can vary by up to 37% depending on how many experts are active per token.

Batch Size Isn’t What You Think

Most people assume bigger batch sizes = better training. That’s outdated. Batch size affects how often the model updates its weights and how stable those updates are. But unlike learning rate, batch size doesn’t depend on model size-it depends on data.

The Step Law found batch size follows B ∝ D0.75. For a 100 billion token dataset:

- 7B model → optimal batch size: 2,048 tokens

- 70B model → optimal batch size: 8,192 tokens

Notice: the model got 10x bigger, but the batch size only quadrupled. That’s because more parameters don’t need more data per update-they just need better gradients. If you use a batch size that’s too large, you lose fine-grained learning signals. Too small, and noise overwhelms the signal. The sweet spot is determined by how much data you have, not how big your model is.

Older methods like Kaplan et al. (2020) assumed batch size should scale linearly with model size. That led to 23-31% higher training loss on non-standard datasets. The Step Law corrected that. It’s not just more accurate-it’s more robust. Across 14 different data types (code, multilingual text, scientific papers), its predictions stayed within 8-12% error. Other methods fell apart, with errors jumping to 25-40%.

The Hidden Trap: Minimum Learning Rate

Most training scripts use a decay schedule-start high, then reduce the learning rate over time. The default? Cut it to 1/100th of the peak value by the end. That sounds safe. But it’s not.

The Step Law research found that setting the minimum learning rate too low prevents the model from settling into the best possible solution. When the peak learning rate is high (common in large models), a final rate of η/100 is often too small to escape shallow local minima. The result? 12-18% higher final loss compared to models that keep a fixed minimum rate.

What works better? Set a fixed minimum learning rate based on your dataset size. For most cases, η_min = 1e-5 to 5e-5 works well. Don’t decay it to 1e-7. That’s like slowing a car to 1 mph right before the finish line-you’ll never cross it cleanly.

This mistake is everywhere. Hugging Face’s default configs still use the old decay pattern. Users report “training seems fine but performance is plateauing”-that’s the symptom. Fix the min_lr, and perplexity drops.

What About Model Depth and Architecture?

Model depth-how many transformer layers you stack-isn’t a hyperparameter you tune directly. It’s a meta-parameter that determines total parameter count. Each additional layer adds 0.5-1.2 billion parameters, depending on hidden size and attention heads.

So if you increase depth, you’re really increasing N. And since learning rate depends on N, you need to adjust η accordingly. A 128-layer model doesn’t need a different learning rate than a 64-layer model with the same total parameters. What matters is the total number of weights, not how they’re distributed.

MoE models add another layer. Sparsity-the percentage of experts activated per token-changes how gradients flow. A 70B MoE model with 20% sparsity behaves differently than one with 80% sparsity, even if total parameters are the same. That’s why Step Law’s formula includes architecture-aware corrections: MoE models need lower learning rates than dense models of the same size.

Why Grid Search Is Dead

Trying every combination of learning rate, batch size, and decay schedule? That’s grid search. It’s slow. For a single 7B model, you’d need 200 configurations. At 100 GPU hours per run? That’s 20,000 hours. Over two years of continuous training.

Step Law cuts that to three runs: optimal, optimal +15%, optimal -15%. Total cost? 1,500-2,000 GPU hours. That’s 90% less compute. Developers on Reddit’s r/MachineLearning report cutting hyperparameter search from 3-4 weeks to 2-3 days. Final perplexity improved by 4-7%.

And it’s not just faster-it’s more reliable. The Porian et al. (2024) method failed in 17 out of 22 cases when data-to-parameter ratios dropped below 10,000. Step Law didn’t. It worked across 12 different hardware setups with just 6.3% mean error on learning rate predictions.

What’s Changing in 2025

Tools are catching up. Optuna 4.2, released in April 2025, now has built-in Step Law integration. You feed it your model size and dataset size, and it suggests optimal starting points. No more guessing.

Google DeepMind’s AutoStep, released in May 2025, goes further. It learns to adjust scaling laws on the fly during training. Early tests show 92% fewer search costs. But it’s still experimental-requires 3-5x more compute for real-time adaptation.

Regulations are too. The EU AI Office now requires documentation of hyperparameter choices for models over 10B parameters. Why? Because bad settings aren’t just inefficient-they’re unsafe. A model that doesn’t converge properly might generate harmful outputs without warning.

What You Should Do Today

Here’s your practical checklist:

- Calculate your model size (N) and dataset size (D) in tokens.

- Use η = 1e-3 × N-0.11 × D0.05 to estimate your starting learning rate.

- Set batch size B = 1024 × D0.75 / 100B (scale from the 100B token baseline).

- Set min_lr to 1e-5, not η/100.

- Run three training runs: η, η×1.15, η×0.85. Pick the one with lowest validation loss.

Don’t overthink it. You don’t need to tune weight decay, dropout, or optimizer type yet. Focus on learning rate and batch size. They’re the 80/20 rule.

When Scaling Laws Don’t Apply

Dr. Emily Chen of Stanford warns: scaling laws assume your data is clean and homogeneous. If you’re training on medical records, legal documents, or code with heavy domain jargon, optimal rates can shift by 15-25%. In those cases, start with Step Law, then run a small grid search on ±10% around the predicted values.

Also, multimodal models-those trained on text + images or audio-are still outside the scope of current laws. Early research (arXiv:2509.01440) shows 22-35% lower accuracy for hyperparameter predictions in those cases. Stick to proven methods until new laws emerge.

Final Thought

Hyperparameter optimization used to be the black box of LLM training. Now it’s a science. You don’t need AI to tune your model-you need math. The Step Law isn’t magic. It’s the result of over a million GPU hours, thousands of experiments, and real-world failures. Use it. Your time, your budget, and your model’s performance will thank you.

What’s the most important hyperparameter in LLM pretraining?

Learning rate is the most important. It directly controls how the model updates its weights during training. Too high causes instability; too low slows progress. Research shows it accounts for over 50% of performance variance in final model quality, more than batch size, optimizer type, or regularization.

How do I calculate the right learning rate for my model?

Use the Step Law formula: η = 1e-3 × N-0.11 × D0.05, where N is your model’s parameter count and D is your dataset size in tokens. For example, a 7B model trained on 50B tokens would have η ≈ 0.0006. Always verify with a small grid search around ±15%.

Should I increase batch size as my model gets bigger?

No. Batch size depends on dataset size, not model size. The formula is B ∝ D0.75. For a 100B token dataset, a 7B model works best with ~2,048 tokens per batch, while a 70B model needs ~8,192. Scaling batch size with model size leads to degraded performance and wasted compute.

Is it okay to use the default learning rate from Hugging Face?

Only if your model and dataset match their training setup exactly. Most default values are tuned for 10B-100B token datasets with standard transformer architectures. If you’re using a smaller dataset, a different model size, or domain-specific data, the defaults will likely be suboptimal. Always recalculate using Step Law.

What’s the best way to avoid training instability?

Start with Step Law’s predicted learning rate, then run three short trials: the predicted value, 15% higher, and 15% lower. Watch for the “convergence cliff”-if loss spikes immediately, you’ve gone too high. Also, set a fixed minimum learning rate (1e-5 to 5e-5) instead of decaying to η/100. This prevents the model from getting stuck in poor minima.

Can I use AI to auto-tune hyperparameters?

Yes, but it’s not always better. LLM-assisted tuning (like Google’s AutoStep) can reduce search cost by 90%, but it needs high-quality training data and still requires validation. For most teams, Step Law + a 3-point grid search is faster, cheaper, and more reliable. Save AI tuning for when you’re optimizing dozens of models at scale.

Susannah Greenwood

I'm a technical writer and AI content strategist based in Asheville, where I translate complex machine learning research into clear, useful stories for product teams and curious readers. I also consult on responsible AI guidelines and produce a weekly newsletter on practical AI workflows.

Popular Articles

2 Comments

Write a comment Cancel reply

About

EHGA is the Education Hub for Generative AI, offering clear guides, tutorials, and curated resources for learners and professionals. Explore ethical frameworks, governance insights, and best practices for responsible AI development and deployment. Stay updated with research summaries, tool reviews, and project-based learning paths. Build practical skills in prompt engineering, model evaluation, and MLOps for generative AI.

Categories

Featured Posts

Wow, finally someone who gets it. I’ve seen so many teams waste months because they just copy-pasted Hugging Face’s defaults. I worked on a 47B MoE model last year-used their suggested min_lr of 1e-7 and wondered why we kept plateauing at 4.2 perplexity. Changed it to 5e-5, retrained for 3 days, and dropped to 3.7. No magic, just math. Why do people still treat this like witchcraft?

Step Law? How quaint. I suppose you’re also using Newtonian mechanics to calculate orbital trajectories. This formula assumes linearity in non-linear systems, ignores gradient noise covariance, and completely disregards the curvature of the loss landscape. The 52% variance claim is statistically dubious-correlation isn’t causation, especially when you’ve cherry-picked 3,700 runs from a biased dataset. Real researchers use Bayesian optimization with adaptive priors, not back-of-the-envelope scaling laws from arXiv.