Latency and Cost in Multimodal Generative AI: How to Budget Across Text, Images, and Video

Most companies think adding images and video to their AI chatbots will make them smarter. It does. But it also makes them expensive and slow. If you’re running a multimodal AI system right now and your cloud bill just jumped 400%, you’re not alone. Enterprises are spending thousands more per month than they planned, and users are waiting 15 seconds for a response when they expected under a second. The problem isn’t the tech. It’s budgeting.

Why Multimodal AI Costs So Much More Than Text-Only AI

A text-only LLM like GPT-3.5 processes a single sentence in about 500 milliseconds and uses maybe 100 tokens. Now add a high-res image. That same request suddenly needs 2,000+ tokens just to represent the picture, and that’s before the AI even starts answering. Each token costs money. Each one adds latency. And multimodal models don’t simply read text and images in one step: a vision encoder typically processes the image first, and its output is then combined with the text for the language model. That means extra model work on every request that includes an image.

According to Chameleon Cloud’s 2025 benchmarks, processing a single 1080p image consumes 5GB of GPU memory. A text-only prompt? Less than 100MB. That’s a 50x difference in memory use. And memory equals cost. Cloud GPU instances are billed by the hour, so image-heavy workloads that hold GPUs longer cost more. NVIDIA’s A100s, common in enterprise setups, cost $3.50 per hour. At that rate, $1,750 buys 500 GPU-hours, so a system handling 500 images a day reaches that figure only if each image ties up about an hour of provisioned GPU time, which is what happens when always-on instances sit mostly idle. Add video, and that number climbs even higher.

Latency Isn’t Just a Tech Problem: It’s a User Experience Problem

Users don’t care how many GPUs you’re using. They care if their AI assistant responds in 1 second or 15. A 2024 study by nOps found that multimodal systems with unoptimized image processing had a P95 latency of 19.49 seconds. That’s an eternity for someone waiting on a chat reply. When engineers at a healthcare startup cut image token counts from 2,048 to 400, response times dropped by 78%. That’s not a tweak. It’s a transformation.

The bottleneck isn’t the model. It’s the token explosion. Vision encoders split an image into small patches, and each patch becomes a token, so the token count grows with resolution. Even with compression, you’re still looking at 1,500-2,500 tokens per high-resolution image. Text? 50-100 tokens for a full paragraph. That’s why your chatbot works fine with text but freezes when someone uploads a photo of their broken appliance.

Modality-Specific Budgeting: Stop Treating All Inputs the Same

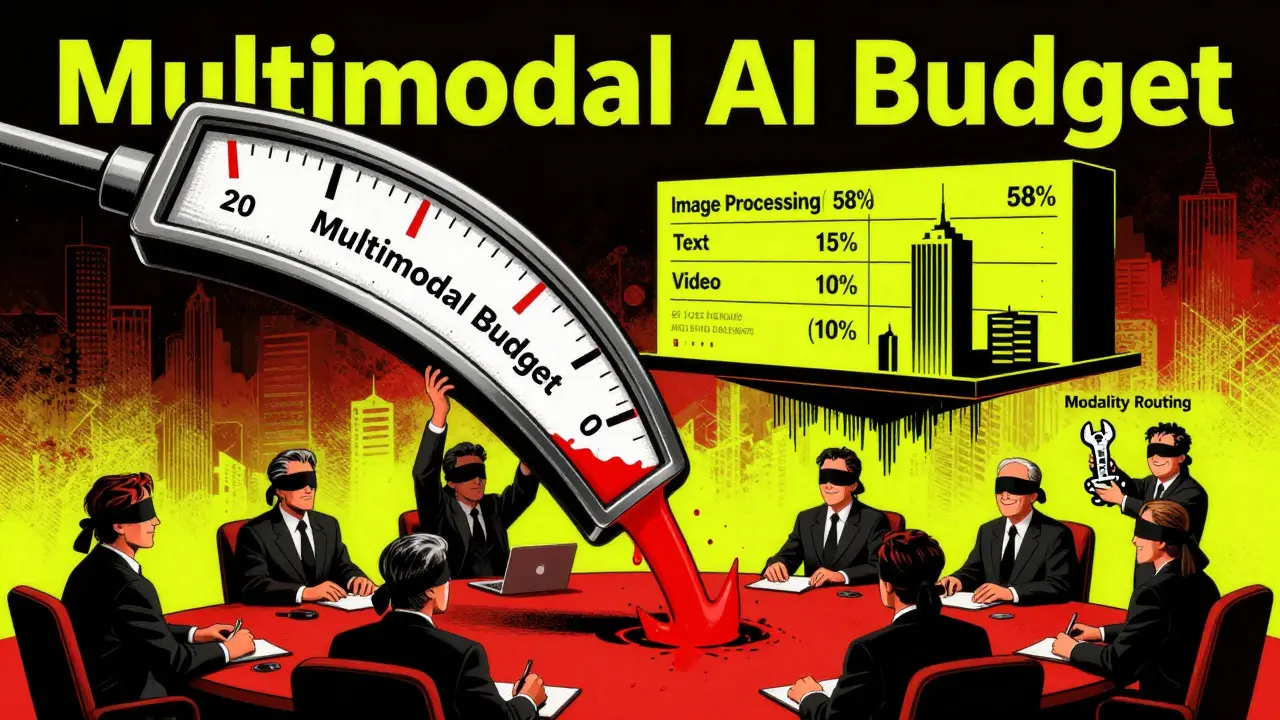

The biggest mistake companies make? Treating text, images, and audio as equal in cost and priority. They’re not. Text is cheap. Video is expensive. Audio sits in the middle. A retail chatbot that analyzes product images? Image processing eats 58% of your total multimodal cost, according to Binadox. But if your system is only answering FAQs via text, why are you paying for high-res image encoders? Successful teams now use modality-aware budgeting. That means:

- Only enable image processing when the user actually uploads a file

- Use low-resolution thumbnails for initial image analysis, not full HD

- Drop video processing unless it’s critical; most use cases don’t need it

- Set hard limits on token counts per modality
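
The hard limits in the list above can be sketched as a small cap table applied before any model is called. This is only an illustration under stated assumptions: the `Request` class, the `TOKEN_CAPS` name, and every cap value except the 800-token image limit (which this article suggests in its budgeting plan) are hypothetical placeholders, and a cap of 0 is used here to mean "modality disabled".

```python
from dataclasses import dataclass, asdict

# Hypothetical caps per modality. Only the 800-token image cap comes from the
# article; the other numbers are placeholders. 0 means the modality is disabled.
TOKEN_CAPS = {"text": 1000, "image": 800, "audio": 600, "video": 0}


@dataclass
class Request:
    """Token counts requested per modality (hypothetical structure)."""
    text: int = 0
    image: int = 0
    audio: int = 0
    video: int = 0


def apply_caps(req: Request, caps: dict = TOKEN_CAPS) -> dict:
    """Return the token count that will actually be processed per modality."""
    return {
        modality: min(count, caps.get(modality, 0))
        for modality, count in asdict(req).items()
    }


if __name__ == "__main__":
    # An image-heavy request with a video attached: image is trimmed, video dropped.
    print(apply_caps(Request(text=50, image=2048, video=5000)))
```

Applying the caps at the request boundary keeps one viral spike from turning directly into GPU time, at the price of truncating or downscaling some inputs.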

Real-World Cost Breakdown: What You’re Really Paying For

Here’s what a typical enterprise multimodal system looks like in 2025:

| Component | Cost Contribution | Latency Impact |
|---|---|---|
| Text Processing | 15% | Low (under 500ms) |
| Image Processing | 58% | High (8-15 seconds) |
| Audio Processing | 12% | Medium (2-4 seconds) |
| Video Processing | 10% | Very High (15-30+ seconds) |
| Model Loading (Cold Start) | 5% | 8-12 seconds per first request |
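
To turn the percentages in the table into dollars for your own bill, a few lines of arithmetic are enough. The sketch below uses the table's shares as-is; the `allocate` function name and the $10,000 example bill are assumptions for illustration, and your real split will differ.

```python
# Cost shares copied from the table above (they sum to 100%).
COST_SHARE = {
    "text": 0.15,
    "image": 0.58,
    "audio": 0.12,
    "video": 0.10,
    "cold_start": 0.05,
}


def allocate(monthly_spend_usd: float) -> dict:
    """Split a monthly bill across components using the table's shares."""
    return {name: round(monthly_spend_usd * share, 2) for name, share in COST_SHARE.items()}


if __name__ == "__main__":
    # Hypothetical $10,000 monthly bill.
    for component, dollars in allocate(10_000).items():
        print(f"{component:>10}: ${dollars:,.2f}")
```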

How Top Teams Are Cutting Costs Without Sacrificing Accuracy

You don’t need to give up multimodal features to save money. You just need to optimize them.

- Token reduction: Chameleon Cloud showed that reducing image tokens from 2,048 to 400 cut costs by 14% and latency by 78%, with only a 1.2% drop in diagnostic accuracy for medical images.

- Quantization: Switching from 16-bit to 8-bit precision roughly halves the memory needed for model weights (going from 32-bit to 8-bit is the 4x case) and reduces compute cost by 30-60%. NVIDIA’s TensorRT supports INT8 inference out of the box.

- Modality routing: Use a lightweight classifier to detect if a request even needs images. If it’s just “What’s your return policy?”, route it to the text-only model. Save the heavy model for “I uploaded a photo of this defect. What’s wrong?”

- Edge processing: For mobile apps, do basic image preprocessing on the phone before sending data to the cloud. Reduce token load by 50% before it even hits your server.
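
Modality routing, from the list above, can be prototyped before you train any classifier. The sketch below is a keyword heuristic standing in for the "lightweight classifier"; the `route` function, the `IMAGE_HINTS` list, and the two route names are all hypothetical, and a real system would likely replace the keyword check with a small trained model.

```python
# Hypothetical hint words suggesting the user is asking about an attachment.
IMAGE_HINTS = ("photo", "picture", "image", "screenshot", "attached", "uploaded")


def route(message: str, has_attachment: bool) -> str:
    """Pick a model tier: 'multimodal' only when an image is present and referenced."""
    if not has_attachment:
        return "text-only"
    lowered = message.lower()
    if any(hint in lowered for hint in IMAGE_HINTS):
        return "multimodal"
    # Attachment present but never mentioned: try the cheap path first.
    return "text-only"


if __name__ == "__main__":
    print(route("What's your return policy?", has_attachment=False))
    print(route("I uploaded a photo of this defect. What's wrong?", has_attachment=True))
```

The design choice here is to default to the cheap path and escalate only on evidence, which matches the article's advice to save the heavy model for requests that actually need it.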

What Happens When You Don’t Budget Properly

The stories are everywhere. A retail chain launched a visual search feature for clothing. They thought it would boost sales. Instead, they spent $28,000 a month on image processing and saw zero increase in conversions. ROI was negative. They shut it down.

Another startup built a customer service bot that accepted voice and image inputs. They didn’t cap token usage. One viral TikTok post led to 10,000 image uploads in 48 hours. Their AWS bill hit $45,000 in one month. They had to shut down for two weeks.

This isn’t hypothetical. Gartner’s 2025 risk report says 68% of multimodal AI projects fail within 12 months, not because the tech doesn’t work, but because the cost exploded.

Where the Market Is Headed: What to Expect by 2026

By 2026, 60% of enterprises will use modality-specific budgeting, according to Gartner. That means:

- AI platforms will auto-detect modality type and adjust resource allocation in real time

- Cloud providers will offer “multimodal cost optimizer” tools (AWS already has one)

- Models will be trained to use fewer tokens without losing accuracy

- Regulations like the EU AI Act will require cost transparency for high-risk systems

Getting Started: Your 5-Step Budgeting Plan

If you’re starting a multimodal project, or already running one, here’s what to do:

- Measure everything: Track token usage per modality. Use tools like AWS CloudWatch or Hugging Face’s inference API logs.

- Set hard caps: Limit image tokens to 800 max. Limit audio to 15 seconds. Cut video unless it’s essential.

- Test with real data: Don’t use sample images. Use your actual customer uploads. See what’s actually being sent.

- Build a fallback: If a request has an image, try processing it as text first. Can you answer without the image? If yes, skip the heavy model.

- Monitor monthly: Cost spikes happen fast. Set alerts for 20%+ increases in GPU usage.
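
The 20% alert from the last step is simple to express in code. This is a minimal sketch assuming you already export GPU usage as one number per period; the `spike_alerts` name and the sample figures are hypothetical, and in practice you would more likely configure the same rule in your cloud provider's alerting tool.

```python
def spike_alerts(gpu_hours_by_period: list, threshold: float = 0.20) -> list:
    """Return indexes of periods whose usage rose by at least `threshold`
    (0.20 = 20%) compared with the previous period."""
    alerts = []
    for i in range(1, len(gpu_hours_by_period)):
        previous, current = gpu_hours_by_period[i - 1], gpu_hours_by_period[i]
        if previous > 0 and (current - previous) / previous >= threshold:
            alerts.append(i)
    return alerts


if __name__ == "__main__":
    # Hypothetical monthly GPU-hours: only the jump from 110 to 140 crosses 20%.
    print(spike_alerts([100, 110, 140, 150]))
```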

Frequently Asked Questions

Why is image processing so much more expensive than text in multimodal AI?

Images require thousands of tokens to represent details that text expresses in dozens. A single 1080p image can generate 2,000+ tokens, while a paragraph of text uses 50-100. Each token needs memory, compute, and time. That’s why image processing uses 5-50x more resources than text, even when the model is the same.
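
A rough way to see where those token counts come from is to count patches. The sketch below assumes one token per patch and an effective patch size of 28 pixels; both are illustrative assumptions, since real models resize, tile, or merge patches in model-specific ways, so treat the numbers as order-of-magnitude estimates only.

```python
def estimate_image_tokens(width: int, height: int, patch: int = 28) -> int:
    """Estimate image tokens as the number of patch-sized tiles covering the image.

    `patch` is an assumed effective patch size in pixels, not any specific
    model's setting.
    """
    cols = -(-width // patch)   # ceiling division
    rows = -(-height // patch)
    return cols * rows


if __name__ == "__main__":
    for w, h in [(1920, 1080), (640, 360), (320, 240)]:
        print(f"{w}x{h}: ~{estimate_image_tokens(w, h)} tokens")
```

Under these assumptions a 1080p frame lands in the low thousands of tokens while a 320x240 thumbnail needs roughly a hundred, which is why resolution is the first lever to pull.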

Can I use cheaper GPUs to reduce multimodal AI costs?

Yes, but with limits. For low-volume, non-real-time tasks, L4 or A10 GPUs can handle 7B-parameter models at 1/3 the cost of A100s. But for high-throughput systems (like customer service bots processing 100+ images/hour), you’ll hit performance walls. The savings on hardware are offset by slower response times and longer queues. It’s a trade-off: cheaper hardware means slower responses for users. Focus on optimization before changing hardware.
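
The trade-off is easier to reason about as cost per image rather than cost per hour. The sketch below uses the article's $3.50/hour A100 rate and the "1/3 the cost" figure for the cheaper GPU; the per-image GPU times (10 and 25 seconds) are made-up placeholders, and the calculation counts only busy GPU time, so idle always-on instances would add to it.

```python
def cost_per_image(hourly_rate_usd: float, gpu_seconds_per_image: float) -> float:
    """Cost of the GPU time one image occupies, ignoring idle capacity."""
    return hourly_rate_usd * gpu_seconds_per_image / 3600


def daily_cost(hourly_rate_usd: float, gpu_seconds_per_image: float, images_per_day: int) -> float:
    """Busy-time GPU cost per day for a given image volume."""
    return cost_per_image(hourly_rate_usd, gpu_seconds_per_image) * images_per_day


if __name__ == "__main__":
    a100_rate = 3.50             # from the article
    cheaper_rate = a100_rate / 3  # "1/3 the cost", from the article
    # Hypothetical per-image GPU times: the cheaper GPU is assumed to be slower.
    print(f"A100:    ${daily_cost(a100_rate, 10, 500):.2f}/day")
    print(f"Cheaper: ${daily_cost(cheaper_rate, 25, 500):.2f}/day")
```

If the cheaper GPU takes more than three times as long per image, its hourly discount is gone, which is the performance wall described above.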

Is video worth the cost in multimodal AI systems?

Almost never. Video adds 4-10x more data than images. Most use cases, like product reviews or customer support, don’t need motion. Still images work 90% of the time. If you’re building a system for surveillance or autonomous vehicles, then video makes sense. For retail, healthcare, or service bots, video is a cost center, not a value driver.

How do I know if my multimodal AI is over-budgeted?

Check three things: (1) Is your monthly GPU spend over $10,000 for fewer than 1,000 image uploads? (2) Do users complain about slow responses? (3) Are you processing images for requests that don’t need them? If you answered yes to any, you’re over-budgeted. Start by disabling image processing on text-only queries. That alone often cuts costs by 30-50%.
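
The three checks can be written down as a tiny function so they run against your own metrics every month. This is a sketch: the function name, parameter names, and signal labels are hypothetical, and the thresholds are simply the ones quoted in the answer above.

```python
def over_budget_signals(
    gpu_spend_usd: float,
    image_uploads: int,
    slow_response_complaints: int,
    images_processed_on_text_queries: int,
) -> list:
    """Return which of the three over-budget signals fire for one month."""
    signals = []
    if gpu_spend_usd > 10_000 and image_uploads < 1_000:
        signals.append("high spend for low image volume")
    if slow_response_complaints > 0:
        signals.append("users report slow responses")
    if images_processed_on_text_queries > 0:
        signals.append("image processing on requests that don't need it")
    return signals


if __name__ == "__main__":
    # Hypothetical month: $12k GPU spend, 800 uploads, 3 complaints.
    print(over_budget_signals(12_000, 800, 3, 0))
```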

What’s the future of multimodal AI cost optimization?

The future is in smarter token use. New models are being trained to compress visual data into fewer tokens without losing meaning. By late 2026, we’ll see systems that use 70% fewer image tokens than today, with no accuracy loss. Cloud providers will also start billing based on effective tokens, not raw input. That means you’ll pay for what the AI actually uses, not what you send.

Next Steps: What to Do Today

If you’re running a multimodal AI system:

- Log into your cloud dashboard. Find your token usage by modality.

- Look for spikes. Did costs jump after a marketing campaign? That’s your red flag.

- Turn off image processing for 24 hours. See if users notice. If not, you’re paying for unnecessary power.

- Try reducing image resolution to 320x240. Most models still work fine.

- Start with text-only. Prove the use case works before adding images.

- Build in token limits from day one. Don’t wait until your bill hits $20,000.

- Ask: “What’s the business value of this image?” If you can’t answer, don’t enable it.
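
For the resolution step in the list above, the only calculation involved is picking target dimensions that fit the limit without distorting the image. The sketch below computes those dimensions with the standard library; the `fit_within` name is hypothetical, and the actual resampling would be done by whatever imaging library you already use, which is not shown here.

```python
def fit_within(width: int, height: int, max_w: int = 320, max_h: int = 240) -> tuple:
    """Return (width, height) scaled down to fit max_w x max_h, keeping the
    aspect ratio. Images that are already small enough are left unchanged."""
    scale = min(max_w / width, max_h / height, 1.0)
    return max(1, round(width * scale)), max(1, round(height * scale))


if __name__ == "__main__":
    for size in [(1920, 1080), (1000, 3000), (200, 100)]:
        print(size, "->", fit_within(*size))
```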

Susannah Greenwood

I'm a technical writer and AI content strategist based in Asheville, where I translate complex machine learning research into clear, useful stories for product teams and curious readers. I also consult on responsible AI guidelines and produce a weekly newsletter on practical AI workflows.

5 Comments

About

EHGA is the Education Hub for Generative AI, offering clear guides, tutorials, and curated resources for learners and professionals. Explore ethical frameworks, governance insights, and best practices for responsible AI development and deployment. Stay updated with research summaries, tool reviews, and project-based learning paths. Build practical skills in prompt engineering, model evaluation, and MLOps for generative AI.

Man, this hit home. We just went from $2k to $18k/month on AWS because someone thought 'let's add image search' without checking the token costs. We didn't even have a single user upload a photo for two weeks. Just pure waste. Started capping images at 400 tokens and dropped to $4.5k. No one noticed the difference in answers. Turned out most people just wanted to type 'my shirt is too tight' anyway.

It's not about the tech. It's about the human illusion that more data means more intelligence. We treat pixels like wisdom. But a thousand tokens of a broken phone screen don't make the AI understand brokenness. It just sees noise. And we pay for noise like it's insight.

Let me just say this: if your multimodal AI is costing more than your payroll, you’re not building AI, you’re building a financial hemorrhage. And no, ‘but users love it!’ doesn’t cut it when your CFO is crying into their coffee. We cut video processing entirely. No one missed it. People upload videos because they think it’s ‘cool,’ not because it’s useful. Sad, but true.

so i tried lowering the image res to 320x240 and honestly? it still works fine. like, my users didnt even notice. i was scared theyd be mad but nope. just chillin. also turned off video for now. saved like 60% and my boss actually smiled. weird right? maybe we were just overdoing it. also typoed ‘resolusion’ but you get it lol

It’s not just about cost; it’s about responsibility. You’re not just burning GPU credits; you’re burning energy, and that energy comes from power plants that are already overburdened. If your ‘innovation’ requires more electricity than a small town, you’re not a tech pioneer, you’re an environmental liability. And if your users can’t get a response in under two seconds, you’ve already failed them. Stop pretending this is progress. It’s just excess dressed up as intelligence.