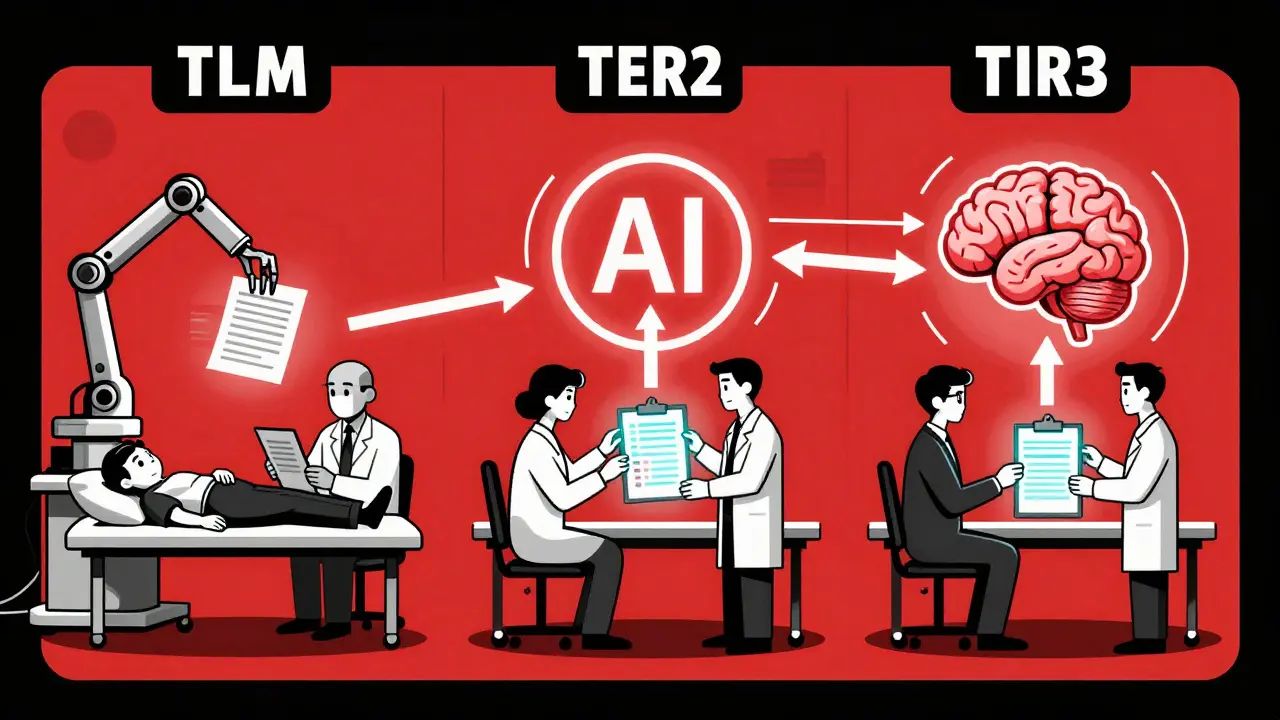

Human-in-the-Loop Evaluation Pipelines for Large Language Models

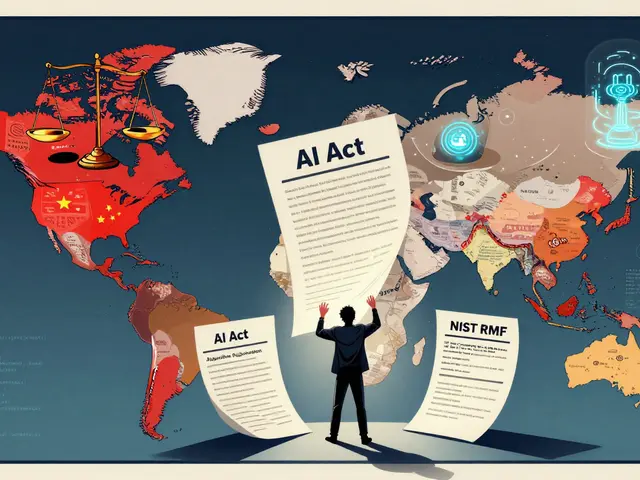

Human-in-the-loop evaluation pipelines combine the speed of automated evaluation with human judgment to help ensure that large language models produce accurate, safe, and fair outputs. Learn how tiered systems cut review time while improving quality.