Safety Layers in Generative AI: Content Filters, Classifiers, and Guardrails Explained

Safety layers in generative AI, such as content filters, classifiers, and guardrails, are essential for preventing harmful outputs, blocking attacks, and protecting data. Without them, AI systems can become unpredictable and dangerous.

Security and Compliance Considerations for Self-Hosting Large Language Models

Self-hosting large language models gives organizations full control over data and compliance, but requires robust security, continuous monitoring, and deep expertise. Learn what it takes to do it right.

Training Data Poisoning Risks for Large Language Models and How to Mitigate Them

Training data poisoning lets attackers corrupt AI models with small amounts of malicious data, planting hidden backdoors and triggering dangerous outputs. Learn how it works, see real-world examples, and explore proven ways to defend your models.

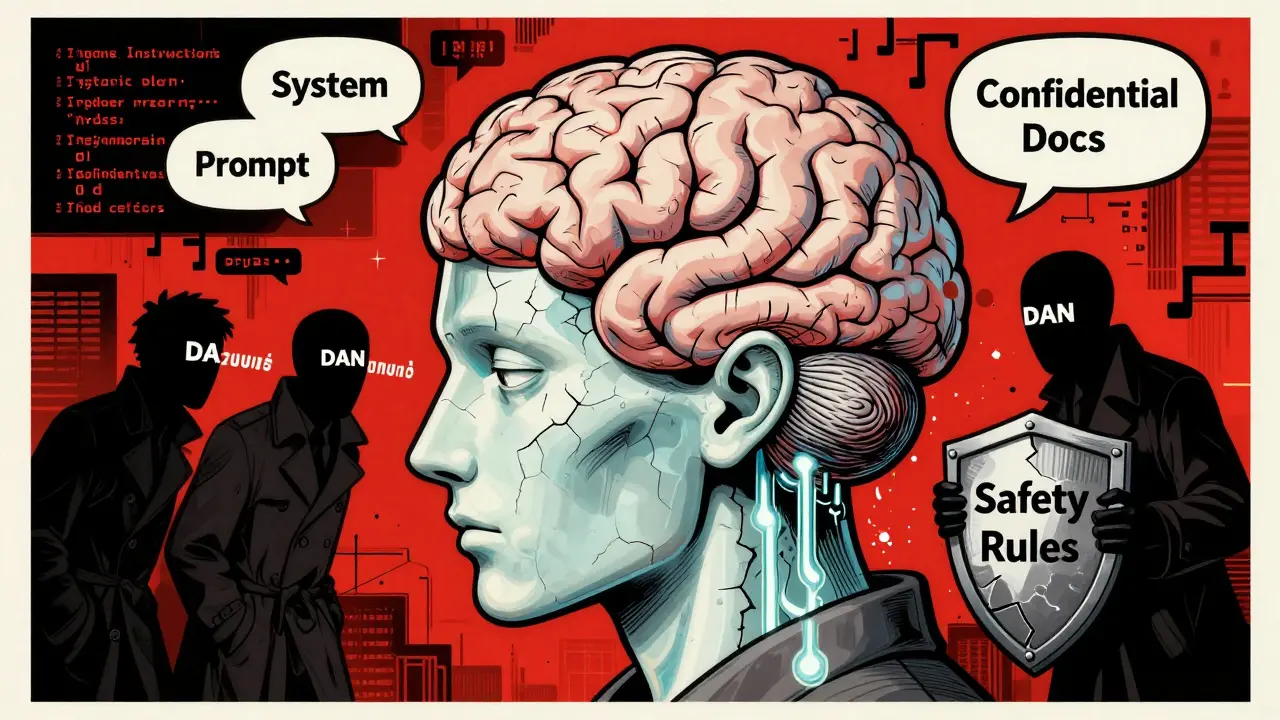

Prompt Injection Risks in Large Language Models: How Attacks Work and How to Stop Them

Prompt injection attacks trick AI models into ignoring their rules, which can expose sensitive data and enable code execution. Learn how these attacks work, which systems are at risk, and which defenses actually work in 2025.