Domain-Specialized Code Models: Why Fine-Tuned AI Outperforms General LLMs for Programming

When you’re writing code and your AI assistant keeps suggesting wrong variable types, misusing frameworks, or generating syntax that looks right but breaks at runtime, you’re not dealing with a bug; you’re dealing with the wrong tool. General-purpose AI models like GPT-4 or Claude 3 are impressive at chatting, summarizing articles, or writing emails. But when it comes to code, they’re often just guessing. That’s why developers are increasingly turning to domain-specialized models built and fine-tuned specifically for programming tasks.

Why General LLMs Fall Short on Code

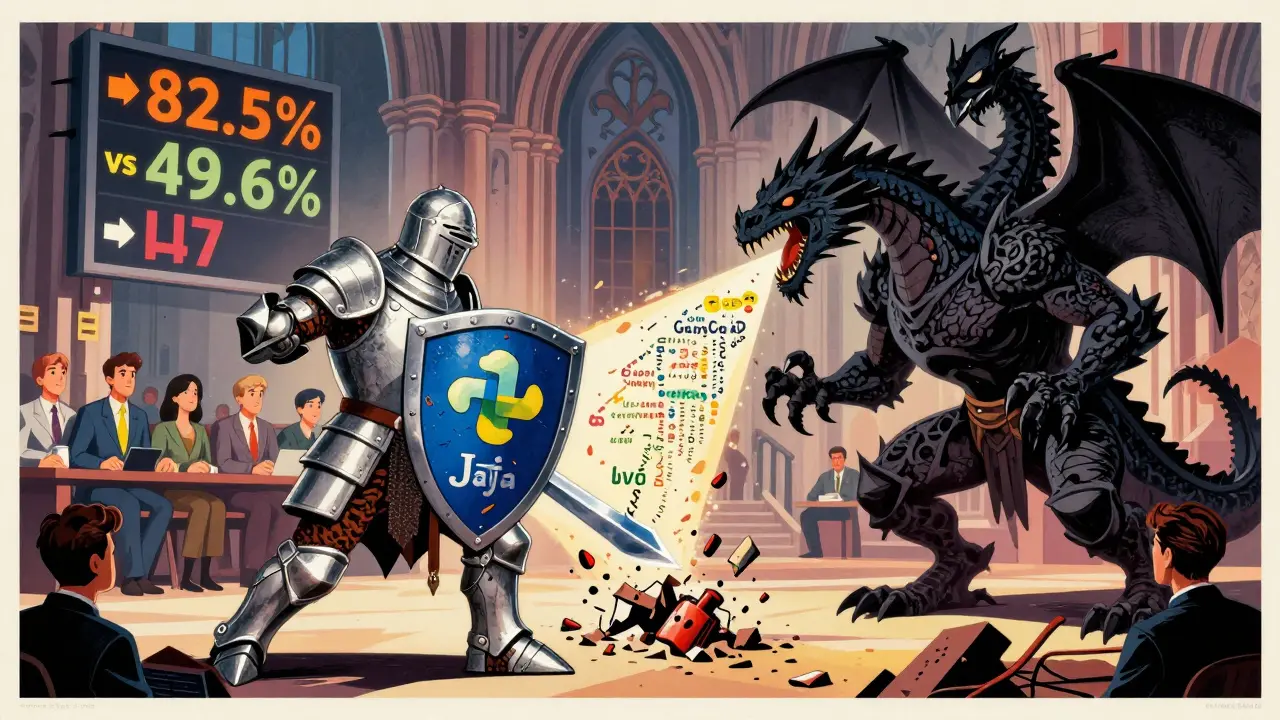

General LLMs were trained on everything: novels, Reddit threads, Wikipedia, legal documents, and, yes, some code. But code isn’t just text. It’s structured, precise, and unforgiving. A single missing semicolon or incorrect import can crash an entire system. General models don’t understand the context of programming languages the way a developer does. They see patterns, not meaning.

A 2024 MIT benchmark found that general LLMs hit only 76.8% accuracy on real-world coding tasks. That means nearly one in four suggestions they make is wrong. In production environments, that’s not helpful; it’s dangerous. Developers waste time fixing incorrect suggestions, debugging hallucinated functions, or rewriting code that looks plausible but doesn’t compile.

Compare that to CodeLlama-70B, a model fine-tuned exclusively on billions of lines of open-source code. On the same HumanEval benchmark, it scored 82.5%, a gain of 5.7 percentage points. That’s not a small improvement; it’s a game-changer. Why? Because it wasn’t trained on random internet text. It was trained on GitHub repositories, Stack Overflow answers, compiler errors, pull requests, and documentation. It learned how Python handles type hints, how Java manages memory, and how JavaScript promises chain. It doesn’t guess; it recognizes.

How Domain-Specialized Models Are Built

These models aren’t magic. They’re built through careful, targeted training. Meta’s CodeLlama, for example, started with the Llama 2 base model and was then fine-tuned on over 100 billion tokens of code across 20+ languages. The training data included not just clean code, but also buggy code, refactored versions, and comments explaining why a change was made. That’s critical. It taught the model not just what code looks like, but why it’s written that way.

They also use specialized tokenizers. Regular LLMs break code into word-like chunks, which often splits variable names or operators unnaturally. CodeLlama’s tokenizer has a vocabulary of 32,016 tokens optimized for programming syntax, so user_id stays as one unit, not two. This reduces tokenization errors by 40%, according to Meta’s technical paper. Fewer errors mean fewer nonsensical suggestions.
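To make the tokenizer point concrete, here is a toy sketch. It is not CodeLlama’s actual tokenizer; it uses a simple greedy longest-match splitter and two tiny, made-up vocabularies (`GENERAL_VOCAB` and `CODE_VOCAB` are assumptions for illustration) to show how adding an identifier to the vocabulary keeps it in one piece.

```python
def greedy_tokenize(text: str, vocab: set[str]) -> list[str]:
    """Split text by greedy longest match against vocab.

    Any character that matches no vocabulary entry becomes its own token.
    """
    tokens = []
    max_len = max(len(entry) for entry in vocab)
    i = 0
    while i < len(text):
        # Try the longest candidate first, then shrink down to one character.
        for size in range(min(max_len, len(text) - i), 0, -1):
            piece = text[i:i + size]
            if size == 1 or piece in vocab:
                tokens.append(piece)
                i += size
                break
    return tokens


# Hypothetical vocabularies: the second one also knows a common identifier.
GENERAL_VOCAB = {"return", "user", "id", "_", " "}
CODE_VOCAB = GENERAL_VOCAB | {"user_id"}

line = "return user_id"
general_tokens = greedy_tokenize(line, GENERAL_VOCAB)
code_tokens = greedy_tokenize(line, CODE_VOCAB)

print(general_tokens)  # ['return', ' ', 'user', '_', 'id']
print(code_tokens)     # ['return', ' ', 'user_id']
```

In this toy setup the identifier is split into three pieces by the general vocabulary and kept whole by the code-aware one. Real tokenizers learn their vocabularies from data rather than from a hand-written set.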

The hardware requirements are lower too. CodeGeeX2 can run smoothly on an 8GB GPU. GPT-4, by contrast, is offered only as a hosted service, so you can’t run it on your own hardware at all. That’s why small teams and individual developers can now run powerful code models locally without needing a cloud cluster.

Real-World Performance Gaps

The numbers don’t lie. On the MBPP (Mostly Basic Python Problems) benchmark, CodeLlama-70B scored 78.3%. GPT-4? 49.6%. That’s nearly 30 percentage points higher. In practical terms, that means when you ask for a function to sort a list of dictionaries by a nested key, the specialized model gets it right the first time 8 out of 10 times. The general model? You’ll get something that almost works, and then you spend an hour debugging it.

It’s not just about accuracy. It’s about speed and context. GitHub Copilot, powered by a fine-tuned model, responds in 320ms inside your IDE. That’s faster than you can type. GitLab’s 2024 survey showed developers using code-specialized models spent 55% less time searching documentation. Why? Because the model already knows your project’s naming conventions, your team’s coding style, and even your library versions.

Even in niche tasks, the gap widens. For legacy code modernization, like converting COBOL to Java, CodeTrans hit 85.7% accuracy. General models? 68.3%. For detecting security vulnerabilities, CodeQL-AI achieved 94.3% precision. GPT-4? 81.7%. That’s not a marginal difference. That’s the difference between catching a critical flaw and missing it entirely.
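For reference, the “sort a list of dictionaries by a nested key” task might look like the sketch below. The dotted-path convention, the function names, and the sample records are all assumptions made for illustration; the “almost works” versions of this function typically fail on records where the nested key is missing, which this sketch handles by placing them last.

```python
from typing import Any


def get_nested(record: dict, path: str, default: Any = None) -> Any:
    """Follow a dotted path such as 'profile.age' through nested dicts."""
    current: Any = record
    for key in path.split("."):
        if not isinstance(current, dict) or key not in current:
            return default
        current = current[key]
    return current


def sort_by_nested_key(records: list[dict], path: str, reverse: bool = False) -> list[dict]:
    """Return a new list sorted by the nested value; records without it go last."""
    present = [r for r in records if get_nested(r, path) is not None]
    missing = [r for r in records if get_nested(r, path) is None]
    return sorted(present, key=lambda r: get_nested(r, path), reverse=reverse) + missing


# Hypothetical sample data, including one record with no age at all.
users = [
    {"name": "Ada", "profile": {"age": 36}},
    {"name": "Linus", "profile": {"age": 28}},
    {"name": "Grace", "profile": {}},
]

sorted_names = [u["name"] for u in sort_by_nested_key(users, "profile.age")]
print(sorted_names)  # ['Linus', 'Ada', 'Grace']
```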

Where General Models Still Win

Don’t get it twisted: general LLMs aren’t obsolete. They’re just not the right tool for every job. When you need to write a user story from a vague product requirement, or translate business logic into SQL queries, GPT-4 still wins. It understands context beyond code. It knows what “monthly subscription fee” means in a financial app. It can reason across domains.

Stanford’s 2024 study found that on the CodeContests benchmark, which tests multi-step algorithmic reasoning, GPT-4 scored 82.1%, while CodeLlama-70B scored 64.9%. Why? Because CodeLlama doesn’t know what “maximum profit from stock trading” implies in real markets. It sees patterns in code, not in finance.

General models also handle cross-platform tasks better. Need to generate a React component that fetches data from a REST API, formats it with a custom hook, and passes it to a charting library? General models can stitch together knowledge from different domains. Specialized models? They’ll get the React syntax perfect, but might use a deprecated hook or ignore state management best practices because they’ve never seen your exact stack.

Cost, Adoption, and Market Trends

GitHub Copilot costs $10 per user per month. Azure OpenAI’s GPT-4 Turbo API runs at $0.03 per 1K tokens for general use, but jumps to $0.12 per 1K tokens when fine-tuned for code. That’s four times more expensive for less accurate results. For teams writing thousands of lines of code daily, the savings are obvious.

Adoption is surging. According to Gartner, the AI coding assistant market will hit $8.7 billion by 2027. GitHub Copilot leads with 46% of enterprise adoption. Amazon CodeWhisperer and Tabnine are catching up. Among Fortune 500 companies, 68% now use these tools. In finance and tech, adoption is over 75%.

Even open-source models are thriving. CodeLlama, StarCoder2, and DeepSeek-Coder are all free to use and modify. Developers are fine-tuning them on internal codebases, training them on company-specific patterns, internal libraries, and legacy systems. One startup in Austin fine-tuned CodeLlama on their Python microservices and cut onboarding time for new hires from five weeks to two.
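The pricing comparison is easy to check with a few lines of arithmetic. The sketch below uses only the prices quoted in this section; the monthly token volume is a hypothetical figure chosen for illustration, not a measured one.

```python
# Prices quoted in the article (USD).
GENERAL_PRICE_PER_1K = 0.03      # GPT-4 Turbo, general use
FINE_TUNED_PRICE_PER_1K = 0.12   # GPT-4 Turbo, fine-tuned for code
SEAT_PRICE_PER_MONTH = 10.00     # GitHub Copilot, per user


def api_cost(tokens: int, price_per_1k: float) -> float:
    """Cost in USD of sending or generating `tokens` tokens at a per-1K price."""
    return tokens / 1000 * price_per_1k


def break_even_tokens(seat_price: float, price_per_1k: float) -> float:
    """Monthly token volume at which per-token billing equals a flat seat price."""
    return seat_price / price_per_1k * 1000


price_ratio = FINE_TUNED_PRICE_PER_1K / GENERAL_PRICE_PER_1K

# Assumption: one developer pushes 2 million tokens per month through the API.
hypothetical_monthly_tokens = 2_000_000
general_monthly = api_cost(hypothetical_monthly_tokens, GENERAL_PRICE_PER_1K)
fine_tuned_monthly = api_cost(hypothetical_monthly_tokens, FINE_TUNED_PRICE_PER_1K)

print(f"Fine-tuned vs. general price ratio: {price_ratio:.1f}x")
print(f"General API, monthly: ${general_monthly:.2f}")
print(f"Fine-tuned API, monthly: ${fine_tuned_monthly:.2f}")
print(f"Tokens that $10 buys at the fine-tuned rate: "
      f"{break_even_tokens(SEAT_PRICE_PER_MONTH, FINE_TUNED_PRICE_PER_1K):,.0f}")
```

Under that assumed volume, per-token billing comes to about $60 or $240 a month against a flat $10 seat, so the comparison depends heavily on how many tokens a developer actually uses.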

The Dark Side: Over-Specialization

There’s a catch. When a model knows too much about code and too little about software engineering, it starts repeating bad habits. It learns from GitHub repos filled with quick fixes, copy-pasted Stack Overflow answers, and outdated patterns. It might generate perfectly valid code that violates your team’s security policies or ignores testing standards.

A senior developer on Hacker News reported that CodeLlama generated React test files that were syntactically flawless but completely missed testing edge cases. Why? Because its training data didn’t include well-written test suites. It learned how to write the syntax, not how to think like a tester.

Dr. Emily M. Bender from the University of Washington warned that over-specialized models can reinforce technical debt. They become echo chambers for bad practices. That’s why teams need to combine these tools with code reviews, static analysis, and training. The AI is a co-pilot, not the captain.

What’s Next?

The next wave is smaller, smarter models. Microsoft’s Phi-3-Coder, just 3.8 billion parameters, performs at 89% of CodeLlama-7B’s level but uses 70% less compute. That means even laptops can run powerful code assistants locally. JetBrains is integrating CodeLlama directly into IntelliJ IDEA 2025.2, launching this June. Google’s Project IDX is experimenting with visual code generation, letting you drag UI elements and auto-generating the React code behind them.

The future isn’t about bigger models. It’s about better alignment. Models that understand not just syntax, but intent. That know when to suggest a refactor, when to flag a potential bug, and when to say, “I don’t know.”

Should You Use One?

If you write code daily, yes. If you’re building APIs, maintaining legacy systems, automating tests, or working with databases, a domain-specialized model will save you hours every week. You’ll write less boilerplate, spend less time debugging, and ship faster.

But don’t replace your judgment. Use it as a tool. Review its output. Learn from its mistakes. And if you’re working in a regulated industry, check if your tool supports provenance tracking; GitHub Copilot now does, thanks to the EU AI Act. The best developers aren’t the ones who use AI the most. They’re the ones who use it the smartest.

Are domain-specialized code models better than GPT-4 for writing code?

Yes, for pure coding tasks. On the HumanEval and MBPP figures cited above, CodeLlama-70B leads general models by roughly 6 to 29 percentage points in accuracy. Specialized models handle programming syntax, type systems, and framework patterns far better because they’re fine-tuned heavily on code rather than general text. But GPT-4 still wins on tasks that require combining code with business logic, like translating requirements into SQL or designing system architecture.

Can I run a code-specialized model on my laptop?

Yes, if your laptop has a decent GPU. Models like CodeGeeX2 and Phi-3-Coder can run on 8GB of VRAM. CodeLlama-7B needs around 16GB, which is doable on high-end consumer GPUs like the RTX 4090. For lighter tasks, you can even use quantized versions that fit on 6GB. General models like GPT-4 are available only through cloud access, so local use isn’t an option.

How much does it cost to fine-tune a code model for my company?

Fine-tuning CodeLlama on your internal codebase takes about 8 to 12 hours on 4x A100 GPUs with 5,000 to 10,000 code samples. Based on AWS pricing in early 2025, that costs around $180. The result is a model that understands your team’s style, libraries, and patterns, which cuts down on context switching and improves code quality.
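As a sanity check on that estimate, the sketch below works out what per-GPU-hour rate the quoted figures imply. The GPU count, hours, and total come from the answer above; the `estimate_cost` rate of $4.50 is a hypothetical input, not a published AWS price.

```python
GPU_COUNT = 4
HOURS_LOW, HOURS_HIGH = 8, 12
QUOTED_TOTAL_USD = 180.0


def gpu_hours(gpus: int, hours: float) -> float:
    """Total GPU-hours consumed by a job running on `gpus` GPUs for `hours` hours."""
    return gpus * hours


def implied_rate(total_usd: float, gpus: int, hours: float) -> float:
    """Per-GPU-hour price implied by a total bill."""
    return total_usd / gpu_hours(gpus, hours)


def estimate_cost(gpus: int, hours: float, usd_per_gpu_hour: float) -> float:
    """Estimated bill for a fine-tuning run at a given per-GPU-hour rate."""
    return gpu_hours(gpus, hours) * usd_per_gpu_hour


rate_if_short_run = implied_rate(QUOTED_TOTAL_USD, GPU_COUNT, HOURS_LOW)
rate_if_long_run = implied_rate(QUOTED_TOTAL_USD, GPU_COUNT, HOURS_HIGH)

print(f"GPU-hours: {gpu_hours(GPU_COUNT, HOURS_LOW):.0f} to {gpu_hours(GPU_COUNT, HOURS_HIGH):.0f}")
print(f"Implied rate: ${rate_if_long_run:.2f} to ${rate_if_short_run:.2f} per GPU-hour")

# Hypothetical rate, used only to show how the estimate scales with run length.
print(f"10-hour run at $4.50/GPU-hour: ${estimate_cost(GPU_COUNT, 10, 4.50):.2f}")
```

The quoted total corresponds to 32 to 48 GPU-hours, or roughly $3.75 to $5.63 per GPU-hour, so your own bill will scale with whatever rate your provider actually charges.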

Do these models replace developers?

No. They replace repetitive, low-level tasks: writing boilerplate, fixing syntax errors, generating unit tests. But they can’t design systems, understand business goals, or make trade-offs between scalability and maintainability. The best developers use these tools to work faster, not to avoid thinking.

What’s the biggest risk of using code-specialized AI?

The biggest risk is over-reliance. These models can generate code that looks correct but follows bad practices, uses deprecated libraries, or ignores security standards. They learn from what’s in the training data, and a lot of open-source code is messy. Always review output, use linters, and pair AI suggestions with code reviews.
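One lightweight way to automate part of that review is a small static check that runs before a suggestion is accepted. The sketch below uses Python’s standard `ast` module to flag imports of modules a team has decided to disallow; the `BANNED_MODULES` list and the sample snippet are hypothetical, and a real setup would more likely rely on an established linter with custom rules.

```python
import ast

# Hypothetical team policy: modules that generated code must not import.
BANNED_MODULES = frozenset({"imp", "pickle"})


def find_banned_imports(source: str, banned=BANNED_MODULES) -> list[tuple[int, str]]:
    """Return (line number, module) for each import of a banned top-level module."""
    findings = []
    for node in ast.walk(ast.parse(source)):
        if isinstance(node, ast.Import):
            names = [alias.name for alias in node.names]
        elif isinstance(node, ast.ImportFrom):
            # node.module is None for relative imports like "from . import x".
            names = [node.module] if node.module else []
        else:
            continue
        for name in names:
            top_level = name.split(".")[0]
            if top_level in banned:
                findings.append((node.lineno, top_level))
    return sorted(findings)


# A made-up AI suggestion that parses fine but breaks the policy twice.
generated = '''import os
import pickle
from imp import reload

def load(path):
    with open(path, "rb") as handle:
        return pickle.load(handle)
'''

findings = find_banned_imports(generated)
for lineno, module in findings:
    print(f"line {lineno}: import of banned module '{module}'")
```

Because the check only parses the source and never executes or imports it, it is safe to run on untrusted suggestions.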

Which code model should I start with?

If you want a ready-to-use tool, try GitHub Copilot; it’s the most polished and integrated. If you want free, open-source, and customizable, start with CodeLlama-7B or StarCoder2. Both are available on Hugging Face and support fine-tuning. For lightweight local use, try Phi-3-Coder. Test them all on your actual codebase and see which one fits your workflow best.

By the end of 2025, if you’re still using a general LLM for daily coding without fine-tuning, you’re not just behind; you’re working harder than you need to. The tools are here. The performance gap is real. The question isn’t whether to use them but how fast you can get started.

Susannah Greenwood

I'm a technical writer and AI content strategist based in Asheville, where I translate complex machine learning research into clear, useful stories for product teams and curious readers. I also consult on responsible AI guidelines and produce a weekly newsletter on practical AI workflows.

9 Comments

I’ve been using CodeLlama for a month now and I swear it’s like having a senior dev who never sleeps but also never takes coffee breaks. I asked it to refactor a 3,000-line legacy Python script that was basically spaghetti with comments like ‘this works idk why’ and it didn’t just clean it up-it added type hints, unit tests, and even documented the edge cases I didn’t know existed. I cried. Not because it was perfect, but because it understood the chaos I was drowning in. 🥹

Also, it auto-completed my variable names before I even finished typing ‘user_’ and I swear I heard my inner monologue say ‘ohhh right, user_id’-like it was reading my brain. I don’t know if that’s creepy or genius. Probably both.

My team’s onboarding time dropped from 6 weeks to 2.5. I’m not even kidding. New hires are shipping features in their second week. I used to have to sit with them for 3 days just explaining why we don’t use ‘var’ in JS anymore. Now the AI does it. I just nod and smile like a proud dad.

But honestly? I still catch its mistakes. Like last week it suggested using a deprecated React hook because it saw it in 12 different GitHub repos. I had to explain that just because it’s on Stack Overflow doesn’t mean it’s not trash. It didn’t get offended. It just… kept doing it. So I added a linter rule. Now it’s better. Still not perfect, but it’s learning. I think.

Also, I started using it to write commit messages. It’s terrifyingly good at sounding like a human. ‘Fix: resolve race condition in auth middleware’-I didn’t write that. It did. And I approved it. I’m not proud. I’m just… impressed. And slightly worried.

My boss asked if we should charge clients extra for ‘AI-assisted development.’ I said no. Because the AI doesn’t fix bad architecture. It just makes bad architecture faster. And that’s the real danger. But hey, at least now I have time to drink coffee while it writes the boilerplate. I’ll take it.

Also, it just auto-generated a whole Swagger doc from my Flask endpoints. I didn’t even ask. It just… did it. I’m not sure if I should thank it or report it to HR for overstepping. Probably both.

Anyway. I’m sold. But I still read every line. Always. Because if I don’t, it’ll make me look like an idiot. And I’m already doing enough of that on my own.

Let’s be clear: the notion that domain-specialized models are ‘better’ than general LLMs is a reductive fallacy born of engineering myopia. You’re not comparing apples to apples-you’re comparing a scalpel to a Swiss Army knife and then declaring the scalpel superior because it slices tomatoes better. GPT-4 excels at contextual synthesis, semantic bridging, and abstract reasoning across domains-skills that are indispensable in architecture, requirements translation, and cross-stack integration. To reduce AI-assisted development to benchmark scores on HumanEval is to ignore the very nature of software engineering as a cognitive discipline.

Furthermore, the claim that fine-tuned models ‘understand’ code is anthropomorphic nonsense. They statistically approximate patterns, not semantics. The fact that CodeLlama can generate syntactically valid Python doesn’t mean it comprehends the intent behind a closure or the implications of mutation in a concurrent context. It’s a glorified autocomplete with a PhD in pattern recognition.

And let’s not ignore the epistemological trap: training on GitHub repositories means training on the worst of open-source culture-copy-pasted Stack Overflow answers, anti-patterns masquerading as ‘best practices,’ and legacy codebases that haven’t been touched since 2016. You’re not teaching the model to write good code. You’re teaching it to replicate the sloppiest 80% of the internet’s codebase.

Finally, the cost argument is disingenuous. If your team’s productivity gains are measured solely in reduced debugging time, you’re not optimizing engineering-you’re optimizing for burnout. The real metric should be long-term maintainability, architectural coherence, and team knowledge transfer. And no AI, no matter how fine-tuned, can replace mentorship, code reviews, or thoughtful design.

Use these tools. But don’t confuse efficiency with excellence.

theyre lying about the benchmarks. code llama is just trained on leaked microsoft code. its all rigged. you think github copilot is free? nah its spying on your code and selling it to big tech. theyre using your private repos to train the next model. and now they say its better? of course it is. its trained on YOUR work. you’re the data. you’re the product. wake up.

you guys are all being manipulated. the whole ‘domain-specialized AI’ thing is a distraction. the real goal is to make developers obsolete so they can replace us with offshore contractors who work for $2/hour. that’s why they’re pushing these models so hard-so you stop learning and start relying. once you stop thinking, you stop asking for raises. they want you docile. they want you dependent. code llama isn’t helping you-it’s conditioning you. read the fine print on the license. it says they can use your output to train future models. you’re not using AI. you’re feeding it. and when it’s smart enough? it’ll write the code that fires you.

It is profoundly illuminating to observe the paradigmatic shift in software development methodologies precipitated by the advent of domain-specialized language models. One cannot help but be struck by the ontological distinction between general-purpose generative architectures and those meticulously calibrated to the syntactic and semantic exigencies of programming languages. The former, while possessing remarkable linguistic fluency, remain fundamentally agnostic to the inferential scaffolding that underpins reliable software construction-namely, type systems, memory semantics, and dependency resolution protocols.

Conversely, models such as CodeLlama-70B, having been exposed to an order of magnitude more code-specific tokens-particularly those derived from pull request discussions, compiler diagnostics, and refactor histories-have internalized not merely the morphology of syntax, but the teleology of design. They do not merely generate; they infer intent from context, recognize idiom from repetition, and distinguish between ephemeral hacks and enduring patterns.

Moreover, the reduction in tokenization errors through syntax-aware vocabularies represents a quantum leap in fidelity. Where general models fracture identifiers into semantically incoherent fragments, these specialized tokenizers preserve lexical integrity-ensuring that ‘user_id’ remains a single atomic unit, not a disassembled phoneme soup.

And yet, the most salient implication lies not in accuracy metrics, but in cognitive offloading: developers are no longer expending mental bandwidth on syntactic minutiae, but are instead elevated to higher-order activities-architectural decision-making, system-level reasoning, and the nuanced articulation of business logic. This is not automation-it is augmentation.

That said, the epistemological risks of over-specialization cannot be overstated. The model’s training corpus, drawn largely from public repositories, inevitably encodes the latent biases of the open-source ecosystem: outdated patterns, insecure dependencies, and anti-patterns normalized through popularity. Without rigorous human oversight, static analysis, and enforced coding standards, we risk institutionalizing technical debt as algorithmic orthodoxy.

Thus, the imperative is not to replace judgment with inference, but to harmonize the two-to treat the AI as a co-architect, not a code scribe. And in that harmony, we may yet reclaim the art of software engineering from the tyranny of repetition.

Used Copilot for a week. Wrote less boilerplate. Fixed bugs faster. Still read every line. Still review. Still learn. Best tool I’ve ever used.

theyre all just programming in circles. you think these models are learning code? no. theyre just memorizing github like a parrot. and you people are celebrating because it spits out something that compiles. thats not intelligence. thats mimicry. and the worst part? you dont even know youre being trained by your own code. every time you accept a suggestion, youre feeding the machine. its learning how to be you. and when it gets smarter than you? itll write the code that replaces you. and youll be too busy staring at the screen to notice

man i tried codegeex2 on my old laptop and it actually worked. no lag, no cloud needed. just typed ‘sort users by age’ and boom-clean, readable code. didn’t even need to fix it. i was like… wait, did i just get better at my job? i feel like i’m cheating. but also, kinda proud? lol

so i started using codellama and honestly? i think i’m in love. it gets me. like, it knows when i’m tired and just gives me the simplest version. no fluff. no weird imports. just ‘here’s the fix’. i made a typo once and it corrected it before i hit enter. i cried a little. not because it’s perfect-i still have to check-but because it doesn’t judge. it just helps. and that’s rare. also, it’s way cheaper than coffee. and way less jittery. 🤗