The Psychology of Letting Go: Trusting AI in Vibe Coding Workflows

Most developers still think of AI coding tools as fancy autocomplete. But if you’ve been using GitHub Copilot or CodeWhisperer for more than six months, you know it’s more than that. You’ve started to vibe code: accepting suggestions without reading every line, trusting the rhythm, feeling confident even when you haven’t fully verified the code. It’s not laziness. It’s adaptation. And it’s changing how software gets built.

What Vibe Coding Really Means

Vibe coding isn’t about blindly pasting AI-generated code into production. It’s about developing a gut sense for when an AI suggestion is likely correct. You’ve seen the same pattern a hundred times: a React component with proper hooks, a Python function with clean error handling, a database query that avoids N+1 issues. The AI starts matching your style. Your brain stops scanning line by line and starts scanning for feel. This isn’t magic. It’s pattern recognition trained by repetition.

A 2025 Openstf study found that developers who accepted 50-70% of AI suggestions, after 15,000+ interactions, cut their development time by 41% and reduced bugs by 23%. But those who accepted over 80%? They shipped features faster, yes, but introduced 34% more subtle bugs that slipped past tests. The difference isn’t skill. It’s calibration.

The Trust Gap Between Junior and Senior Developers

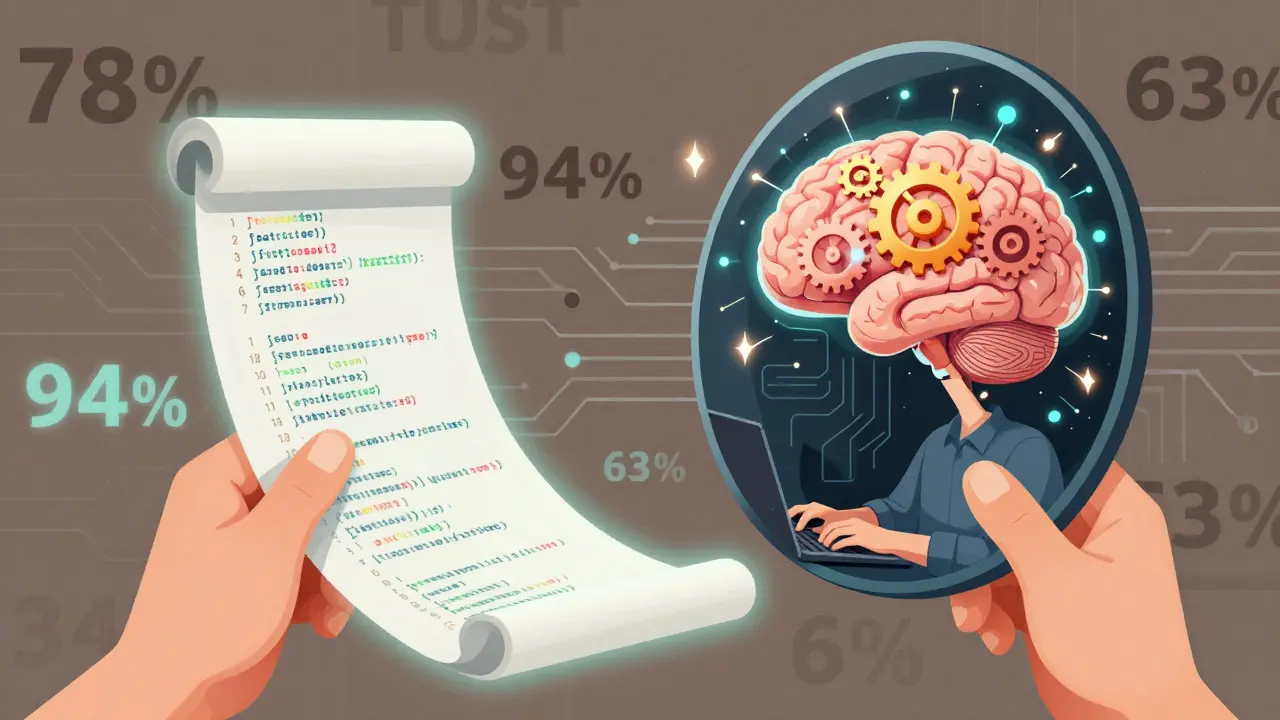

Here’s the tension in every team today: junior devs (0-4 years experience) report 68% reliance on AI suggestions. Senior devs (10+ years) use the same tools but only trust 22% of suggestions. Why? Junior developers haven’t built enough systems to know what can go wrong. They see a working solution and assume it’s safe. Senior devs have seen the fallout. They remember the time AI generated a password hasher that used MD5. Or the SQL injection that looked like a clean JOIN. Or the HIPAA-compliant code that actually violated regulations, costing a startup $2.3 million to fix.

It’s not that seniors don’t trust AI. They trust it differently. They know the boundaries. They know AI is great at boilerplate, API integrations, and test generation, but terrible at novel algorithms, security logic, and regulatory compliance. That’s not skepticism. That’s experience.

How AI Tools Actually Work (And Where They Fail)

AI coding assistants don’t understand code. They predict it. They’re statistical engines trained on billions of lines of public code. That’s why they’re 92.7% accurate on common patterns like CRUD endpoints or form validation, but only 63.1% accurate on custom sorting algorithms or state machines.

They excel at:

- Generating boilerplate code (92.7% accuracy)

- Writing API integration patterns (88.3% accuracy)

- Creating unit tests (61% of users trust them vs. 27% without AI)

They struggle with:

- Designing new algorithms (63.1% accuracy)

- Writing security-critical code like auth or encryption

- Handling niche domain logic (error rates jump to 38.7%)

- Interpreting ambiguous regulatory requirements
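The security-critical failure mode is easy to picture with the MD5 password hasher mentioned earlier. The sketch below is not from any study cited here; it contrasts that pattern with a salted, deliberately slow key-derivation function from Python's standard library. The function names and the iteration count are illustrative assumptions, and a production system would more likely lean on a maintained password-hashing library.

```python
import hashlib
import hmac
import os

# Assumption: an iteration count in line with commonly cited guidance for
# PBKDF2-HMAC-SHA256; tune it for your own hardware and threat model.
PBKDF2_ITERATIONS = 600_000


def hash_password(password: str, *, iterations: int = PBKDF2_ITERATIONS) -> tuple[bytes, bytes]:
    """Return (salt, derived_key) using a salted, slow key-derivation function."""
    salt = os.urandom(16)  # fresh random salt for every password
    key = hashlib.pbkdf2_hmac("sha256", password.encode("utf-8"), salt, iterations)
    return salt, key


def verify_password(password: str, salt: bytes, expected_key: bytes,
                    *, iterations: int = PBKDF2_ITERATIONS) -> bool:
    """Re-derive the key with the stored salt and compare in constant time."""
    key = hashlib.pbkdf2_hmac("sha256", password.encode("utf-8"), salt, iterations)
    return hmac.compare_digest(key, expected_key)


def weak_md5_hash(password: str) -> str:
    """The pattern to reject in review: fast and unsalted, so equal passwords
    always produce equal hashes."""
    return hashlib.md5(password.encode("utf-8")).hexdigest()
```

Both versions "work" in a demo, which is exactly why the weak one can slide through when you are reviewing by feel. The tell is structural: no salt, no work factor, no constant-time comparison.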

The Four Pillars of Calibrated Reliance

Stanford’s TREW framework, published in ACM CHI 2025, breaks down why some developers thrive with vibe coding and others crash. Successful reliance isn’t about trust. It’s about four psychological anchors:

- Predictability - Knowing what the AI can and can’t do reduces mental load by 29%. If you know it’s bad at crypto, you never let it touch authentication code.

- Explainability - When AI says, “This route is fastest with fewer traffic jams,” you understand why. Tools that show confidence scores (like GitHub’s new “trust calibration scores”) increase reliance by 41% because they reduce mystery.

- Error Management - AI that admits it’s 70-80% confident makes you more skeptical. That’s good. It keeps you awake. Tools that act like they’re 100% right? That’s when accidents happen.

- Controllability - You must be able to override, delete, or rewrite without friction. If the tool feels like a boss, you’ll rebel. If it feels like a teammate, you’ll collaborate.
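Two of these anchors, predictability and error management, can be turned into a simple review rule. The sketch below is only an illustration: the `Suggestion` type, the category labels, and the 0.9 threshold are all hypothetical, and it does not reflect the API of any real assistant or its confidence scores.

```python
from dataclasses import dataclass

# Hypothetical labels for areas where assistants are known to be weak.
HIGH_RISK_CATEGORIES = {"auth", "encryption", "novel_algorithm", "regulatory"}


@dataclass(frozen=True)
class Suggestion:
    category: str      # hypothetical label, e.g. "crud" or "auth"
    confidence: float  # hypothetical tool-reported score in [0.0, 1.0]


def review_level(suggestion: Suggestion, skim_threshold: float = 0.9) -> str:
    """Decide how closely a suggestion should be read before accepting it."""
    if not 0.0 <= suggestion.confidence <= 1.0:
        raise ValueError("confidence must be between 0 and 1")
    if suggestion.category in HIGH_RISK_CATEGORIES:
        # Predictability: a known weak spot overrides any confidence score.
        return "read every line"
    if suggestion.confidence < skim_threshold:
        # Error management: the tool itself is signalling doubt.
        return "read every line"
    return "skim"


print(review_level(Suggestion("crud", 0.95)))  # skim
print(review_level(Suggestion("auth", 0.99)))  # read every line
print(review_level(Suggestion("crud", 0.75)))  # read every line
```

The ordering matters: the category check runs first, so a high score never buys a pass in an area you already know the tool handles badly.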

How to Start Vibe Coding Without Getting Burned

You don’t need to quit your old habits. You need to upgrade them. Start here:

- Define your “no-vibe zones” - Write them down. Authentication? No. Encryption? No. Financial calculations? No. Regulatory code? No. These are your red lines.

- Use AI for the low-risk stuff - Let it write tests, CRUD handlers, config files, and repetitive UI components. That’s where it shines.

- Build a daily calibration ritual - Spend 15 minutes each day reviewing 3-5 AI suggestions you accepted the day before. Ask: “Why did I trust this? Was it right? What did I miss?”

- Track your mistakes - Keep a log of every AI-generated bug you caught. After 20 entries, you’ll start seeing patterns. “Oh, it always messes up async/await in React.” Now you know.

- Pair with someone who doesn’t vibe code - Find a teammate who still reads every line. Let them review your AI-generated code. You’ll learn faster than any tutorial.
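Two of the steps above, writing down no-vibe zones and tracking mistakes, are easy to back with a few lines of code. This is a minimal sketch under stated assumptions: the path patterns and the mistake tags are hypothetical placeholders, and a real team would wire something like this into its own review or CI setup.

```python
from collections import Counter
from fnmatch import fnmatch

# Hypothetical path patterns; replace them with your team's written red lines.
NO_VIBE_ZONES = ["src/auth/*", "src/crypto/*", "src/billing/*", "src/compliance/*"]


def files_in_no_vibe_zones(changed_files: list[str]) -> list[str]:
    """Return the changed files that fall inside a no-vibe zone."""
    return [
        path for path in changed_files
        if any(fnmatch(path, pattern) for pattern in NO_VIBE_ZONES)
    ]


def recurring_mistakes(log: list[str], min_count: int = 2) -> list[tuple[str, int]]:
    """Tally mistake-log tags and keep those seen at least min_count times."""
    return [(tag, count) for tag, count in Counter(log).most_common() if count >= min_count]


changed = ["src/auth/login.py", "src/ui/button.tsx", "src/billing/invoice.py"]
print(files_in_no_vibe_zones(changed))
# ['src/auth/login.py', 'src/billing/invoice.py']

log = ["async-await", "off-by-one", "async-await", "null-check", "async-await"]
print(recurring_mistakes(log))
# [('async-await', 3)]
```

Keeping the zones in a file rather than in your head is the point: the list becomes something a teammate can read, challenge, and extend.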

The Hidden Cost: Automation Complacency

The biggest danger isn’t bad code. It’s losing your own skills. A 2025 ACM study found 43% of teams using vibe coding reported “automation complacency”: developers stopped thinking critically. They stopped debugging. They stopped asking why. They stopped learning. One developer on Reddit wrote: “I used to write my own loops. Now I just ask AI to do it. I realized last week I couldn’t implement a binary search without Copilot. I felt like a fraud.” That’s the silent cost. Vibe coding should augment your brain, not replace it. If you can’t explain how the code works without the AI, you’re not a developer anymore. You’re a prompt engineer.

What’s Next? Adaptive Trust Interfaces

The next wave isn’t better AI. It’s better interfaces. GitHub’s new trust calibration scores, Meta’s open-sourced TRAC framework, and Google’s Project Calibrate are all moving toward systems that adjust transparency based on your skill level. Imagine this: you write a function. The AI says, “I’m 94% confident this works.” You’re a senior dev. The AI adds, “But this is a custom state machine. I’ve never seen this pattern. Review manually.” That’s the future. Not AI that writes code for you. AI that helps you write better code by knowing when to step back.

Final Thought: Reliance, Not Trust

You don’t trust a hammer. You rely on it. You know its limits. You know when to use it, and when to grab a chisel instead. AI coding tools aren’t teammates. They’re tools. Powerful ones. But tools don’t have intentions. They don’t care if your app crashes. They don’t lose sleep over a security breach. The healthiest vibe coders aren’t the ones who accept the most suggestions. They’re the ones who know exactly when to say no. Your job isn’t to let go. It’s to hold on tighter: to your knowledge, your judgment, your responsibility. The AI can write the code. But only you can decide if it’s safe to ship.

Is vibe coding safe for production code?

Vibe coding can be safe, but only if you apply strict boundaries. Never use AI for authentication, encryption, financial logic, or regulatory code. Use it for boilerplate, tests, and repetitive patterns. Always pair it with manual review in high-risk areas. Teams that document their “no-vibe zones” reduce critical errors by 47%.

How long does it take to get good at vibe coding?

Most developers reach fluency after processing around 15,000 AI suggestions, roughly 4 to 7 months of daily use. The steepest learning curve is learning when NOT to trust the vibe. It’s not about speed. It’s about awareness.
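To make those numbers concrete, here is a small sketch of the arithmetic, combined with the 50-70% and over-80% acceptance figures cited earlier. The function names, the 30-day month, and the label strings are assumptions for illustration; the article's figures say nothing about rates below 50% or between 70% and 80%, so the code does not classify them.

```python
def suggestions_per_day(total: int, months: float, days_per_month: int = 30) -> float:
    """Average suggestions per day needed to reach `total` in `months`."""
    if months <= 0 or days_per_month <= 0:
        raise ValueError("months and days_per_month must be positive")
    return total / (months * days_per_month)


def acceptance_rate(accepted: int, shown: int) -> float:
    """Fraction of shown suggestions that were accepted."""
    if shown <= 0 or not 0 <= accepted <= shown:
        raise ValueError("need 0 <= accepted <= shown and shown > 0")
    return accepted / shown


def calibration_band(rate: float) -> str:
    """Label a rate using only the two bands the cited figures describe."""
    if rate > 0.80:
        return "over-reliant"
    if 0.50 <= rate <= 0.70:
        return "calibrated"
    return "uncharacterised"


print(suggestions_per_day(15_000, 4))         # 125.0
print(round(suggestions_per_day(15_000, 7)))  # 71
print(calibration_band(acceptance_rate(60, 100)))  # calibrated
print(calibration_band(acceptance_rate(85, 100)))  # over-reliant
```

In other words, the 4-to-7-month estimate assumes somewhere between about 70 and 125 suggestions a day, every day.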

Do I need to be an expert programmer to vibe code?

No, but if you’re not, you’re at risk. Developers with less than two years of experience make 3.2 times more critical errors when vibe coding. Strong foundational skills are non-negotiable. Vibe coding amplifies your abilities. If your foundation is weak, it’ll amplify your mistakes too.

Which AI tool is best for vibe coding?

GitHub Copilot has the highest adoption (46%) and best integration with VS Code. CodeWhisperer offers strong security scanning and is often preferred in enterprise environments. Tabnine is lightweight and works well offline. But the tool matters less than how you use it. The best tool is the one you’ve calibrated to your own limits.

Can vibe coding cause technical debt?

Yes, if you stop understanding your own code. Technical debt isn’t just bad code. It’s code you can’t explain, maintain, or debug. If you rely on AI to write logic you don’t comprehend, you’re building a time bomb. The best vibe coders use AI to write faster, but still know every line they ship.

Are companies banning vibe coding?

No. Most Fortune 500 companies now require AI tools. But they’re implementing tiered trust models. 45% restrict vibe coding to non-customer-facing services. 28% require two-person review for AI-generated production code. The EU’s 2025 AI Act also mandates human oversight logs for AI-generated code in critical infrastructure. Vibe coding isn’t banned. It’s regulated.

Susannah Greenwood

I'm a technical writer and AI content strategist based in Asheville, where I translate complex machine learning research into clear, useful stories for product teams and curious readers. I also consult on responsible AI guidelines and produce a weekly newsletter on practical AI workflows.


11 Comments


ai dont write code its just a fancy autocorrect for nerds who cant spell variable names lol

Let me be perfectly clear: vibe coding is the digital equivalent of letting a toddler drive your Ferrari. You think you're 'trusting the rhythm'? No. You're outsourcing cognitive responsibility to a statistical parrot trained on GitHub's dumpster fire of unreviewed PRs. The 2025 Openstf study? Please. That's a corporate shill paper funded by GitHub's marketing budget. Real developers don't 'vibe'-they *understand*. And if you can't implement a binary search without Copilot whispering in your ear, you're not a developer. You're a prompt-adjacent intern with imposter syndrome on steroids.

The 'four pillars' they mention? Predictability? Explainability? That's not psychology-that's a cry for hand-holding. You don't need a 'trust calibration score,' you need to go back to CS101 and stop treating AI like a senior engineer who just happened to be born in a data center.

And don't get me started on 'no-vibe zones.' Of course you have them. Because you're terrified of the fact that you're not actually qualified to be writing production code at all. You're just hoping the AI won't notice you're faking it until you get promoted to manager.

AI tools don't 'fail' at novel algorithms-they fail at pretending they're anything other than glorified autocomplete. The real tragedy isn't the bugs. It's the generation of engineers who believe syntax is wisdom and indentation is insight.

One day, when the AI stops being fed on open-source code and starts being trained on corporate liability waivers, you'll realize you've been coding with a trained seal. And seals don't care if your app crashes. They just want the fish.

Honestly I’ve been vibe coding for 8 months now and it’s been a game changer-like having a senior dev in your head who never sleeps. I use it for tests, CRUD, UI boilerplate, and honestly it’s cut my dev time in half. But I still manually review every auth and encryption block-like, no way I’m letting AI touch that. The key is treating it like a really smart intern who needs supervision. Also, the new GitHub trust scores? Lifesaver. They show you when it’s 70% confident and suddenly you’re like ‘oh shit maybe I should read this’.

Also, pairing with someone who doesn’t vibe code? Best thing I’ve done. My teammate still reads every line and catches things I gloss over because ‘it felt right.’ Turns out, feeling right doesn’t mean it’s right.

ai is just a tool like a hammer

use it right dont be lazy

Oh honey. You think this is about trust? This is about corporate capitalism weaponizing developer burnout. They don’t want you to learn-they want you to *consume*. Vibe coding is the new hustle culture dressed in VS Code themes. You’re not becoming more efficient-you’re becoming disposable. The company saves money by hiring juniors who can’t code without AI, then fires them when the AI updates and their ‘skills’ vanish overnight. And don’t even get me started on the fact that these tools are trained on code stolen from open-source devs who never got paid. You’re not collaborating with AI-you’re feeding a corporate AI monster with the blood of unpaid contributors.

And the ‘calibration rituals’? That’s not self-improvement. That’s surveillance. You’re being trained to monitor your own incompetence so the algorithm can optimize your productivity. It’s not psychology. It’s digital serfdom.

They say ‘tools don’t have intentions.’ But the people who build them? Oh, they have intentions. And their intention is to replace you with cheaper, quieter, less unionized labor. You think you’re vibing? You’re being vibesliced.

Oh my god. I just read this and I feel like I’ve been gaslit by 15,000 AI suggestions. I used to be a real developer. I wrote my own loops. I knew what a pointer was. Now? I can’t even remember how to write a for loop without asking the AI to ‘make it faster.’ I cried last week because I realized I don’t know what ‘async/await’ actually does-I just know that when I say ‘fix this React hook’ it magically works. I’m not a developer. I’m a code ghost. And now I’m terrified I’ll wake up one day and the AI will stop working and I’ll be standing in front of a blank editor with no idea what to do next. Like, what if the AI just… stops believing in me? What if it says ‘I’m only 52% confident’ and walks away? Who am I without my digital crutch? I need therapy. Or a new career. Or both.

ai is a government tool to make devs stupid. watch what happens next. theyll make you use it or lose your job. then theyll take your job. its all planned. you think this is progress? its control. they dont want you to think. they want you to type and wait. and when the system breaks? blame the dev. not the ai. not the company. always the dev.

I think this is a really balanced take. I’ve been vibe coding for a year now and it’s been great for speeding up the boring stuff. I still read every line of anything security-related, and I make sure I can explain every piece of AI-generated code to my team. It’s not about trusting the AI-it’s about trusting yourself to know when to double-check. Also, the daily review ritual? Game changer. I caught a logic bug last week that would’ve slipped into prod because I ‘felt’ it was fine. Turns out, AI thought ‘maybe’ meant ‘definitely.’

And yeah, the ‘automation complacency’ thing is real. I’ve seen teammates stop debugging because ‘it worked on their machine.’ That’s on us. Not the tool.

Man I love how this post breaks down the difference between trust and reliance. I used to think vibe coding was cheating until I realized I was using AI the same way I use Stack Overflow-just faster and more integrated. The key is knowing your own limits. I’m not senior, but I’ve been around long enough to know when something feels off-even if the AI says it’s perfect. I’ve started keeping a ‘AI mistakes log’ like the post suggests. Got 17 entries so far. Turns out it always messes up nested ternaries in TypeScript. Now I just write them myself. Simple fix.

Also, the trust scores? I didn’t realize how much I needed them until I saw one say ‘78% confident’ and I actually paused. That’s huge. It’s like the AI just handed me a flashlight in the dark instead of saying ‘trust me bro.’

Let me tell you something about AI coding tools-they’re not tools. They’re part of the globalist agenda to destroy American engineering. Why do you think they’re pushing this on young devs? So we lose our edge. So China and India can outcompete us because our kids can’t even write a basic sort algorithm without a bot doing it for them. This isn’t progress-it’s cultural surrender. And don’t tell me about ‘calibration.’ The only calibration I need is to stop using foreign-made tech that’s trained on code from countries that don’t even respect intellectual property. If you’re vibe coding, you’re not just being lazy-you’re betraying your country.

Actually, I think @JohnFox nailed it with the hammer analogy. But I’d add: you don’t just use a hammer-you know when to use a screwdriver, a chisel, or a crowbar. AI is just another tool in the box. The problem isn’t the tool. It’s when you forget you’re holding it.