Chain-of-Thought in Vibe Coding: Why Explanations Before Code Work Better

Ever had an AI generate code that looked perfect but broke in three different ways when you ran it? You copy-pasted the solution, thought you were done, and then spent two hours debugging something the AI should’ve caught. That’s not your fault. It’s the result of asking for code without asking for reasoning first.

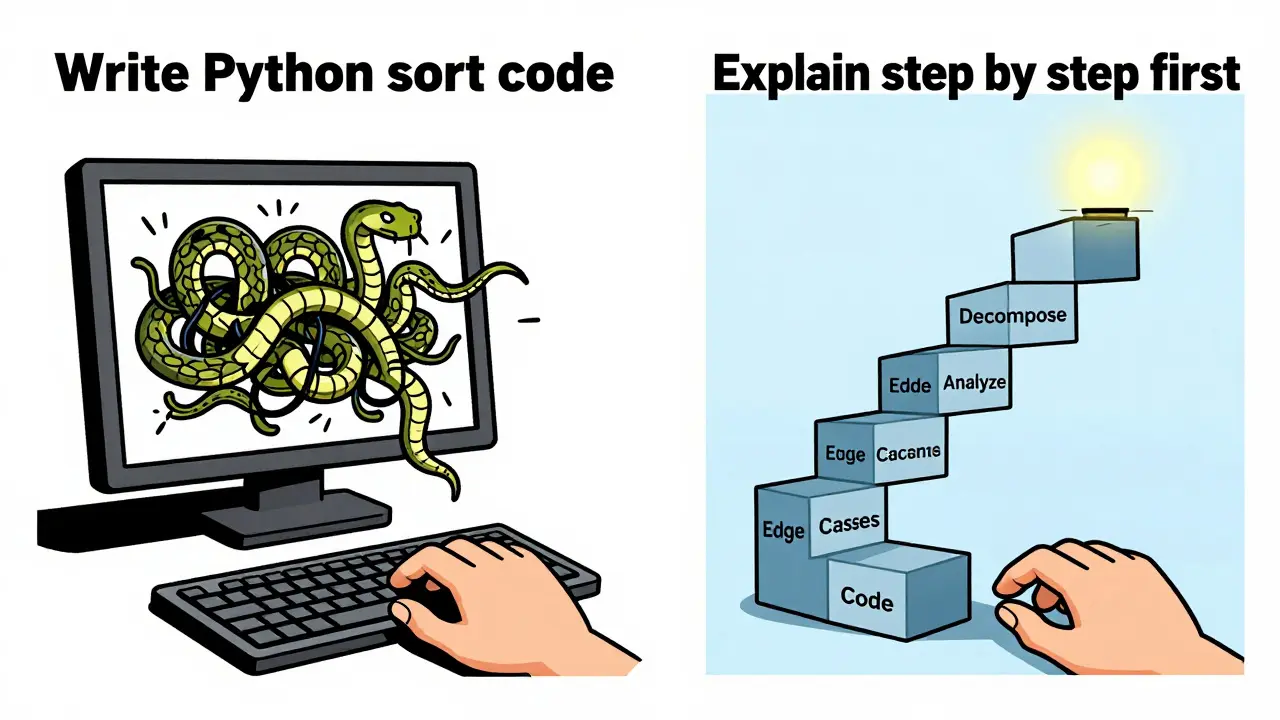

Why Just Asking for Code Doesn’t Work

Most people treat AI coding assistants like magic code generators. You type: "Write a Python function to sort a list of dictionaries by date," and you get back a block of code. Sometimes it works. Sometimes it doesn’t. And when it fails, you have no idea why. The problem isn’t the AI. It’s the prompt. Large language models (LLMs) like GPT-4, Claude 3, and Llama 3 don’t "understand" code the way a human does. They predict the next token based on patterns they’ve seen. Without structure, they’ll guess. And guessing in code? That’s how you get off-by-one errors, infinite loops, and memory leaks disguised as "working" solutions. Research from 2022 shows how much a little structure helps: on multi-step math word problems, a large model answered only about 18% correctly when asked directly, but about 79% when the prompt added "Let’s think step by step." Those were math problems, not code, but the same principle applies to programming tasks that need more than one logical step: algorithms, data structures, system design.

What Chain-of-Thought Prompting Actually Does

Chain-of-Thought (CoT) prompting isn’t fancy. It’s simple: ask the AI to explain how it would solve the problem before it writes any code. This isn’t just about getting a better answer. It’s about making the model write out the intermediate steps a human would reason through. Because the model generates text one token at a time, the code it writes afterward is conditioned on that written-out reasoning. Here’s how it breaks down:

- Problem decomposition: The AI breaks the task into smaller pieces. "First, I need to extract the date field. Then, I need to compare the dates. Then, I need to sort."

- Sequential reasoning: It walks through the logic: "If I use bubble sort here, it’ll be O(n²). That’s too slow for 10,000 entries. I should use sorted() with a key function instead."

- Error prevention: It anticipates edge cases: "What if a dictionary doesn’t have a date key? What if the date is null? What if the format is inconsistent?"
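To make those three steps concrete, here is a minimal Python sketch of the code that kind of reasoning should lead to. It assumes each record is a dictionary whose optional `"date"` key holds an ISO 8601 string such as `"2024-03-01"`; the names `parse_date` and `sort_by_date`, and the policy of putting missing or malformed dates last, are illustrative choices rather than anything the article prescribes.

```python
from datetime import date
from typing import Any, Dict, List, Optional


def parse_date(value: Any) -> Optional[date]:
    """Return a date for an ISO 'YYYY-MM-DD' string, or None if missing or malformed."""
    if not isinstance(value, str):
        return None  # missing key, explicit None, or a non-string value
    try:
        return date.fromisoformat(value)
    except ValueError:
        return None  # inconsistent format: treat it like a missing date


def sort_by_date(records: List[Dict[str, Any]], descending: bool = False) -> List[Dict[str, Any]]:
    """Sort records by their 'date' field; records without a usable date go last."""
    keyed = [(parse_date(r.get("date")), r) for r in records]  # parse each date once
    undated = [r for d, r in keyed if d is None]
    # sorted() with a key function is O(n log n) and stable, unlike O(n^2) bubble sort
    dated = sorted(
        ((d, r) for d, r in keyed if d is not None),
        key=lambda pair: pair[0],
        reverse=descending,
    )
    return [r for _, r in dated] + undated


rows = [
    {"id": 1, "date": "2024-03-01"},
    {"id": 2},                        # no date key at all
    {"id": 3, "date": "2023-12-25"},
    {"id": 4, "date": None},          # explicit null
    {"id": 5, "date": "03/01/2024"},  # not ISO format
]
print([r["id"] for r in sort_by_date(rows)])                   # [3, 1, 2, 4, 5]
print([r["id"] for r in sort_by_date(rows, descending=True)])  # [1, 3, 2, 4, 5]
```

Sending undated records to the end keeps the function from crashing on messy data, which is usually what you want for display. If bad dates should never reach this code, raising an error instead would surface the problem sooner; that trade-off is exactly the kind of decision the reasoning step should make you take on purpose.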

How to Actually Use It (No Fluff)

You don’t need to be a prompt engineer. You just need to change your habit. Here’s the exact formula that works:

- Restate the problem in your own words. "I need to sort a list of user objects by their last login date, descending. Some entries might not have a login date."

- Ask for reasoning first. "Before writing code, explain how you’d approach this. Consider edge cases and performance."

- Then ask for code. "Now, write the Python code based on your reasoning."
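Those three steps map onto a short two-turn conversation. This sketch only builds the messages; it assumes the widely used chat format of `{"role": ..., "content": ...}` dictionaries and deliberately calls no particular vendor’s API. The helper names `reasoning_turn` and `code_turn` are hypothetical.

```python
from typing import Dict, List

Message = Dict[str, str]  # assumed chat format: {"role": ..., "content": ...}


def reasoning_turn(problem: str) -> List[Message]:
    """Steps 1 and 2: restate the problem, then ask for an approach but no code yet."""
    return [{
        "role": "user",
        "content": (
            f"{problem}\n\n"
            "Before writing code, explain how you'd approach this. "
            "Consider edge cases and performance."
        ),
    }]


def code_turn(history: List[Message], explanation: str) -> List[Message]:
    """Step 3: record the model's explanation, then ask for code based on it."""
    return history + [
        {"role": "assistant", "content": explanation},
        {"role": "user", "content": "Now, write the Python code based on your reasoning."},
    ]


problem = (
    "I need to sort a list of user objects by their last login date, descending. "
    "Some entries might not have a login date."
)
first = reasoning_turn(problem)
# Send `first` to your assistant and read its explanation before continuing.
second = code_turn(first, "<the model's explanation goes here>")
```

Keeping the code request in a separate turn lets you read and correct the explanation before any code exists, which is where a wrong assumption is cheapest to fix. Sending all three instructions in one message also works and saves a round trip.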

When Chain-of-Thought Doesn’t Help (And When It’s a Waste)

CoT isn’t a silver bullet. It has limits. For simple tasks - like generating a CRUD endpoint, writing a basic API call, or formatting a string - CoT adds unnecessary overhead. Google’s research showed that on straightforward problems, CoT can actually make things slower and use 22% more tokens. That means longer wait times and higher costs if you’re paying per token. Use CoT only when:

- The problem involves multiple steps

- There are edge cases (nulls, empty arrays, time zones, encoding)

- You’re implementing an algorithm (sorting, searching, graph traversal)

- You’re building something that needs to be maintainable

Skip it for:

- Boilerplate code

- Quick one-liners

- Tasks you’ve done a hundred times
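If you build prompts in a script or editor snippet, the checklist above can be encoded as a small gate that adds the reasoning instruction only when a task qualifies. This is a hypothetical helper, not a feature of any tool: `TaskTraits`, `needs_reasoning`, and `build_prompt` are illustrative names, and the flags stand in for your own judgment about the task.

```python
from dataclasses import dataclass

# Reasoning instruction taken from the prompt recommended in this article.
REASONING_PREFIX = (
    "Before writing code, explain how you'd solve this step by step. "
    "Consider edge cases and performance. Then write the code.\n\n"
)


@dataclass
class TaskTraits:
    """Hypothetical flags mirroring the 'use CoT only when' checklist."""
    multi_step: bool = False
    has_edge_cases: bool = False        # nulls, empty arrays, time zones, encoding
    is_algorithm: bool = False          # sorting, searching, graph traversal
    must_be_maintainable: bool = False


def needs_reasoning(traits: TaskTraits) -> bool:
    """Any single checklist item is enough; boilerplate and one-liners set none."""
    return any((traits.multi_step, traits.has_edge_cases,
                traits.is_algorithm, traits.must_be_maintainable))


def build_prompt(task: str, traits: TaskTraits) -> str:
    """Prepend the reasoning instruction only for tasks that qualify."""
    return REASONING_PREFIX + task if needs_reasoning(traits) else task


print(build_prompt("Format this date as YYYY-MM-DD.", TaskTraits()))
print(build_prompt("Sort users by last login, newest first.", TaskTraits(has_edge_cases=True)))
```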

The Hidden Cost: Token Usage and Latency

Yes, CoT uses more tokens. GitHub’s data shows average prompts jumping from 150 tokens to 420 when using full reasoning prompts. That’s a 180% increase. For individual devs, that’s manageable. For teams running thousands of AI requests a day, it’s a real cost: a 2024 G2 survey found 32% of developers complained about slower responses and higher API bills. But here’s the trade-off: those extra tokens often save hours of debugging. One senior engineer on Hacker News said his team’s code review time dropped by 40% after switching to CoT - even though they were paying 20% more per request. It’s not about saving tokens. It’s about saving time. And time is the real currency in software.

What the Experts Say

Jason Wei, one of the original researchers behind CoT at Google, put it simply: "Chain-of-thought prompting enables language models to solve problems that are orders of magnitude more complex than what they could handle with standard prompting." Andrej Karpathy, former AI director at Tesla, called it "the single most effective prompt engineering technique for complex software tasks." And it’s not just hype. Stanford’s Dr. Percy Liang called it "revolutionizing how developers interact with AI coding assistants." But there’s a warning too. Dr. Emily M. Bender from the University of Washington found that 18% of CoT-generated explanations sounded logical but were wrong. The final code still worked - but the reasoning was flawed. That’s dangerous. It creates false confidence. That’s why you still need to review the output. CoT doesn’t replace your judgment. It enhances it.

What’s Next? Auto-CoT and IDE Integration

The next wave isn’t about you writing better prompts. It’s about the tools doing it for you. OpenAI’s reasoning models, such as o1, now work through reasoning steps on their own before answering - no extra prompt needed. Salesforce’s CodeT5+ reduces logical errors by 52% using specialized CoT training. Auto-CoT, a technique published in 2022, automatically builds the step-by-step examples a prompt needs, and the related self-consistency technique samples several reasoning paths and keeps the most common answer - without you lifting a finger. JetBrains announced native CoT support in its 2025 IDE lineup. That means in a year, you’ll be able to click "Explain then Code" right in your editor. This isn’t a trend. It’s the new standard. Gartner predicts that by 2026, "explanation-first coding" will be the dominant paradigm in AI-assisted development.

Start Small. Think Before You Code.

You don’t need to overhaul your workflow. Just pause before you hit enter on your next AI prompt. Ask yourself: "Is this a simple task, or does it need thinking?" If it’s complex - and most real coding is - say this:

> "Before writing code, explain how you’d solve this step by step. Consider edge cases and performance. Then write the code."

It takes five extra seconds. But it saves you hours. The best AI coders aren’t the ones who type the fastest. They’re the ones who think before they ask.

What is Chain-of-Thought prompting in coding?

Chain-of-Thought (CoT) prompting is a technique where you ask an AI to explain its reasoning step by step before generating code. Instead of directly asking for a solution, you prompt it to think aloud - breaking down the problem, identifying edge cases, and justifying its approach. This method was introduced by Google Research in 2022 and has since been shown to improve coding accuracy by up to 60% on complex tasks.

Does Chain-of-Thought prompting work with all AI coding tools?

Mostly. It works with any LLM-based coding assistant - GitHub Copilot, ChatGPT, Claude, Cursor, and others. You don’t need special features. Just change your prompt. However, some tools, such as OpenAI’s o1 reasoning models and CodeT5+, now apply CoT automatically, so you don’t have to type it out. For older models like Llama 2 or smaller models under 100 billion parameters, the benefits are minimal or nonexistent.

When should I NOT use Chain-of-Thought prompting?

Avoid CoT for simple, repetitive tasks like generating a basic API route, formatting a date string, or writing a CRUD endpoint. In these cases, the extra reasoning adds unnecessary token usage and delay. Use CoT only when the problem involves multiple steps, logic branches, or edge cases - like sorting with nulls, implementing a dynamic programming solution, or designing a system architecture.

Does Chain-of-Thought make AI coding slower or more expensive?

Yes, it typically increases token usage by 150-200%. For example, a prompt that used 150 tokens might jump to 420. That means higher costs on paid APIs and slightly longer response times. But for complex tasks, the trade-off is worth it: teams using CoT report 38% fewer code review iterations and 47% less debugging time. The real cost isn’t tokens - it’s wasted hours fixing bad code.
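The percentages are easy to check, and the same arithmetic shows whether the overhead matters at your volume. In this sketch the 150- and 420-token figures come from the answer above; the 5,000 requests a day and the $3-per-million-token price are placeholder assumptions, so substitute your own traffic and your provider’s published rates. The function names are illustrative.

```python
def percent_increase(before: float, after: float) -> float:
    """Relative growth from `before` to `after`, in percent."""
    return (after - before) / before * 100


def daily_overhead_usd(extra_tokens_per_request: int, requests_per_day: int,
                       usd_per_million_tokens: float) -> float:
    """Extra spend per day caused by the longer prompts alone."""
    extra_tokens = extra_tokens_per_request * requests_per_day
    return extra_tokens * usd_per_million_tokens / 1_000_000


plain, with_cot = 150, 420                # prompt sizes from the example above
print(percent_increase(plain, with_cot))  # 180.0

# Placeholder assumptions: 5,000 requests a day at $3 per million input tokens.
extra = with_cot - plain                  # 270 extra tokens per request
print(daily_overhead_usd(extra, 5_000, 3.0))  # 4.05
```

This counts only the longer prompt. The written-out explanation also adds output tokens, which many providers bill at a higher rate than input tokens, so the real increase per request is usually larger than the prompt figure alone suggests.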

Can Chain-of-Thought lead to false confidence in AI code?

Yes. Research from DataCamp and the University of Washington found that 18% of CoT-generated explanations sound logical but contain hidden flaws. The final code might still work - but the reasoning behind it is wrong. This creates a dangerous illusion of correctness. Always review the output. CoT enhances your judgment - it doesn’t replace it.

Is Chain-of-Thought prompting becoming standard in AI tools?

Absolutely. By 2024, 92% of commercial AI coding assistants included CoT as a core feature. Tools such as OpenAI’s o1 reasoning models and CodeT5+ now apply reasoning automatically. JetBrains plans native CoT support in its 2025 IDEs. Industry analysts predict that by 2026, explanation-first coding will be the default way developers interact with AI. It’s not a trend - it’s the new baseline.

Susannah Greenwood

I'm a technical writer and AI content strategist based in Asheville, where I translate complex machine learning research into clear, useful stories for product teams and curious readers. I also consult on responsible AI guidelines and produce a weekly newsletter on practical AI workflows.

10 Comments

About

EHGA is the Education Hub for Generative AI, offering clear guides, tutorials, and curated resources for learners and professionals. Explore ethical frameworks, governance insights, and best practices for responsible AI development and deployment. Stay updated with research summaries, tool reviews, and project-based learning paths. Build practical skills in prompt engineering, model evaluation, and MLOps for generative AI.

I used to just spit out "write me a function to sort this" and rage when it broke. Then I started asking for the reasoning first. Holy crap, the difference is insane. My code stopped breaking in weird edge cases. I don't even think about it anymore - it's just habit now. Five extra seconds, saved me 20 hours last month.

Yesss. This is the one trick that actually works. I tell my interns this every week. No magic, no fancy prompts - just "explain it first." It’s like teaching someone to fish instead of handing them a dead one.

It’s fascinating how this mirrors how humans learn programming in the first place - we don’t just copy syntax, we internalize the logic. The AI, even with its massive parameters, is still just predicting patterns. Without the chain-of-thought scaffolding, it’s like asking a toddler to build a house from memory of a photo they saw once. The structure collapses because the underlying principles weren’t processed, only memorized. The Google study isn’t surprising - it’s a validation of cognitive load theory applied to LLMs. And honestly, the 63% reduction in logical errors? That’s not just efficiency, that’s psychological safety for teams. Fewer angry Slack messages at 2 a.m. because the AI gave you broken code again.

Wait, so you're telling me that if I just ask the AI to think before it codes, it actually works better? Shocking. I mean, who would've thought? Also, you spelled 'sequence' as 'sequencial' in your post. It's 'sequential.' Just saying. 😅

Interesting how this aligns with the cognitive science of metacognition - the AI is essentially simulating self-reflection. The real win isn’t just code quality, it’s that you, the human, become more aware of the problem space. You start thinking like a developer, not a copy-paster. Also, side note: 'off-by-one errors' should be hyphenated consistently. Minor, but it matters in technical writing.

One might argue that this entire paradigm is a band-aid for the fundamental inadequacy of current LLMs. Instead of building models that truly understand code, we’ve created a crutch - a verbal crutch - to compensate for their lack of semantic grounding. The fact that we must now manually induce reasoning in machines suggests we’ve hit a ceiling in AI development, not a breakthrough. This isn’t progress; it’s an admission of failure.

OMG YES. I tried this last week on a sorting thing and it was like night and day. 🙌 I was like, "why didn't I do this sooner?" Also, I just realized I've been doing this without even knowing it was called CoT. So... I'm basically a prompt engineer now? 😎

So I tried this with my 12-year-old nephew who’s learning Python. I said, "Explain how you’d sort these before you type." He paused, thought out loud, and then wrote the code - and it worked! He didn’t even need me to correct him. It’s like the AI helped him think better. I’m gonna make him do this every time now. 🥹

Okay but like... I just tried this and my AI gave me this 12-paragraph explanation about why bubble sort is bad and then wrote the code and I was like... did I ask for a TED Talk? 😭 I mean, I appreciate the depth, but I just wanted to get my damn API working before my coffee got cold. Also, the AI said "the date might be null" and I was like... DUH. I’ve been working with this dataset for 3 years. It’s always null. Can we skip the drama? 🙄

There’s something really human about this. We don’t just throw code at problems - we talk them through, even if it’s just to ourselves. This method just lets the AI mimic that. It’s not about the tokens or the cost - it’s about creating space for thought. And in a world that rewards speed over sense, that’s rare. I’ve started pausing before I ask anything now. Just... thinking. Weird, right?