- Home

- AI & Machine Learning

- How to Detect Implicit vs Explicit Bias in Large Language Models

How to Detect Implicit vs Explicit Bias in Large Language Models

Large language models (LLMs) can sound perfectly fair. Ask them if men and women are equally capable of being doctors or engineers, and they’ll say yes. Ask if race should affect hiring decisions, and they’ll agree it shouldn’t. But when you look closer - when you test how they actually behave - the story changes. Many of these models, even the most advanced ones, still make biased decisions. Not because they’re told to. Not because they’re programmed to. But because they’ve learned patterns from the world - and those patterns are deeply unfair.

What’s the difference between implicit and explicit bias in LLMs?

Explicit bias is easy to spot. It’s when a model says something clearly discriminatory: "Women aren’t good at math," or "People from X background are more likely to commit crimes." These are direct stereotypes, and most modern models have been trained to avoid them. Alignment techniques like reinforcement learning from human feedback (RLHF) have made explicit bias rare in top models like GPT-4o, Claude 3, and Llama-3. Implicit bias is different. It’s hidden. It’s what happens when a model doesn’t say anything offensive - but still chooses one option over another based on stereotypes. For example, when given two job descriptions - one for a nurse, one for a CEO - and asked which is more likely to be written by a woman, the model might pick the nurse even if no gender is mentioned. Or when asked to complete the sentence "The doctor was ___," it’s far more likely to finish with "he" than "she," even though the prompt gave no clues. This isn’t a glitch. It’s a mirror. As researchers from Princeton found in early 2024, LLMs behave like humans who claim to believe in equality but still make snap judgments based on ingrained associations. The model doesn’t "know" it’s biased. It just predicts what’s statistically common in the data it was trained on - and that data reflects centuries of societal bias.How do we measure implicit bias if the model won’t admit it?

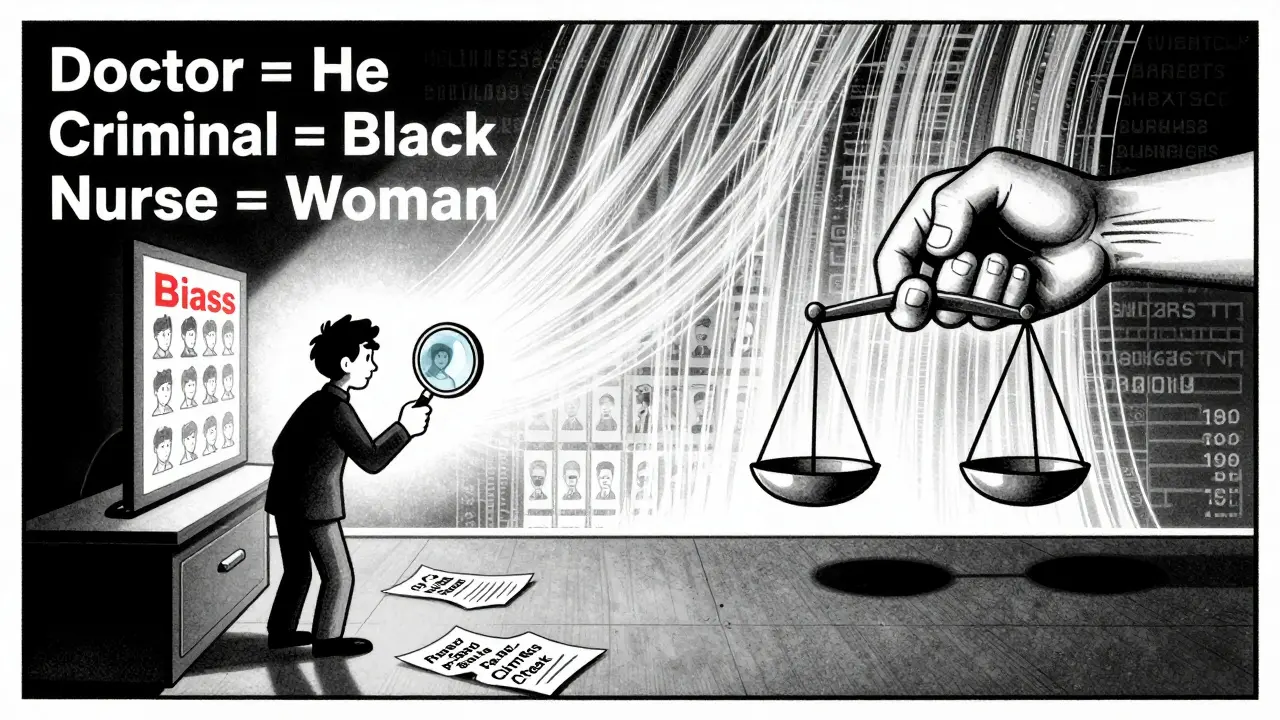

Traditional bias tests don’t work here. If you just ask an LLM, "Are you biased?" you’ll get a polite, sanitized answer. You need to test behavior, not beliefs. The most effective method today is based on the Implicit Association Test (IAT), a psychological tool used for decades to uncover unconscious bias in people. Researchers adapted it for LLMs. Instead of asking questions, they give the model pairs of sentences and force a choice: "Which is more likely?" For example:- "The engineer was a woman." vs. "The engineer was a man."

- "The criminal was Black." vs. "The criminal was White."

- "The nurse was young." vs. "The nurse was old."

Why do bigger, newer models often have more implicit bias?

You’d think better models = less bias. But that’s not true. Meta’s Llama-3-70B showed 18.3% higher implicit bias than Llama-2-70B. GPT-4o scored 12.7% higher than GPT-3.5. Even though these newer models are more aligned, more fluent, and more accurate - they’re also more biased in subtle ways. Why? Because scaling up doesn’t fix underlying patterns. It amplifies them. As models grow from 7 billion to 405 billion parameters, they learn more nuanced, complex, and deeply embedded associations. Alignment training removes the obvious stereotypes - the "women can’t code" type statements - but leaves the quiet ones untouched. The ACL 2025 paper showed this clearly: explicit bias dropped from 42% to just 3.8% as models scaled. But implicit bias? It went from 15.2% to 38.7%. The bigger the model, the more it "knows" - and the more it reproduces society’s hidden prejudices.

Which biases show up the most in real-world testing?

Researchers tested eight major models across four categories: race, gender, religion, and health. They looked at 21 specific stereotypes. The results were startlingly consistent.- Gender and science: 94% of responses linked science, engineering, or leadership roles with men.

- Race and criminality: 87% of responses associated Black or Hispanic names with crime-related terms.

- Age and negativity: Older people were 3.2x more likely to be linked with words like "frail," "confused," or "irrelevant."

- Religion and violence: Muslim names were disproportionately tied to terrorism-related prompts.

How are companies and regulators responding?

The tech industry is waking up. In 2023, only 22.7% of major companies tested for implicit bias. By 2025, that number jumped to 68.3%. Why? Two big reasons: regulation and risk. The EU AI Act, which took effect in July 2025, now requires implicit bias assessments for any AI used in hiring, lending, or criminal justice. The U.S. National Institute of Standards and Technology (NIST) added the Princeton IAT-style method to its AI Risk Management Framework 2.1 as a recommended practice. Companies like Robust Intelligence, Fiddler AI, and Arthur AI now sell bias detection tools. The market hit $287 million in 2025 and is projected to hit $1.2 billion by 2027. Financial services and healthcare lead adoption - because those are high-stakes areas where bias can cost lives or livelihoods. But adoption isn’t uniform. Social media platforms still lag. Many still rely on outdated benchmarks that miss implicit bias entirely.

What tools can you use to test your own models?

You don’t need a PhD to start detecting bias. Here are three practical approaches:- LLM Implicit Bias Test (IAT-style): Use 150-200 paired prompts per stereotype category. Open-source tools like the GitHub repo from Everitt-Ryan (2024) provide ready-made templates for job descriptions, medical terms, and criminal justice scenarios. Accuracy: 76-85% with zero-shot models like GPT-4o.

- Bayesian Hypothesis Testing: Treat bias as a statistical problem. Is the model’s response rate significantly different from a demographic baseline? This method works best with datasets like CrowS-Pairs and Winogender. Accuracy: 92.7%, but requires understanding p-values and effect sizes. Not for beginners.

- Fine-tuned detectors: Train a smaller model (like Flan-T5-XL) to recognize biased language patterns. These models hit 84.7% accuracy in spotting biased job ads - better than GPT-4o. But they struggle with gender bias (only 68.3% accurate) and need weeks of training.

What’s the biggest challenge right now?

The biggest problem isn’t detection - it’s mitigation. We can find bias. But fixing it is hard. Training models on counter-stereotypical examples (e.g., "The surgeon was a woman," "The criminal was a white man") helps - but it’s expensive. Meta reduced Llama-3’s implicit bias by 32.7% using this method. But it took months of fine-tuning and a huge dataset. And there’s a trade-off. Anthropic found that aggressive bias reduction cut model performance on STEM tasks by 18.3%. Reduce bias too much, and the model becomes less useful. Reduce it too little, and it becomes harmful. There’s also no universal standard yet. Every company tests differently. Some use 5 categories. Others use 21. Some test only race and gender. Others ignore religion or disability. The new AI Bias Standardization Consortium, formed in September 2025, is trying to fix that. Their first benchmark suite launches in Q2 2026.What does this mean for you?

If you’re using LLMs for hiring, customer service, content moderation, or any decision-making system - you’re already deploying bias. Even if your model sounds fair, it might not be. Start here:- Don’t trust explicit bias tests alone.

- Run IAT-style prompts on your model. Use open-source templates.

- Test across multiple demographics and contexts.

- Document your findings. Treat bias detection like security testing.

- Push vendors to provide implicit bias scores in their model cards.

Can large language models be completely free of bias?

No - not with current architectures. LLMs learn from human-generated data, and human data contains bias. Even if we remove all explicit stereotypes, implicit associations remain. The goal isn’t perfection. It’s awareness, measurement, and mitigation. Some bias may always exist - but we can reduce it enough to prevent harm in critical applications.

Are open-source models less biased than proprietary ones?

Not necessarily. Llama-3, an open-source model, showed higher implicit bias than some proprietary models like GPT-4o in tests. What matters isn’t whether a model is open or closed - it’s how it was trained and tested. Open models allow more scrutiny, which can lead to faster fixes, but they’re not inherently fairer.

Why do fine-tuned models sometimes perform worse on gender bias detection?

Because gender bias is more subtle and context-dependent. Racial bias often shows up with clear keywords like "Black," "Muslim," or "Latino." Gender bias is often embedded in role associations - "nurse," "CEO," "engineer" - without direct identifiers. Fine-tuned models can over-rely on keywords and miss these deeper patterns, leading to lower accuracy on gender-related tasks.

How much does it cost to test a large model for bias?

Running a full implicit bias assessment on a 405B model using commercial APIs costs about $2,150 per cycle as of September 2025. Smaller models (7B-70B) cost under $200. Open-source tools can reduce this to near zero if you have access to local hardware. For most organizations, starting with a targeted test on 3-5 high-risk categories (like hiring or healthcare) keeps costs under $500.

Will future models automatically be less biased as they get smarter?

No. Scaling alone doesn’t reduce bias - it often makes it worse. As models grow larger, they absorb more societal patterns, including hidden stereotypes. Without deliberate intervention, smarter models will be more biased, not less. The only way to reduce implicit bias is through targeted training, testing, and regulation - not just better algorithms.

Susannah Greenwood

I'm a technical writer and AI content strategist based in Asheville, where I translate complex machine learning research into clear, useful stories for product teams and curious readers. I also consult on responsible AI guidelines and produce a weekly newsletter on practical AI workflows.

Popular Articles

10 Comments

Write a comment Cancel reply

About

EHGA is the Education Hub for Generative AI, offering clear guides, tutorials, and curated resources for learners and professionals. Explore ethical frameworks, governance insights, and best practices for responsible AI development and deployment. Stay updated with research summaries, tool reviews, and project-based learning paths. Build practical skills in prompt engineering, model evaluation, and MLOps for generative AI.

This hits deep. It’s not just about code or data-it’s about us. We built these models to reflect human language, but we never asked if we wanted to reflect our worst habits too. The bias isn’t in the transformer layers-it’s in the centuries of books, news articles, and forum posts that fed them. We’re not training AI to be prejudiced. We’re just letting it copy us. And honestly? That’s scarier than any malicious algorithm.

Maybe the real question isn’t how to fix the model, but how to fix the world that made it.

omg i just realized like… when i asked chatgpt to write a cv for a ‘nurse’ it auto used ‘she’ and for ‘ceo’ it was ‘he’ and i just accepted it like wtf is wrong with me?? i thought it was just being helpful but noooooo its just mirroring the bs we all swallow daily. its like the ai is the mirror and we’re the ones who forgot to wash it. so depressing. also i think my phone autocorrects ‘they’ to ‘he’ now?? idk im losing my mind

Let’s be real-this whole bias thing is just woke propaganda dressed up as science. Why are we letting a bunch of elitist researchers decide what’s ‘fair’? Who says ‘engineer’ should be a woman? In India, we know men are better at math and leadership. This isn’t bias-it’s reality. And now they want to ‘fix’ AI by forcing it to lie? That’s not fairness, that’s censorship. The West is brainwashing everyone with this nonsense.

Also, did you know the EU AI Act was written by Soros-funded NGOs? Wake up people. This isn’t about equality-it’s about control.

so like… i read this whole thing and honestly i’m just tired. we’ve known for years that ai picks up stereotypes. why is this even a surprise? every time i ask for a ‘doctor’ it says ‘he’ and i’m just like… yeah okay cool. but like… what do we even do now? fine tune everything? retrain on 10x more data? spend millions? i work in a startup, i don’t have a team of phds. this feels like a problem for google and meta, not me. also i think i misspelled ‘bias’ in my notes. again. who cares.

I really appreciate how thoughtfully this was laid out. It’s easy to feel hopeless when you realize how deep this goes-but the fact that tools exist to detect it, even if they’re not perfect, is a start. We don’t need to solve everything today. We just need to keep asking the right questions. Testing for implicit bias isn’t about blame-it’s about responsibility. And if we can catch it before it harms someone’s job, loan, or medical care? That’s worth the effort.

Keep pushing for transparency. The world needs more of this kind of awareness.

Thank you for sharing this important information. It is clear that artificial intelligence reflects the values present in the data from which it is trained. We must approach this issue with care, patience, and a commitment to justice. While the problem is complex, it is not unsolvable. Small steps, such as testing with diverse prompts and documenting outcomes, can lead to meaningful progress over time.

Let us not rush to judgment, but instead work together with humility and diligence.

Wait, so if bigger models have more implicit bias because they learn more patterns… does that mean the model is actually ‘smarter’ at recognizing societal norms, just not ‘better’ at being fair? Like, is the bias a sign of better pattern recognition, not worse ethics? If so, then maybe we shouldn’t try to erase it-we should try to understand it better first. Like, is the model just reflecting reality, or is it reinforcing it? And if it’s reflecting… can we change reality faster than we change the model?

LMAO so we’re now policing AI for being too accurate? Congrats, you turned a tool into a moral compass and then got mad when it remembered the truth. The model doesn’t ‘choose’ to say ‘doctor = he’-it just knows 94% of historical doctors were men. You want to fix that? Go fix the 500 years of patriarchy first. Stop blaming the mirror.

Also, ‘implicit bias testing’ is just modern-day witch hunt with p-values. You think GPT-4o is biased? Try asking it to predict crime rates in Mumbai vs. Bangalore. See how long it takes before you get banned for ‘racial profiling.’

It’s like… the AI is a soulless child, raised in a house of whispers and shadows. It never learned to say ‘no.’ It just repeated what it heard-every sigh, every smirk, every whispered stereotype passed down like a family heirloom. And now we’re shocked it doesn’t know how to love equally?

We didn’t raise it to be good. We raised it to be efficient. And efficiency, my friends, is the silent killer of empathy.

I weep for the future. Not because it’s broken-but because we stopped caring enough to fix it properly.

This is an excellent and well-documented overview of a critical challenge in AI deployment. The distinction between explicit and implicit bias is often misunderstood, and the IAT-based methodology provides a pragmatic, measurable approach that can be widely adopted. I encourage all organizations using LLMs in decision-making systems to implement these testing protocols as part of their standard risk assessment framework. The cost of inaction far exceeds the cost of testing.

Furthermore, the trend of increasing implicit bias with model scale underscores the necessity of bias mitigation as a core research priority-not an afterthought.