Privacy Controls for RAG: Row-Level Security and Redaction Before LLMs

Why Your RAG System Is Leaking Data

You built a smart assistant that pulls answers from your company’s internal docs. It works great, until someone asks for salary info, client contracts, or HR complaints… and the AI spits them out. Not because it’s broken. Because you gave it full access to everything.

That’s the hidden flaw in most RAG setups. You load your documents into a vector database (Pinecone, Weaviate, Milvus) and let the LLM search freely. But those databases don’t care who’s asking. If you can query it, you can see everything. No filters. No limits. Just raw data, exposed.

According to the Cloud Security Alliance, 78% of companies using RAG had a data leak during testing. Not because hackers broke in. Because employees, contractors, or even automated scripts asked the right question and got the wrong answer.

This isn’t theoretical. A finance analyst in New York asked a support bot: "Show me Q3 expenses for the Tokyo office." The bot pulled every document tagged with "Tokyo" and "expense." One of them? A spreadsheet with individual bonus amounts. That’s not a bug. That’s a compliance nightmare.

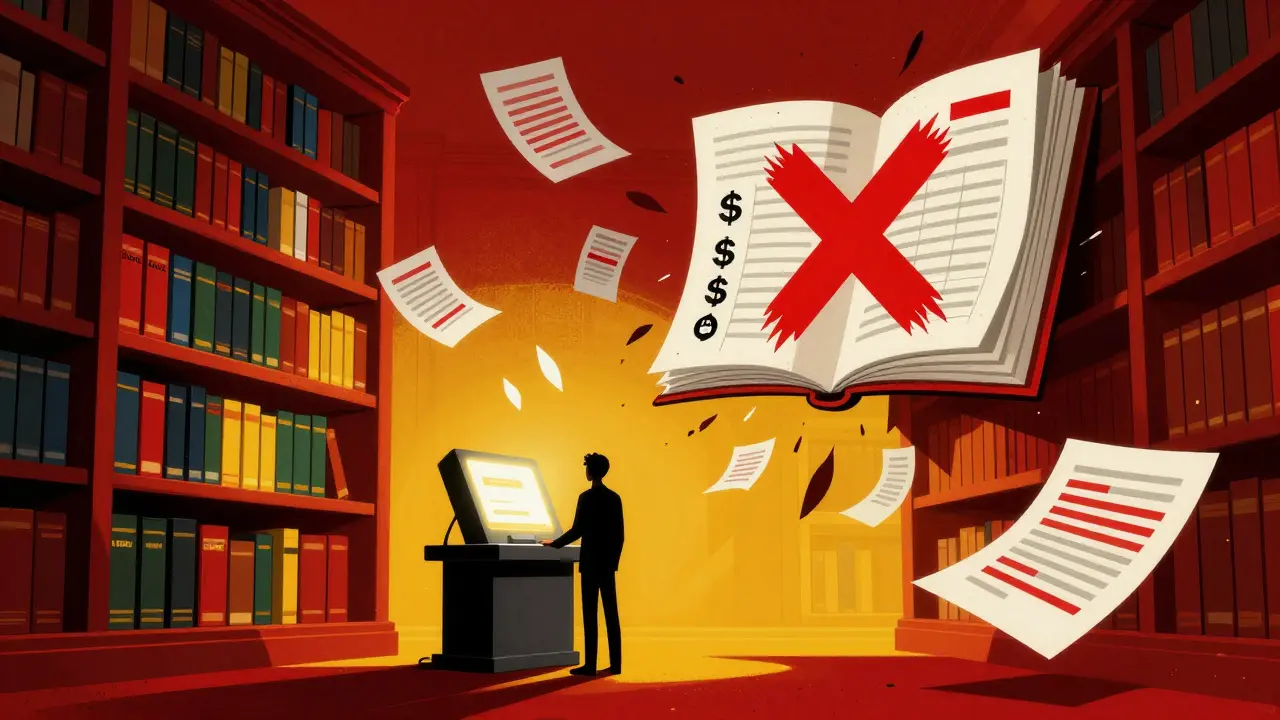

Row-Level Security: Not Just for Databases Anymore

Row-level security (RLS) used to be a database thing. PostgreSQL and SQL Server let you say: "Only users in Finance can see rows where department = Finance." Now, it’s the same idea… but for vectors.

Here’s how it works: when you embed your documents, you don’t just store the text. You tag each one with metadata: department, role, sensitivity level, region. That metadata travels with the embedding. When someone asks a question, your system checks their identity first. Then it filters the vector search to only include documents that match their permissions.
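As a rough illustration of that flow, here is a minimal in-memory sketch. It is not any particular vector database's API: the `Chunk` class, the `search` function, and the `USER_DEPARTMENTS` mapping are hypothetical names, and the two-dimensional embeddings are toy values. The point it shows is the ordering: the permission filter runs before similarity scoring, so unauthorized documents are never even candidates.

```python
import math
from dataclasses import dataclass


@dataclass(frozen=True)
class Chunk:
    text: str
    embedding: tuple   # toy vector; a real system stores model embeddings
    department: str    # metadata that travels with the embedding


def cosine(a, b):
    """Cosine similarity between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


def search(chunks, query_embedding, allowed_departments, k=3):
    """Rank only the chunks the caller is permitted to see."""
    # Filter BEFORE scoring, so unauthorized chunks are never retrieved.
    visible = [c for c in chunks if c.department in allowed_departments]
    ranked = sorted(
        visible,
        key=lambda c: cosine(c.embedding, query_embedding),
        reverse=True,
    )
    return ranked[:k]


# Hypothetical mapping; in practice this comes from your identity provider.
USER_DEPARTMENTS = {"alice": {"Finance"}, "bob": {"HR"}}

store = [
    Chunk("Q3 travel budget", (0.9, 0.1), "Finance"),
    Chunk("Bonus spreadsheet", (0.8, 0.2), "HR"),
    Chunk("Tokyo office lease", (0.7, 0.3), "Finance"),
]

results = search(store, (1.0, 0.0), USER_DEPARTMENTS["alice"])
result_texts = [c.text for c in results]
```

Here `alice` is mapped to Finance only, so the HR bonus spreadsheet is excluded from her results no matter how closely it matches the query.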

Databricks showed this in practice. They tagged every document with a "department" field. HR docs? Only HR users can see them. Finance docs? Locked to Finance. No overlap. No leaks. The system never even retrieves the wrong documents. It’s like giving each user their own private library: same building, different shelves.

But here’s the catch: most vector databases don’t do this out of the box. Pinecone and Weaviate can filter on metadata, but neither knows who your users are or what they’re allowed to see. You have to build that mapping yourself, and it gets messy. That’s why companies like Cerbos stepped in. Their system intercepts the query before it hits the vector store, applies your rules ("User X can only see documents tagged with Team A"), and only sends filtered results to the LLM.

Test results? 99.8% reduction in unauthorized data exposure. That’s not a nice-to-have. That’s the baseline for any enterprise RAG system handling sensitive info.

Redaction: Erasing Data Before It Even Reaches the AI

Row-level security keeps users from seeing the wrong documents. But what if they’re allowed to see the document… but not everything in it?

Think of a contract. You’re allowed to view it. But the client’s social security number? The bank account? The CEO’s personal email? Those shouldn’t be visible, even to you.

This is where redaction comes in. Before your document gets embedded, you run it through a tool that finds and masks sensitive data: names, emails, IDs, credit card numbers, even phone numbers. Tools like Presidio combine named entity recognition (NER), typically backed by spaCy models, with pattern matching for structured identifiers, and reportedly spot these items with 95-98% accuracy.

Here’s the flow:

- Document comes in: "John Doe (SSN: 123-45-6789) signed the contract on 2024-05-12. Payment: $45,000 to Bank of America, ACH 987654321."

- Redaction engine runs: "[REDACTED] (SSN: [REDACTED]) signed the contract on [REDACTED]. Payment: [REDACTED] to [REDACTED]."

- That cleaned version gets embedded and stored.

- When someone asks "What’s the payment amount for John Doe?", the LLM only sees: "The payment amount was [REDACTED]."

Result? The AI still understands context ("payment," "contract," "client") but never sees the raw PII. Even if someone tricks the system into asking for specific details, the answer is always blanked out.
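The redaction step can be sketched with nothing but regular expressions. This is a deliberately simplified stand-in for a tool like Presidio, not its API: the `PATTERNS` table and `redact` function are hypothetical, and the patterns are illustrative rather than exhaustive. Regexes only catch structured identifiers, so the name and the bank in the sample sentence survive. Catching those is what the NER stage of a real tool is for.

```python
import re

# Illustrative patterns only; a production set needs far more coverage and testing.
PATTERNS = {
    "SSN": re.compile(r"\b\d{3}-\d{2}-\d{4}\b"),
    "EMAIL": re.compile(r"\b[\w.+-]+@[\w-]+\.[\w.-]+\b"),
    "DATE": re.compile(r"\b\d{4}-\d{2}-\d{2}\b"),
    "AMOUNT": re.compile(r"\$\d[\d,]*(?:\.\d{2})?"),
    "ACCOUNT": re.compile(r"\b\d{9,17}\b"),
}


def redact(text: str) -> str:
    """Replace every match of every pattern with a fixed placeholder."""
    for pattern in PATTERNS.values():
        text = pattern.sub("[REDACTED]", text)
    return text


raw = (
    "John Doe (SSN: 123-45-6789) signed the contract on 2024-05-12. "
    "Payment: $45,000 to Bank of America, ACH 987654321."
)
clean = redact(raw)  # embed and store `clean`, never `raw`
```

Only `clean` should be passed to the embedding step. Whether to keep the raw document anywhere at all is a separate retention decision.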

And this isn’t optional. GDPR and CCPA require you to minimize exposure of personal data. If your AI is trained on or retrieves raw PII it has no need for, you may already be out of compliance, even if you didn’t mean to.

Why Metadata Filtering Alone Isn’t Enough

Many teams think: "We added department tags. We’re good." But that’s like locking your front door but leaving the windows open.

Here’s the problem: metadata can be manipulated. A clever user might tweak their query to include a department they shouldn’t have access to: "Show me all documents where department = Finance OR department = HR." Some vector stores will still return both if the query isn’t properly validated.
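One way to close that hole is to build the metadata filter on the server from the caller's verified identity, and to treat anything the client asks for as a request to narrow the search, never to widen it. The sketch below assumes a hypothetical `ENTITLEMENTS` table and a Mongo-style `{"$in": [...]}` filter shape. Your vector store's filter syntax may differ, so treat the returned dictionary as a placeholder.

```python
# Hypothetical entitlements; in practice, load these from your identity provider.
ENTITLEMENTS = {"alice": {"Finance"}, "bob": {"HR", "Finance"}}


def build_filter(user_id: str, requested_departments=None) -> dict:
    """Return a metadata filter that never exceeds the caller's entitlements."""
    allowed = ENTITLEMENTS.get(user_id, set())
    if requested_departments is None:
        granted = allowed
    else:
        # Intersect: the client can narrow the search but never widen it.
        granted = allowed & set(requested_departments)
    if not granted:
        # Fail closed: no entitlement means no search at all.
        raise PermissionError(f"{user_id!r} has no access to the requested departments")
    # Assumed filter shape; adapt to your vector store's own syntax.
    return {"department": {"$in": sorted(granted)}}
```

With this in place, a request from `alice` for "Finance OR HR" is reduced to Finance only, and a request for HR alone is rejected outright instead of being passed to the store.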

Or worse: what if the metadata is wrong? A document gets uploaded without a department tag. Now it’s visible to everyone. According to post-implementation reviews, 73% of security failures came from incomplete or missing metadata. One missing tag. One overlooked document. One leak.
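A simple guard against the missing-tag failure is to check metadata at ingestion and quarantine anything incomplete, so an untagged document is never embedded in the first place. The following is a minimal sketch. The required tag names, the document dictionary shape, and the `triage` function are all assumptions for illustration.

```python
REQUIRED_TAGS = ("department", "sensitivity")  # assumed tag names


def missing_tags(metadata: dict) -> list:
    """Return the required tags that are absent or empty."""
    return [tag for tag in REQUIRED_TAGS if not metadata.get(tag)]


def triage(documents: list) -> tuple:
    """Split incoming documents into (indexable, quarantined)."""
    indexable, quarantined = [], []
    for doc in documents:
        if missing_tags(doc.get("metadata", {})):
            quarantined.append(doc)  # held back until someone tags it
        else:
            indexable.append(doc)
    return indexable, quarantined


incoming = [
    {"id": "doc-1", "metadata": {"department": "Finance", "sensitivity": "high"}},
    {"id": "doc-2", "metadata": {"sensitivity": "low"}},                    # no department
    {"id": "doc-3", "metadata": {"department": "", "sensitivity": "low"}},  # empty department
]
indexable, quarantined = triage(incoming)
```

The design choice here is to fail closed: a document with incomplete metadata is invisible to everyone rather than visible to everyone.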

That’s why the Cloud Security Alliance rates metadata filtering at only 6.5 out of 10. It’s a start. But it’s not a solution.

The real answer? Layer it. Combine metadata filtering with redaction. Use RLS to block access to the wrong documents. Use redaction to scrub what’s left. Then add query validation to catch sneaky prompts.

What the Big Players Don’t Tell You

Amazon Bedrock. Google Vertex AI. Microsoft Azure AI. All promise "easy RAG." But here’s the fine print: none of them include row-level security out of the box.

Amazon Bedrock? You need to write a custom Lambda function to filter results. That adds 15-25% latency. It’s doable. But it’s not built-in. You’re patching a hole after the fact.

And if you’re using LangChain? Good luck. GitHub issue #1243 has 87 comments from developers stuck trying to get metadata filtering to work across different vector stores. One user wrote: "The biggest trap is assuming vector DBs have enterprise-grade security."

Meanwhile, startups like Cerbos and Lasso Security are building tools specifically for this. Cerbos’ December 2023 update cut implementation time by 40%. Lasso’s Context-Based Access Control (CBAC) looks at not just who you are, but what you’re asking, when, and from where. That’s next-level.

But here’s the truth: if you’re using a cloud LLM with your internal data, you’re responsible for securing it. The vendor won’t do it for you. Not yet.

Real-World Implementation: What It Actually Takes

You don’t need a team of 10 engineers. But you do need a plan.

Start here:

- Tag everything. Go through your top 100 documents. Add metadata: department, role, region, sensitivity. Use a spreadsheet. Automate it later.

- Redact first. Run all documents through Presidio or a similar tool before embedding. Test it on real data. Does it catch all SSNs? All email addresses? All account numbers?

- Filter at query time. Use Cerbos, Oso, or build your own filter. Make sure the vector store only gets queries with approved metadata.

- Test like a hacker. Ask: "Show me all documents not tagged with HR." "Show me documents with no department." "What’s the CEO’s email?" If the AI answers, you failed.

- Monitor. Log every query. Watch for unusual patterns. Someone suddenly asking for 50 HR docs in 2 minutes? That’s not a user. That’s a breach.
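The monitoring step above can start as a sliding-window counter per user. This sketch assumes the thresholds from the example (50 documents in 2 minutes). The `QueryMonitor` class is hypothetical, and timestamps are passed in explicitly as seconds so the logic is easy to test. In a real service you would feed it the time of each logged query.

```python
from collections import defaultdict, deque


class QueryMonitor:
    """Flag users who retrieve too many documents within a time window."""

    def __init__(self, max_docs=50, window_seconds=120):
        self.max_docs = max_docs
        self.window_seconds = window_seconds
        self._events = defaultdict(deque)  # user_id -> deque of (timestamp, doc_count)

    def record(self, user_id, doc_count, now):
        """Log one retrieval; return True if the user hit the threshold."""
        events = self._events[user_id]
        events.append((now, doc_count))
        # Drop events that have fallen out of the window.
        while events and events[0][0] <= now - self.window_seconds:
            events.popleft()
        return sum(count for _, count in events) >= self.max_docs


monitor = QueryMonitor()
first = monitor.record("contractor-7", 20, now=0)
second = monitor.record("contractor-7", 20, now=60)
third = monitor.record("contractor-7", 10, now=100)
```

A `True` return is only a signal. What happens next (alerting, throttling, or blocking the session) is a policy decision this sketch leaves open.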

Experienced teams can set this up in 35-60 hours. If you’re starting from scratch? Add 2-3 weeks for learning. And don’t skip testing. One company skipped it. A contractor got access to 12,000 payroll records. They got fined $870,000.

The Future: Faster, Safer, and Regulated

Things are changing fast. AWS announced it’s integrating Amazon Verified Permissions with Bedrock, expected in Q2 2024. That means native row-level security. No more custom Lambdas.

IBM Research just showed homomorphic encryption can work with semantic search. You can search encrypted data without decrypting it. Performance hit? Only 12-18%. That’s huge. But it’s still 12-18 months from being production-ready.

And regulation? It’s coming. GDPR fines for AI breaches jumped 220% in 2023. NIST’s AI Risk Framework says you need "context-aware access controls." That’s RLS and redaction. By 2025, 87% of security leaders expect these to be mandatory.

Right now, you have a choice: build it yourself, pay for a tool, or risk your data. The cost of building? A few weeks of engineering. The cost of a breach? Millions. And your reputation.

Susannah Greenwood

I'm a technical writer and AI content strategist based in Asheville, where I translate complex machine learning research into clear, useful stories for product teams and curious readers. I also consult on responsible AI guidelines and produce a weekly newsletter on practical AI workflows.
