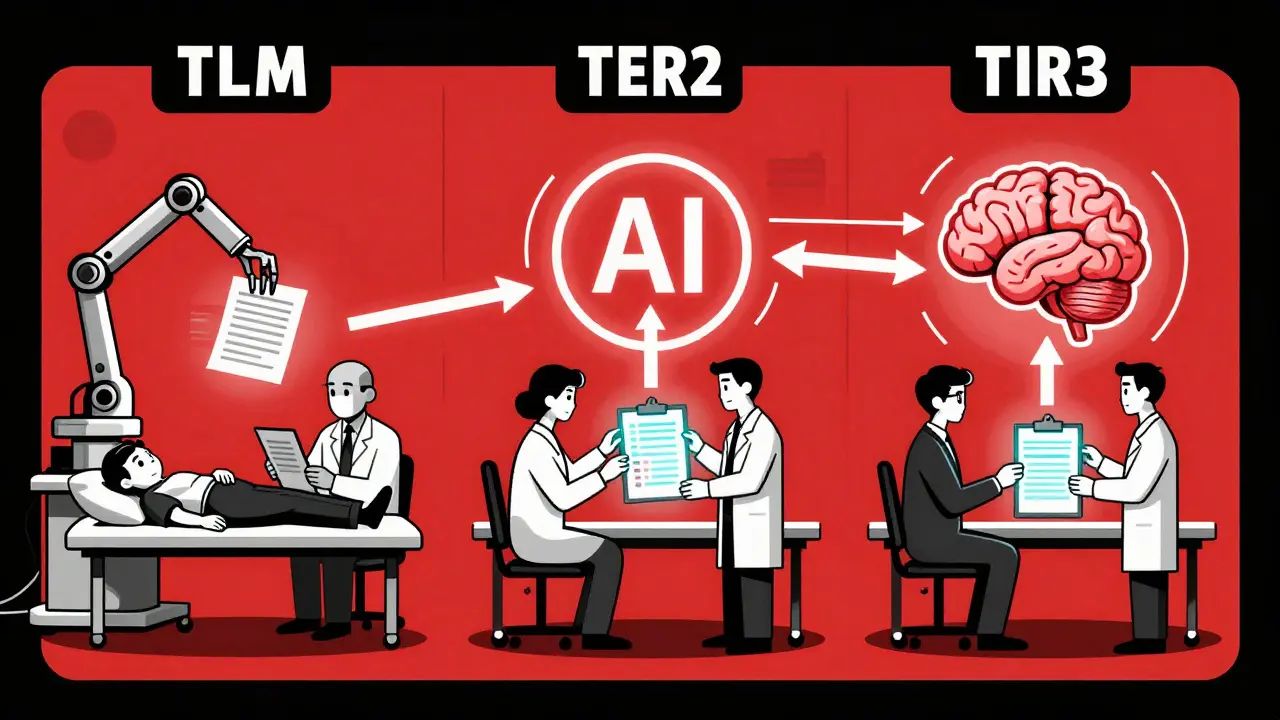

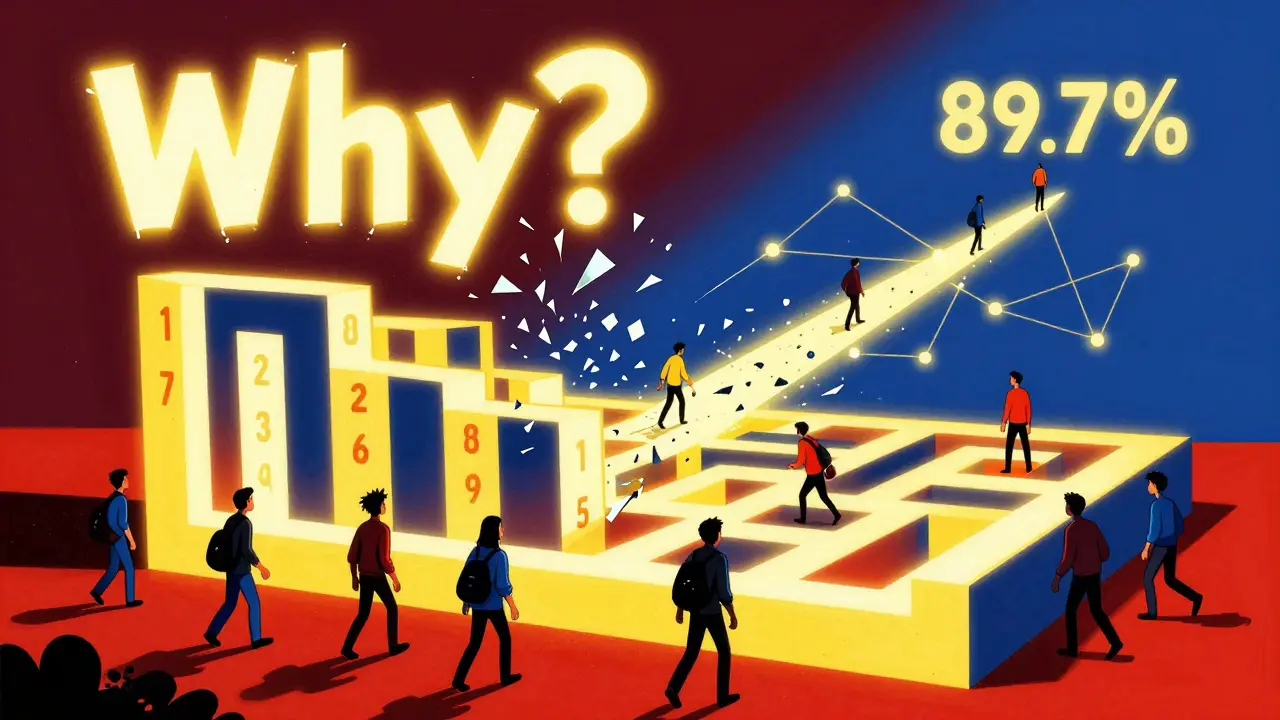

Human-in-the-Loop Evaluation Pipelines for Large Language Models

Human-in-the-loop evaluation pipelines combine AI speed with human judgment to ensure large language models produce accurate, safe, and fair outputs. Learn how tiered systems cut review time while improving quality.

Best Visualization Techniques for Evaluating Large Language Models

Discover the most effective visualization techniques for evaluating large language models, from bar charts and scatter plots to heatmaps and parallel coordinates, and learn how to avoid common pitfalls in model assessment.