Multimodal Transformer Foundations: How Text, Image, Audio, and Video Embeddings Are Aligned

Imagine you show a machine a video of a dog barking at a squirrel. It sees the dog, hears the bark, and reads the caption: "Dog chasing squirrel." Now imagine it can answer questions like "What animal made that sound?" or "Is the dog happy?" without being explicitly trained on those exact questions. That’s not science fiction; it’s what multimodal transformers do today. They’re the backbone of systems that take in the world the way humans do: through sight, sound, and language, all at once.

How Multimodal Transformers Work

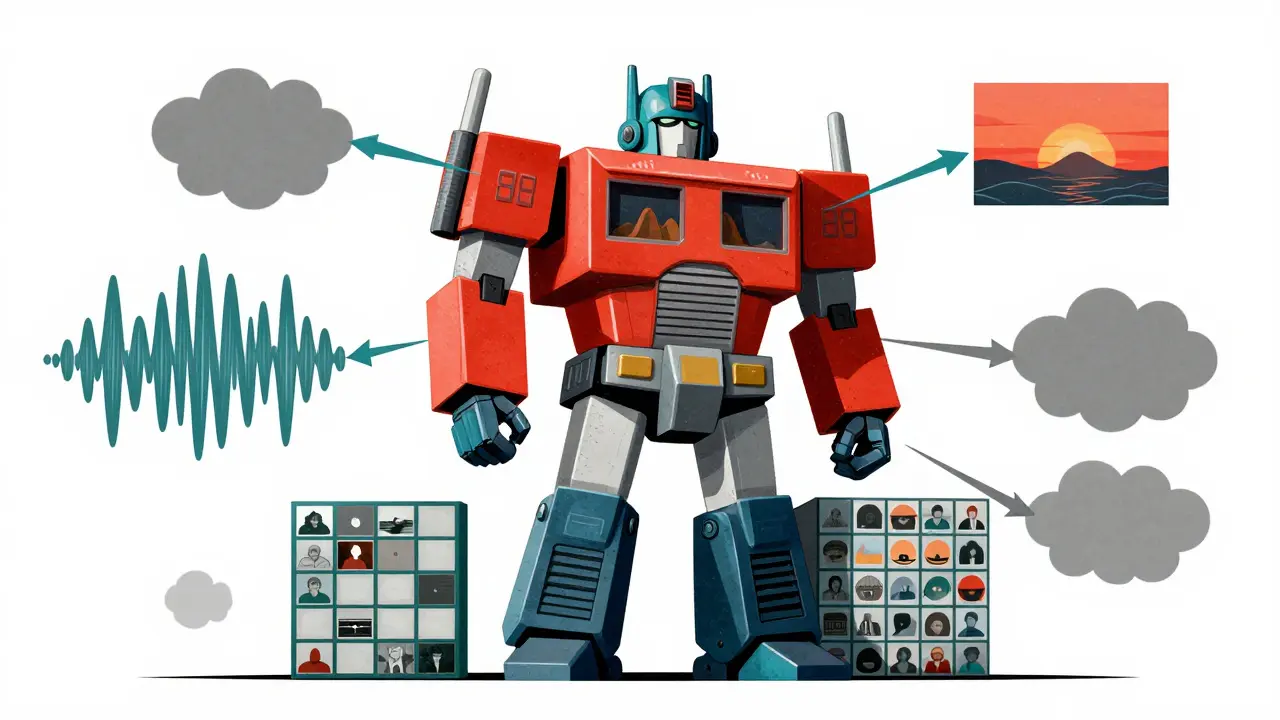

Multimodal transformers don’t treat text, images, audio, and video as separate puzzles. Instead, they turn them all into numbers, called embeddings, that live in the same space. Think of it like translating different languages into one universal language where words from each language can be matched up by meaning. A picture of a sunset, the word "sunset," and the sound of waves crashing at dusk all get mapped to nearby points in this shared space.

This starts with modality-specific encoders. Text goes through a tokenizer like WordPiece, which breaks sentences into subword units; each subword token becomes a 768-dimensional vector. Images are split into 16x16 pixel patches, each turned into a vector by a Vision Transformer (ViT). Audio is converted into mel spectrograms (image-like representations of sound frequencies over time) and fed into models like the Audio Spectrogram Transformer (AST). Video is treated as a stack of images over time: tubelet embedding breaks it into 16-frame chunks, each with spatial patches, creating a 3D representation of motion and content.

These encoded chunks don’t go into separate models. They’re fed together into a single transformer backbone. That’s the key difference from older two-stream models like ViLBERT, which ran separate transformers for each modality and then tried to stitch them together. Single-stream architectures like VATT process everything through the same attention layers, letting the model learn how the modalities relate to each other naturally.

Alignment: The Real Magic
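Before turning to alignment, the encoder step above can be made concrete with some shape arithmetic. The sketch below is illustrative only: the 224x224 RGB input, the 32-frame clip length, and the helper names `patch_tokens` and `tubelet_tokens` are assumptions, not details taken from any specific model’s code. It counts how many tokens a patch or tubelet split produces and how many raw values each token holds before any learned projection.

```python
def patch_tokens(height, width, patch=16, channels=3):
    """Return (token_count, values_per_token) for non-overlapping square patches."""
    if height % patch or width % patch:
        raise ValueError("image size must be divisible by the patch size")
    count = (height // patch) * (width // patch)
    return count, patch * patch * channels


def tubelet_tokens(frames, height, width, t=16, patch=16, channels=3):
    """Return (token_count, values_per_token) for non-overlapping t-frame tubelets."""
    if frames % t:
        raise ValueError("frame count must be divisible by the tubelet length")
    per_chunk, per_patch = patch_tokens(height, width, patch, channels)
    return (frames // t) * per_chunk, t * per_patch


# Assumed inputs: a 224x224 RGB image and a 32-frame 224x224 RGB clip.
image_count, image_values = patch_tokens(224, 224)
video_count, video_values = tubelet_tokens(32, 224, 224)
print(image_count, image_values)   # 196 768
print(video_count, video_values)   # 392 12288
```

A flattened 16x16 RGB patch happens to hold 768 raw values, but in practice a learned linear projection maps each patch or tubelet to the model’s embedding width, which does not have to match the raw size.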

Putting embeddings in the same space isn’t enough. They have to be aligned, meaning similar concepts across modalities should be close together and unrelated ones far apart. That’s done with contrastive learning. The model is trained using triplet loss: for every positive pair (like a video of a cat meowing and the text "cat meowing"), it finds a negative pair (like the same video with the text "dog barking"). It then adjusts the embeddings so the positive pair gets closer and the negative pair gets farther, with a margin of 0.2 as used in VATT.

The result? On the MSR-VTT video-text retrieval task, properly aligned models hit 78.3% recall@10. That means, given a text query like "woman riding a bicycle," the system finds the correct video in the top 10 results nearly 8 out of 10 times. Unimodal systems? They barely break 62%. That gap isn’t small; it’s the difference between a useful tool and a frustrating one.

Architecture Choices: Single-Stream vs. Two-Stream
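Before comparing architectures, the triplet objective and the recall@k metric described above can be sketched in plain Python. This is a minimal illustration under assumptions: it uses cosine distance and toy 3-dimensional vectors, and the function names are hypothetical. Only the 0.2 margin comes from the text.

```python
import math


def cosine_distance(a, b):
    """1 - cosine similarity between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return 1.0 - dot / norm


def triplet_loss(anchor, positive, negative, margin=0.2):
    """Hinge loss: zero once the negative is at least `margin` farther than the positive."""
    return max(0.0, cosine_distance(anchor, positive)
               - cosine_distance(anchor, negative) + margin)


def recall_at_k(queries, candidates, k):
    """Fraction of queries whose matching candidate (same index) ranks in the top k."""
    hits = 0
    for i, q in enumerate(queries):
        ranked = sorted(range(len(candidates)),
                        key=lambda j: cosine_distance(q, candidates[j]))
        hits += i in ranked[:k]
    return hits / len(queries)


# Toy embeddings (assumed values, for illustration only).
video_cat = [0.9, 0.1, 0.0]
text_cat = [1.0, 0.0, 0.0]
text_dog = [0.0, 1.0, 0.0]

print(triplet_loss(video_cat, text_cat, text_dog))          # 0.0: already separated by more than the margin
print(triplet_loss(video_cat, text_dog, text_cat) > 0)      # True: the swapped pair is penalized
print(recall_at_k([video_cat], [text_cat, text_dog], k=1))  # 1.0
```

A loss of zero means the triplet contributes no gradient, which is why training setups of this kind usually care about how negatives are chosen.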

There are two main ways to build these systems. The two-stream approach, used in models like LXMERT, keeps separate transformers for text and vision, then adds cross-attention layers to let them talk. It works well (75.2% accuracy on VQA v2), but it’s heavy: it needs 23% more parameters than single-stream models.

Single-stream models like VATT merge all modalities early. After encoding, text, image, audio, and video tokens are mixed into one sequence and processed together. This cuts down on complexity and reduces parameter count by about 18%, while matching or beating two-stream performance. VATT-v2, released in October 2024, hit 89.7% accuracy on Kinetics-400, a major video action benchmark, up 4.2% from the previous version.

Then there’s co-tokenization, where all modalities are tokenized into a unified vocabulary. Twelve Labs’ approach showed 3.7% better performance on video QA tasks, but it added 29% more compute cost. Trade-offs like this aren’t theoretical; they shape what companies can realistically deploy.
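The early merge used by single-stream models can be illustrated with a toy sequence builder. Everything here is a simplified assumption rather than VATT’s actual code: the `Token` type, the modality tags, and the tiny 4-dimensional vectors are hypothetical, and a real model would add positional and modality embeddings before its attention layers.

```python
from dataclasses import dataclass
from typing import Dict, List


@dataclass
class Token:
    modality: str          # e.g. "text", "image", "audio", "video"
    vector: List[float]    # embedding already projected to the shared width


def build_single_stream(encoded: Dict[str, List[List[float]]], width: int) -> List[Token]:
    """Concatenate per-modality token lists into one sequence for a shared backbone."""
    sequence: List[Token] = []
    for modality, vectors in encoded.items():
        for vec in vectors:
            if len(vec) != width:
                raise ValueError(f"{modality} token has width {len(vec)}, expected {width}")
            sequence.append(Token(modality, vec))
    return sequence


# Toy encoder outputs (assumed shapes): 2 text, 3 image, and 1 audio token, width 4.
encoded = {
    "text": [[0.1] * 4, [0.2] * 4],
    "image": [[0.3] * 4, [0.4] * 4, [0.5] * 4],
    "audio": [[0.6] * 4],
}
sequence = build_single_stream(encoded, width=4)
print(len(sequence))                       # 6
print([t.modality for t in sequence])
```

The width check reflects a practical constraint: every encoder has to project to the same dimensionality before its tokens can share one sequence.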

Why Audio Is Still the Hardest

Text and images are relatively easy to align. Audio? Not so much. Even the best audio models lag behind. On LibriSpeech, spectrogram-based systems reduce word error rates by only 82.4%, compared to 92.1% for text-only ASR. Why? Sound is messy. Background noise, accents, overlapping voices, and variable recording quality make it harder to extract clean signals. Plus, audio embeddings often have lower resolution: 100ms intervals mean you lose fine-grained timing details that matter for speech or music.

This creates a problem: multimodal systems can be biased toward text and vision. Dr. Yann LeCun pointed out in June 2024 that most systems are just alignment engines. They can match a dog image to the word "dog," but they can’t reason about why the dog is barking. Was it scared? Excited? Alerting its owner? That’s where true understanding fails.

Real-World Performance and Requirements

Training these models isn’t cheap. You need at least eight NVIDIA A100 GPUs with 80GB VRAM each and 512GB of system RAM. That’s enterprise-grade hardware. Inference? One A100 can handle real-time 224x224 video at 30fps. But even then, the pipeline adds up: DistilBERT for text (50.4ms), ViT for images (87.2ms), SSAST for audio (112.6ms). Total latency per sample? Around 250ms. For real-time apps like live captioning or video search, that’s acceptable. For interactive systems? It’s tight.

Developers on GitHub report that building multimodal pipelines takes 3.2x more code than unimodal ones. Audio-video sync is the top complaint: 78% of negative reviews mention it. One Reddit user spent three weeks just getting embedding dimensions to match across modalities. Documentation is often thin. Meta’s VATT implementation has only a 2.4/5 rating, with 47 open GitHub issues about missing examples.

Adoption: Hype vs. Reality
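Before turning to adoption, the latency figures quoted above are worth tallying. The short calculation below assumes the three encoders run one after another. The parallel case, where latency is bounded by the slowest encoder, is an assumption added for comparison and is not a claim from the quoted measurements.

```python
# Per-encoder latencies quoted in the text, in milliseconds.
latency_ms = {"DistilBERT (text)": 50.4, "ViT (image)": 87.2, "SSAST (audio)": 112.6}

sequential_ms = sum(latency_ms.values())   # encoders run back to back
parallel_ms = max(latency_ms.values())     # assumption: encoders run concurrently
frame_budget_ms = 1000 / 30                # time between frames at 30 fps

print(f"sequential: {sequential_ms:.1f} ms")
print(f"parallel:   {parallel_ms:.1f} ms")
print(f"frame budget at 30 fps: {frame_budget_ms:.1f} ms")
print(f"frames elapsed during one sequential pass: {sequential_ms / frame_budget_ms:.1f}")
```

Under these assumptions a sequential pass spans roughly seven to eight frame intervals, which is one way to see why throughput (frames per second) and end-to-end latency are separate constraints.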

Academia is all in. VATT leads the Video-Text Retrieval leaderboard with 86.2% R@1. NeurIPS 2024 had 87 papers on multimodal AI, up from 42 in 2023. But industry adoption? Slow. Only 17% of enterprises have deployed multimodal systems, according to Gartner. Most stick with text-only transformers, which are cheaper, easier, and good enough for chatbots and search.

Fortune 500 companies are experimenting: 78% have piloted multimodal tech, but only 22% have scaled it. Manufacturing and healthcare lead, with 34% and 29% adoption respectively. Retail and finance lag, at 18% and 12%. Why? Cost, complexity, and unclear ROI. Gartner reports a 61% project failure rate among early adopters who didn’t tie the tech to a concrete business need.

GDPR and the EU AI Act add another layer. Compliance pushes implementation costs up by 18-22%. Many European firms have paused projects until the December 2025 enforcement deadline is clearer.

What’s Next?

The field is moving fast. VATT-v2 introduced modality dropout: training the model to handle missing inputs. If audio fails, the system still works using just video and text. Microsoft’s alignment distillation technique cuts embedding mismatch by 37.4%, making cross-modal matching more reliable.

New ideas are emerging. "Foundation model surgery" lets you adapt a pre-trained text model like BERT to vision or audio with 90% less data. "Embodied multimodal learning" adds sensor data, like motion or touch, to the mix, opening doors for robotics.

Forrester predicts the market will hit $12.7 billion by 2027. Video analytics will be the biggest driver: 68% of enterprise video systems will use multimodal transformers by 2026, up from 29% today.

But the "modality gap" remains. Text models hit 92.1% accuracy. Audio? 78.4%. Until that gap closes, systems will keep favoring text and vision. The next breakthrough won’t come from bigger models. It’ll come from smarter alignment, better audio encoding, and models that don’t just match, but reason.

What You Can Do Today
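One idea that is easy to prototype today is the modality dropout mentioned above. The sketch below is a generic version under assumptions, not VATT-v2’s implementation: the drop probability of 0.3, the rule that at least one modality always survives, and the function name `apply_modality_dropout` are all illustrative choices.

```python
import random
from typing import Dict, List, Optional


def apply_modality_dropout(
    batch: Dict[str, List[float]],
    drop_prob: float = 0.3,
    rng: Optional[random.Random] = None,
) -> Dict[str, List[float]]:
    """Randomly remove whole modalities from one training sample.

    At least one modality is always kept so the sample is never empty.
    """
    rng = rng or random.Random()
    kept = {name: feats for name, feats in batch.items() if rng.random() >= drop_prob}
    if not kept:
        # Everything was dropped: restore one modality chosen at random.
        name = rng.choice(sorted(batch))
        kept[name] = batch[name]
    return kept


sample = {"text": [0.1, 0.2], "video": [0.3, 0.4], "audio": [0.5, 0.6]}
rng = random.Random(0)  # fixed seed so the run is repeatable
for _ in range(3):
    print(sorted(apply_modality_dropout(sample, rng=rng)))
```

Applied during training, this forces the downstream model to make predictions from incomplete inputs, which is the behavior you want when a microphone or camera feed drops out at inference time.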

If you’re a developer or researcher, start small. Use Hugging Face’s multimodal library to experiment with VATT or OpenAI’s CLIP. Fine-tune on your own dataset: medical video, retail product videos, or customer service recordings. One user reported reaching 85% accuracy on a medical video task with just 1,200 examples, versus 15,000 for a CNN baseline.

Focus on a clear use case: cross-modal search, automated video captioning, or multimodal QA. Don’t chase the tech for its own sake. The most successful projects aren’t the ones with the most modalities. They’re the ones that solve one hard problem better than anything else.

What’s Holding Back Real Progress?

It’s not the math. It’s not the hardware. It’s the noise. Too many companies think adding audio and video to a text model makes it "smarter." But unless you’re aligning the modalities with purpose (unless you’re asking how the sound of a machine’s hum relates to its vibration patterns, or how a patient’s tone of voice correlates with their medical images), you’re just adding complexity without value. The real challenge isn’t building a model that sees, hears, and reads. It’s building one that understands the relationships between them. And that’s still a work in progress.

Susannah Greenwood

I'm a technical writer and AI content strategist based in Asheville, where I translate complex machine learning research into clear, useful stories for product teams and curious readers. I also consult on responsible AI guidelines and produce a weekly newsletter on practical AI workflows.

7 Comments

It's wild how these models just sort of... *feel* things, you know? Like when you hear a dog bark and see its tail wag, you don't need a label to know it's excited. The machine isn't just matching pixels and phonemes-it's starting to sense context. That’s not pattern recognition anymore. That’s something closer to perception. I wonder if this is the first step toward machines having something like intuition.

so like i was watching this video of my cousin’s cat and the ai just said ‘cat is sad’ and i was like wait what how does it know the cat is sad its just sitting there and then i realized the audio had a tiny meow and the lighting was kinda dim and the tail was low and i just lost it lol like how is this even fair we spent 10 years in school learning to read emotions and this thing does it in 0.2 seconds and its not even trained on cats its just like oh this is a vibe

They’re calling this ‘understanding’? Please. This is just fancy pattern matching dressed up in academic jargon. The same tech that tells you a dog is barking also got used to track protesters in Xinjiang and flag dissenting speech in India. They don’t care if machines ‘understand’-they care if they can predict, control, and censor. You think this is about AI helping doctors? Nah. It’s about governments and corporations seeing every sound, every glance, every sigh-and turning it into data points for surveillance. Wake up. This isn’t progress. It’s a velvet cage.

okay so i read like half of this and got lost at the part about tubelet embedding and then i just skipped to the part where it said ‘audio is the hardest’ and honestly that’s all i needed. i tried to make a voice-to-text app once and spent 3 weeks just trying to get it to not think ‘hello’ was ‘helo’ or ‘helo’ was ‘hello’ or ‘hello’ was ‘helicopter’ and that was with one modality. now you want to throw in video and images and make it all talk to each other? bro. i just want my phone to know when i’m yelling at my wifi router and not send me a notification that says ‘user is angry about birds’

This is honestly one of the most hopeful things I’ve read in a long time. Not because it’s perfect, but because it’s trying. We’ve spent so long treating AI as a tool that just answers questions, but now it’s starting to listen-to really listen-and that changes everything. Imagine a child with autism who can’t speak but smiles when they hear their favorite song, and an AI notices the way their eyes light up and the rhythm of their breathing changes. That’s not just accuracy. That’s connection. We’re not just building smarter machines. We’re building kinder ones.

Thank you for sharing this thoughtful overview. I appreciate the balanced view on both the potential and the challenges. It is clear that alignment between modalities is not merely a technical problem, but a conceptual one. The effort to connect sound, sight, and language reflects a deeper human desire to make machines see the world as we do. However, we must proceed with care, ensuring that progress serves people, not the other way around. Simplicity and clarity remain vital, even in complex systems.

So if single-stream models are more efficient and perform better, why are so many companies still using two-stream? Is it because the research community published two-stream first and now there’s institutional inertia? Or is there something about keeping modalities separate that makes interpretability easier? I’m curious if anyone’s tried combining single-stream architecture with modality-specific attention heads-like a hybrid approach. Would that give you the efficiency of VATT with the fine-grained control of LXMERT?