Causal Masking in Decoder-Only LLMs: How It Prevents Information Leakage and Powers Text Generation

Causal masking is the key mechanism that lets decoder-only LLMs like GPT-4 generate coherent text by preventing future tokens from influencing past ones. Learn how it works, why it matters, and how developers are improving it.

Vibe Coding Adoption Metrics and Industry Statistics That Matter

Vibe coding adoption is surging, with 84% of developers using AI tools by 2025. But security risks, code quality issues, and skill shortfalls expose a divide between hype and reality. Here are the stats that actually matter.

Post-Generation Verification Loops: How Automated Fact Checks Are Making LLMs Reliable

Post-generation verification loops use automated checks to catch errors in LLM outputs, turning guesswork into reliable results. They're transforming code generation, hardware design, and safety-critical AI, but only where accuracy matters most.

Adapter Layers and LoRA for Efficient Large Language Model Customization

LoRA and adapter layers let you customize large language models with minimal compute. Learn how they work, how they compare, and how to use them effectively, without needing a data center.

Measuring Prompt Quality: Rubrics for Completeness and Clarity

Learn how to measure prompt quality using structured rubrics that evaluate completeness and clarity. Discover the best types, common mistakes, and how to build your own for better AI results.

Privacy Controls for RAG: Row-Level Security and Redaction Before LLMs

RAG systems often leak sensitive data because they give LLMs full access to internal documents. Row-level security and data redaction before the AI sees the data are essential to prevent breaches, comply with regulations, and protect customer trust.

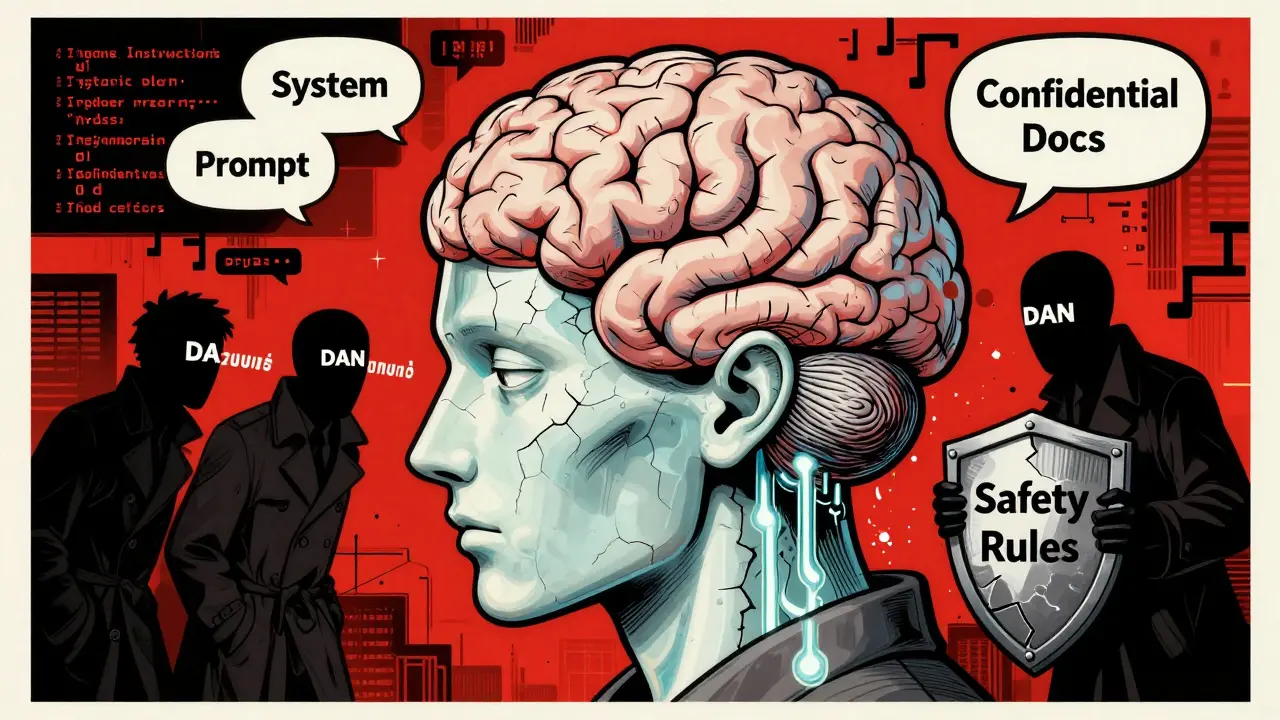

Prompt Injection Risks in Large Language Models: How Attacks Work and How to Stop Them

Prompt injection attacks trick AI models into ignoring their rules, exposing sensitive data and enabling code execution. Learn how these attacks work, which systems are at risk, and what defenses actually work in 2025.

Regulatory Frameworks for Generative AI: Global Laws, Standards, and Compliance

Global regulations for generative AI are now active in the EU, China, California, and beyond. Learn what laws apply to your AI tools, how compliance works, and what steps to take now to avoid fines and legal risks.

Accessibility Risks in AI-Generated Interfaces: Why WCAG Isn't Enough Anymore

AI-generated interfaces are breaking accessibility standards at scale, leaving millions of users behind. WCAG wasn’t built for dynamic AI content, and without urgent changes, algorithmic exclusion will become the norm.

Disaster Recovery for Large Language Model Infrastructure: Backups and Failover

LLM disaster recovery isn't optional anymore. Learn how to back up massive model weights, set up failover across regions, and avoid the top mistakes that cause costly outages in AI infrastructure.

Rotary Position Embeddings (RoPE) in Large Language Models: Benefits and Tradeoffs

Rotary Position Embeddings (RoPE) have become the standard for long-context LLMs, enabling models to handle sequences far beyond training length. Learn how RoPE works, why it outperforms traditional methods, and the key tradeoffs developers need to know.

Grounded Web Browsing for LLM Agents: How Search and Source Handling Power Real-World AI

Grounded web browsing lets AI agents search live websites for real-time information, fixing outdated answers. It now powers enterprise tools with 72%+ accuracy, but it comes with high costs, technical hurdles, and big ethical questions.