Comparative Prompting: How to Ask for Options, Trade-Offs, and Recommendations from AI

Learn how comparative prompting transforms AI from a search tool into a decision partner by asking for structured comparisons, trade-offs, and recommendations based on your specific criteria.

Multimodal Transformer Foundations: How Text, Image, Audio, and Video Embeddings Are Aligned

Multimodal transformers align text, image, audio, and video into a shared embedding space, enabling a single system to interpret them together, much as humans do. Learn how they work, where they're used, and why audio remains the hardest modality to master.

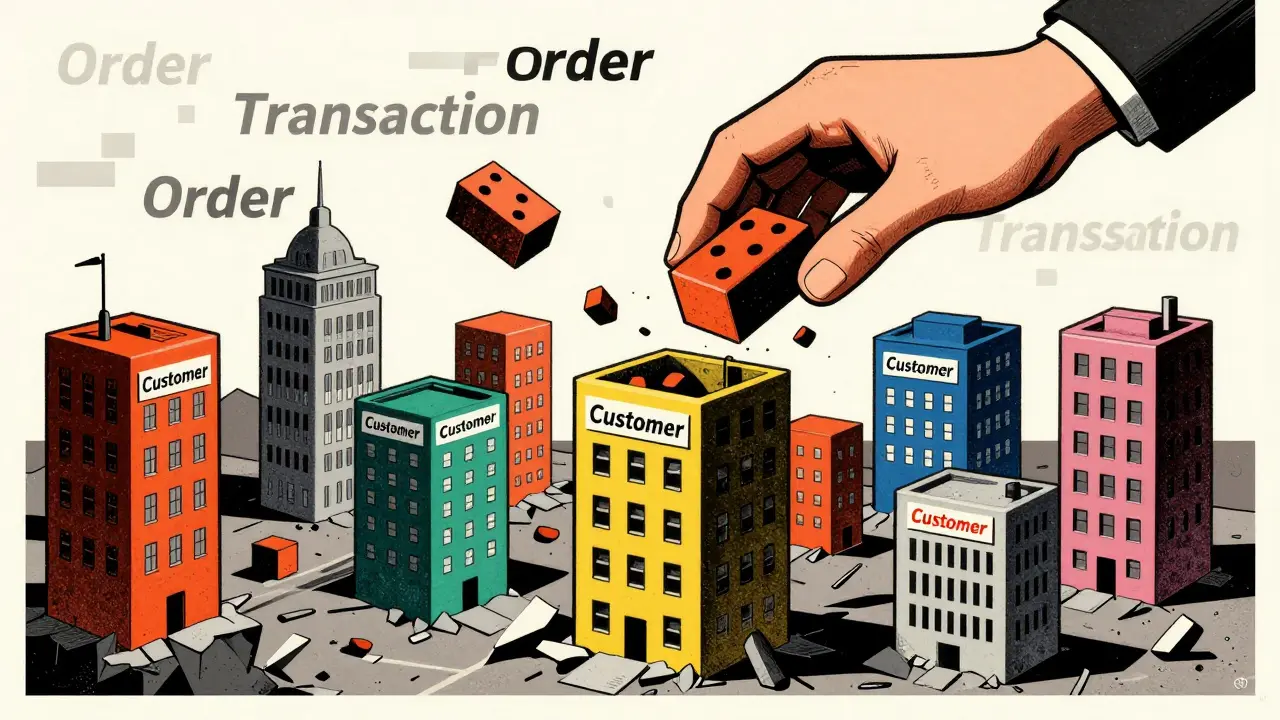

Domain-Driven Design with Vibe Coding: How Bounded Contexts and Ubiquitous Language Prevent AI Architecture Failures

Domain-Driven Design with Vibe Coding combines AI-powered code generation with strategic domain modeling to prevent architecture collapse. Learn how Bounded Contexts and Ubiquitous Language keep AI-generated code clean, consistent, and maintainable.

Governance and Compliance Chatbots: How LLMs Enforce Policies in Real Time

LLM-powered compliance chatbots automate policy enforcement in real time, cutting compliance costs by up to 50% and reducing human error by 75%. Learn how they work, where they excel, and the critical governance rules you can't ignore.

Latency and Cost in Multimodal Generative AI: How to Budget Across Text, Images, and Video

Multimodal AI can understand text, images, and video, but at a steep cost. Learn how to budget for latency and compute expenses across modalities to avoid runaway cloud bills and slow user experiences.

Fine-Tuning for Faithfulness in Generative AI: How Supervised and Preference Methods Reduce Hallucinations

Learn how supervised and preference-based fine-tuning methods reduce hallucinations in generative AI. Discover which approach works best for your use case and how to avoid common pitfalls that degrade a model's reasoning.

How to Reduce Memory Footprint for Hosting Multiple Large Language Models

Learn how to reduce the memory footprint of hosting multiple large language models using quantization, model parallelism, and hybrid techniques. Cut costs, run more models on less hardware, and avoid common pitfalls.

Security KPIs for Measuring Risk in Large Language Model Programs

Learn the essential security KPIs for measuring risk in large language model programs. Track detection rates, response times, and resilience metrics to catch and contain prompt injection, data leaks, and model abuse.

Transformer Pre-Norm vs Post-Norm Architectures: Which One Keeps LLMs Stable?

Pre-norm and post-norm architectures differ in where Layer Normalization is applied relative to each residual connection in a Transformer. Pre-norm enables stable training of deep LLMs with 100+ layers, while post-norm struggles beyond 30 layers. Most modern LLMs, such as GPT-3 and Llama 3, use pre-norm.

How to Prompt for Performance Profiling and Optimization Plans

Learn how to ask the right questions to uncover performance bottlenecks using profiling tools. Get actionable steps to measure, identify, and optimize code effectively with real-world examples from Unity, Unreal Engine, and industry benchmarks.

How Curriculum and Data Mixtures Speed Up Large Language Model Scaling

Curriculum learning and smart data mixtures are accelerating LLM scaling by boosting performance without larger models. Learn how data ordering, complexity grading, and freshness improve efficiency, reduce costs, and outperform training on randomly ordered data.

How to Detect Implicit vs Explicit Bias in Large Language Models

Large language models can appear fair but still harbor hidden biases. Learn how to detect implicit vs explicit bias using proven methods, why bigger models are often more biased, and what companies are doing to fix it.